Ijraset Journal For Research in Applied Science and Engineering Technology

AI and IOT Based Road Accident Detection and Reporting System

Authors: Adesh More, Mitali Mahajan, Hemant Gholap

DOI Link: https://doi.org/10.22214/ijraset.2023.48904

Abstract

Road accidents are increasing daily as the number of automobiles rises. The World Health Organization (WHO) reports an annual global death toll of 1.4 million and an injury toll of 50 million. The main causes of mortality are the absence of medical assistance at the scene of the accident and the lengthy response time of the rescue effort. A cognitive agent-based collision detection and smart accident alarm and rescue system can reduce delays in rescue operations and so has the potential to save many lives. To gather and send accident-related data to the cloud or server, the suggested system consists of a force sensor, GPS module, alarm controller, ESP8266 controller, camera, Raspberry Pi, and GSM module. The accident is then verified using cloud-based deep learning techniques. When the deep learning module confirms an accident, it immediately alerts all nearby emergency services, including the hospital, police station, mechanics, etc.

Introduction

I. INTRODUCTION

As the population grows, the demand for vehicles is rising sharply, and the number of traffic accidents has increased significantly over the past few years [6][7]. According to a recent study, overspeeding is the primary contributing factor to accidents. The location of the accident scene is crucial for any rescue operation. In a city or on a busy road, emergency help is soon accessible; in low-traffic zones or on less-travelled roads it is not, and delivering timely emergency relief is challenging. Serious injuries are observed to turn fatal when medical attention is inadequate. Due to the rise in traffic accidents, road safety receives a lot of attention from industry. An intelligent system for accident detection and warning is necessary to reduce the number of deaths from road accidents. Once the system identifies an accident, it will notify all emergency services, including hospitals, police stations, and so on [1].

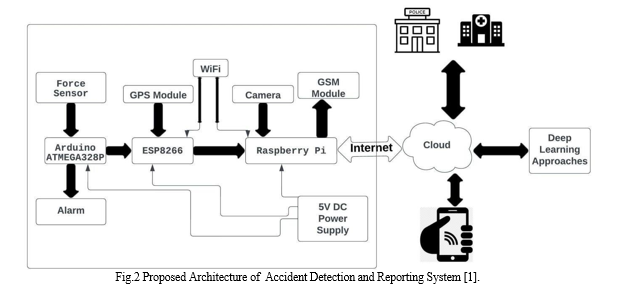

In our project, we suggest a force detection system for accident detection. It uses an ESP8266 controller, camera, Raspberry Pi, GSM module, force sensor, GPS module, and alarm controller to gather and send accident-related data to the cloud or server [1],[2],[3]. A deep learning method then makes it possible to determine the accident's severity and notify the police station and hospitals appropriately.

The suggested system comprises two stages:

- Using IoT and deep learning, an accident is detected in the first phase.

- The second phase involves sending accident data to the emergency departments for the rescue effort.

II. LITERATURE REVIEW

The purpose of this literature review is to examine previous research, highlight leading studies, identify trends, and establish a theoretical framework. For the development of the accident detection and reporting system, we conducted a systematic literature review (SLR) to investigate the development of research on accident detection and on reporting systems. The SLR approach involves searching, collecting, and analysing scientific literature in order to answer predetermined research questions [1].

Many studies have been carried out on accident detection and reporting systems. They cover many aspects of accident detection and its reporting to emergency departments, such as methods, development, features, and technologies [2],[3],[4],[5],[11].

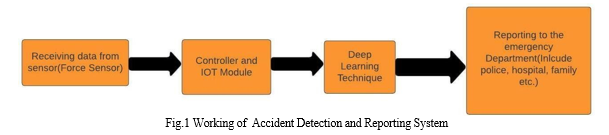

III. WORKING OF ACCIDENT DETECTION AND REPORTING

A. System

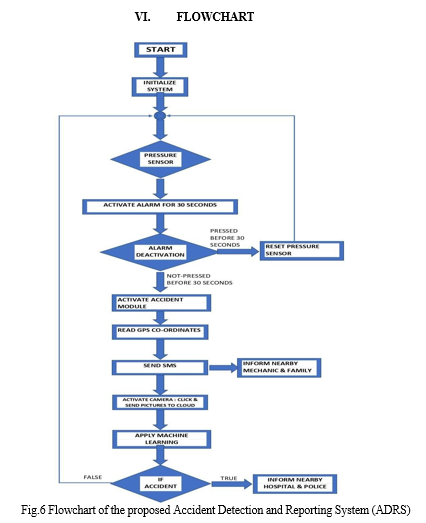

The accident detection and reporting system works in the following way:

B. Proposed Architecture

To address current issues with accident detection and reporting systems, this study offers an integrated Accident Detection and Reporting System (ADRS). We combine IoT and deep learning to obtain higher accuracy. There are two phases to the proposed ADRS: IoT and deep learning are used in the first phase to detect accidents, and reporting of the accident is handled in the second phase. Fig. 2 illustrates the architecture of the proposed system [1][2].

IV. DETAILS OF PROPOSED ACCIDENT DETECTION AND REPORTING SYSTEM

The proposed system works in two main parts:

A. Accident Detection

IoT and AI capabilities are used in this module to identify accidents and gauge their severity. Different sensors are used to identify accidents and gather accident-related data like location, force, etc. Deep learning techniques are employed in order to reduce the false alarm rate[1]. The details of the IoT and AI module are as follows:

1. IoT Module

The primary function of this module, which serves as the core of the proposed ADRS, is accident detection. It makes use of many sensors and IoT gadgets. A separate power bank and a 5V DC power supply connected to the vehicle's battery are needed to power the entire hardware system. A detailed description of various sensors and other components is given below:

a. Force Sensor: It serves as the foundation of the ADRS. It converts applied mechanical forces, such as tensile and compressive forces, into output signals whose value indicates the force's intensity [1].

b. ATMEGA328P: It is a microcontroller unit that has a direct connection to the force sensor and manages a number of other devices. When the force value exceeds 350 Pa, an accident is detected. Accident detection sets off the alarm, and if the alert is not reset after 30 seconds, a signal to activate Node MCU is sent.

c. Node MCU (ESP8266): It is the master microcontroller unit to which the ATMEGA328P microcontroller, Raspberry Pi, GPS module, and servo motor are all connected. As a Wi-Fi-equipped controller, it transmits all accident-related data to the cloud [1],[2].

d. Raspberry Pi: The Raspberry Pi is a small computer with an operating system that can perform many of the tasks of a desktop or laptop. It is a capable controller that links IoT devices. The Raspberry Pi 3B+ in our ADRS is linked to a camera and uploads pictures and videos to the cloud to assess the seriousness of the accident.

e. Pi Camera: The Raspberry Pi is connected to the Pi camera, which is mounted to the servo motor. When an accident is identified, the video and photos are taken and sent to the cloud, where deep learning is applied to evaluate the videos and photos and determine the accident's severity. Deep learning is mostly used to reduce the rate of false alarms and gauge the severity of accidents.

f. GSM SIM900A: It supports the rescue effort and communicates with other emergency services like a police station, hospitals, family members, etc. It has a link with the Raspberry Pi. It connects to the Node MCU and is used to determine the accident location [1-4].

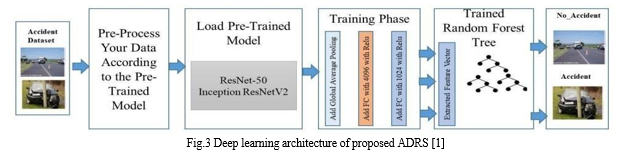

2. Deep Learning Module

Over the past few decades, deep learning algorithms have undergone significant development and can now be used with excellent accuracy in many fields [9-13]. Most ADRS now in use identify accidents using either deep learning or IoT sensors alone. As a result, information is sent to all emergency numbers as soon as an accident is detected. Since such methods rely solely on the sensor, they may have a higher false detection rate; for example, they recognise a sudden stop by the driver as an accident. To reduce the false detection rate, we use transfer learning with two pre-trained models, ResNet-50 and InceptionResNetV2. These models have been trained to classify the input video into two categories, namely accident and non-accident. Only if the model's output is accident is the accident location shared with the emergency services [1].

Deep learning architecture of proposed ADRS (Accident Detection and Reporting System):

The main objective of the deep learning technique is the reduction of false alarms. The speed of moving vehicles is one of the primary causes of traffic accidents, and most ADRS now in use rely primarily on the speed sensor to identify an accident: the system registers an accident if the vehicle's speed changes abruptly and goes above the set threshold. The false detection rate of these systems may therefore be significant, since the vehicle's speed may change under a variety of conditions, such as a speed breaker, a road obstruction, or a mechanical issue. The proposed ADRS receives input video from the dashboard camera and employs deep learning to reduce this false detection rate.

As soon as the device detects the accident, the Node MCU will activate the camera and send the activation signal to the Raspberry Pi. The camera will record a 30-second video, capture some still photographs, and upload the data to the cloud for analysis. To determine whether the current event is an accident or not, pre-trained ResNet-50 and InceptionResNetV2 models are trained in the cloud. The input video is first split into frames, which are sent to the model. This module helps reduce erroneous accident detections and improve the model's accuracy. Figure 3 shows the architecture of the deep learning module [1].

3. Pre-Trained Model

The training and accuracy of a deep learning-based model depend on the quantity and quality of the data, because deep learning models need a lot of data. To obtain high-quality, real-time data, an IoT kit can be built that gathers data from the accident site and sends it to the cloud for further processing. When only a small dataset is available, a pre-trained model is one of the most effective options [1]. These models are accurate because they have already been trained on a large dataset, so we only need to train them for our particular objective. As a result, a pre-trained model can reach higher accuracy on a smaller dataset. Many pre-trained models are available, including VGGNet, ResNet-50, InceptionNet, and InceptionResNetV2.

The two pre-trained models used for accident validation are described below:

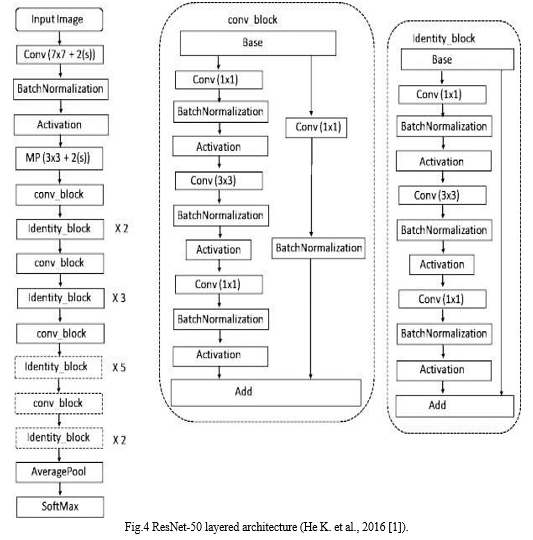

a. ResNet-50: Kaiming He et al. created ResNet-50 in 2015 [1]. Rather than a conventional sequential network like VGGNet, it uses a deep neural network made up of many different building blocks. The model was trained to classify images into 1000 different categories. It consists of 175 convolution blocks and one classification layer with 1000 neurons and the Softmax activation function. Figure 4 shows ResNet-50's layered design [1].

b. InceptionResNetV2: InceptionResNetV2 was created by C. Szegedy et al. in 2017 [1]. This model incorporates the most useful features of both ResNet (residual network) and the Inception network. It is one of the deepest neural networks, with 780 inception blocks (1x1 convolutions without activation). A dense layer of 1000 neurons with a Softmax activation function makes up the final classification layer. Figure 5 shows the layered architecture of InceptionResNetV2.

B. Reporting Accident Information to the Emergency Department

Once the accident is detected, an alarm will start for 30 s. If the vehicle’s passenger resets the alarm, only nearby mechanics’ information will be sent to the registered mobile. It will help the driver if the vehicle has any technical issues. In contrast, if the alarm is not reset after 30 s, then the location information is immediately sent to the nearby hospitals and police station. This phase uses various databases to store the useful information.

- Databases

The proposed ADRS uses four databases to manage the entire system. Detailed information of the different databases is given below.

a. User Personal Details Database: This database contains all user-related information, such as the vehicle owner, address, and a relative's contact number.

b. Vehicle Database: This database comprises all details of the vehicle, such as Vehicle_Number, Vehicle_Name, and Vehicle_Type.

c. Hospital Database: The hospital database stores all information on the hospitals.

d. Police Station Database: It stores all the information about the police station details.
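As an illustration of how the records described above might be laid out, the following is a minimal Python sketch. Only the user and vehicle fields come from the text; the class names, the `FacilityRecord` fields (name, coordinates, contact), the `alert_message` helper, and the message wording are assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class UserRecord:
    # Fields listed for the User Personal Details Database.
    owner_name: str
    address: str
    relative_contact: str


@dataclass
class VehicleRecord:
    # Fields listed for the Vehicle Database.
    vehicle_number: str
    vehicle_name: str
    vehicle_type: str


@dataclass
class FacilityRecord:
    # Hypothetical layout shared by hospital and police station entries;
    # the text does not list the fields these databases store.
    name: str
    latitude: float
    longitude: float
    contact: str


def alert_message(user: UserRecord, vehicle: VehicleRecord,
                  lat: float, lon: float) -> str:
    """Compose the text of an alert (wording is illustrative only)."""
    return (
        f"Accident reported: vehicle {vehicle.vehicle_number} "
        f"({vehicle.vehicle_name}, {vehicle.vehicle_type}), "
        f"owner {user.owner_name}, location {lat:.5f},{lon:.5f}"
    )
```

Keeping hospitals and police stations in one record shape is a convenience of the sketch; the proposed system stores them in separate databases.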

V. ALGORITHM

A. For Accident Detection

Input Data: Value of force (F) and speed (S)

Output: Accident status

    acc ← 0
    if (F > Tforce & S > Tspeed) then
        acc ← 1
    else if (F > Tforce | S > Tspeed) then
        acc ← 1
    end if
    if acc = 1 then
        Activate alarm and set alarm timer (AT = 0)
        Alarm_OFF_timer ← Alarm_OFF()
        if (AT >= 30 seconds) then
            status = Accident_detected
        else
            status = no_accident
            Get nearby mechanics details from database and send message to the owner
        end if
    end if
    if (status = Accident_detected) then
        final_status = call Deep_Learning_Module()
        if (final_status = Accident_detected) then
            Get accident location from GPS
            call rescue_operation_module()
        else
            Get nearby mechanics details from database and send message to the owner
        end if
    end if
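The detection steps above can be sketched in Python roughly as follows. This is an illustration under stated assumptions, not the authors' firmware: the force threshold of 350 is taken from the ATMEGA328P description, the speed threshold of 40.0 is a placeholder because the text gives no value, and the function name, return labels, and the `verify` callback standing in for the deep learning module are hypothetical.

```python
from typing import Callable, Optional

T_FORCE = 350.0        # force threshold quoted in the hardware description
T_SPEED = 40.0         # placeholder: the speed threshold is not stated
ALARM_TIMEOUT_S = 30   # alarm duration before an accident is assumed


def decide(force: float, speed: float,
           seconds_until_reset: Optional[float],
           verify: Callable[[], bool]) -> str:
    """Return 'idle', 'notify_mechanics', or 'start_rescue'.

    seconds_until_reset is None when nobody reset the alarm.
    verify() stands in for the cloud deep learning check.
    """
    # The listing tests AND first and OR second; OR alone is equivalent.
    triggered = force > T_FORCE or speed > T_SPEED
    if not triggered:
        return "idle"

    # A reset inside the 30-second window means the occupants are fine.
    reset_in_time = (seconds_until_reset is not None
                     and seconds_until_reset < ALARM_TIMEOUT_S)
    if reset_in_time:
        return "notify_mechanics"

    # Sensor-level accident: confirm with the deep learning module.
    return "start_rescue" if verify() else "notify_mechanics"
```

Passing the verification step as a callable means the comparatively expensive video analysis only runs when the sensors have triggered and the alarm was not reset in time.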

B. For Rescue or Reporting Operation

Input Data: longitude and latitude

Output: Inform to all emergency services

    slat = starting latitude
    elat = end latitude
    slon = starting longitude
    elon = end longitude
    dis_lat = elat - slat
    dis_lon = elon - slon
    Dist = R * H
    Find nearby police station and hospital using Haversine
    Hosp = nearby_hospital
    Police_station = nearby_police_station
    Get car details, vehicle details, mechanic details
    Send message to cloud and all emergency services using GSM module
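The distance step can be illustrated with the standard Haversine formula. In the sketch below, R is assumed to be the mean Earth radius (6371 km) and H the central angle between the two points, since the listing does not define either symbol; the function names and the example coordinates are hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0  # assumed meaning of R in the listing


def haversine_km(slat: float, slon: float, elat: float, elon: float) -> float:
    """Great-circle distance in km between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(slat), math.radians(elat)
    dis_lat = math.radians(elat - slat)
    dis_lon = math.radians(elon - slon)
    a = (math.sin(dis_lat / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dis_lon / 2) ** 2)
    # Clamp to 1.0 to guard against floating-point overshoot.
    h = 2 * math.asin(math.sqrt(min(1.0, a)))  # assumed meaning of H
    return EARTH_RADIUS_KM * h


def nearest(accident: tuple, places: dict) -> str:
    """Return the name of the place closest to the accident location.

    places maps a name to a (latitude, longitude) pair.
    """
    return min(places,
               key=lambda name: haversine_km(*accident, *places[name]))


# Example with made-up coordinates for two facilities.
hospitals = {"Hospital A": (18.53, 73.86), "Hospital B": (19.07, 72.87)}
closest = nearest((18.52, 73.85), hospitals)
```

The same `nearest` call can be repeated with the police station table to pick the second recipient of the alert.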

C. Random Forest Tree

1. Divide the dataset into training and test sets.

2. Set the number of trees m.

2.1 Build m trees (R1, R2, R3, ..., Rm).

2.2 Train the model on the training data based on the Gini index and entropy.

2.3 Without pruning, grow each tree to its maximum possible depth.

3. To evaluate the model performance, pass a new instance X.

3.1 For the new instance X, predict its class using all m trees (R1, R2, R3, ..., Rm).

3.2 Use a voting approach to make the final class prediction for X.
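Two building blocks of the procedure above, the split criteria and the final vote, can be sketched in a few lines of Python. This is only an illustration of the Gini index, entropy, and majority voting; it does not build the trees themselves, and the function names are chosen for this example.

```python
import math
from collections import Counter


def gini_impurity(labels: list) -> float:
    """Gini index of a set of class labels: 1 - sum(p_i ** 2)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def entropy(labels: list) -> float:
    """Shannon entropy in bits: -sum(p_i * log2(p_i))."""
    n = len(labels)
    if n == 0:
        return 0.0
    return -sum((c / n) * math.log2(c / n)
                for c in Counter(labels).values())


def majority_vote(predictions: list):
    """Class predicted by most trees; ties go to the label seen first."""
    return Counter(predictions).most_common(1)[0][0]


# Votes from three hypothetical trees for one instance X.
final_class = majority_vote(["accident", "no_accident", "accident"])
```

A perfectly mixed two-class node has the highest impurity under both criteria, which is why a split that separates accident from non-accident samples is preferred when a tree is grown.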

D. Deep Learning for Accident Detection

Input: Training dataset, a collection of labelled accident and non-accident images, Data = {(A^1, Y^1), (A^2, Y^2), (A^3, Y^3), ..., (A^n, Y^n)}

Parameter: Initialize all the parameters, such as weights W, learning rate σ, batch size, threshold ∅, and max_iteration.

Output: Accident, No_Accident

- Perform pre-processing, such as augmentation and rescaling, on the input dataset Data

- Customize the pre-trained model by removing the top layers

- First add a global average pooling layer (GAPL) to flatten the network and then add convolutional layers (CL)

- Configure five dense layers after the CL, where each layer has 2048 neurons

- To avoid overfitting, drop out 20% of the neurons from each dense layer

- Perform feature extraction from the dense layer and store all extracted features in the feature vector D_feature

- The size of D_feature will be 1 x 2048 for one image, so for n images it will be n x 2048

- Create a data frame of size n x 2048: D_feature = {(F^1, Y^1), (F^2, Y^2), (F^3, Y^3), ..., (F^n, Y^n)}

- Initialize the random forest tree (RFT) with k trees (i.e., k = 50)

- Train the RFT model on the extracted features D_feature

- Compile the model with the binary cross-entropy loss function and the SGD optimizer

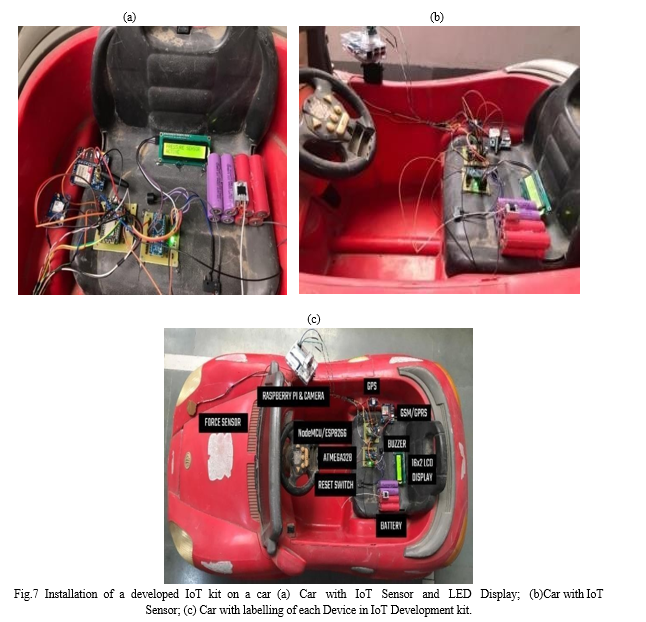

VII. EXPERIMENTAL SETUP

The proposed ADRS has two separate modules: accident detection and rescue. Because the effectiveness of the suggested ADRS cannot be assessed in an actual vehicle, we attached the built IoT kit to a toy car. Every component, including the sensors, alarm, controller, etc., is mounted on the car. The GPS module is used to determine the accident location and vehicle speed. Figure 7 shows the configuration of the equipment in the car.

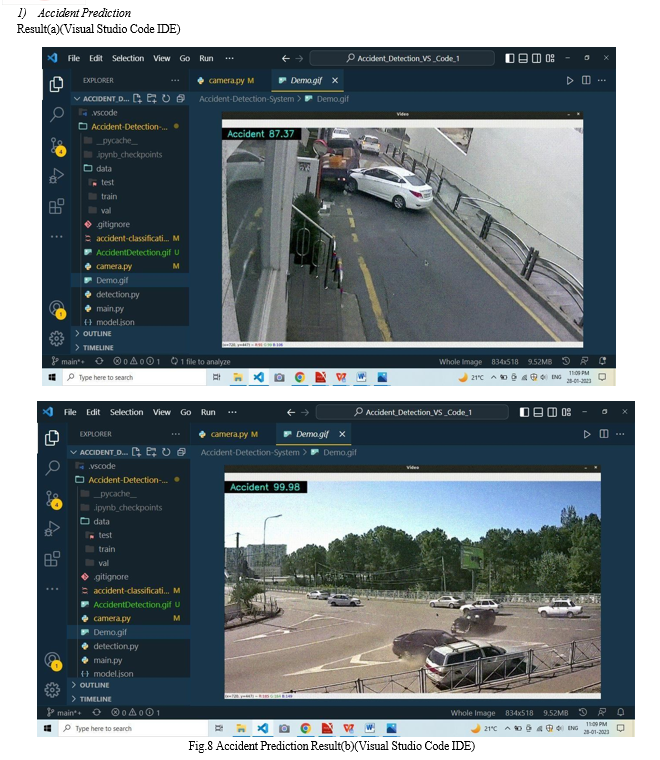

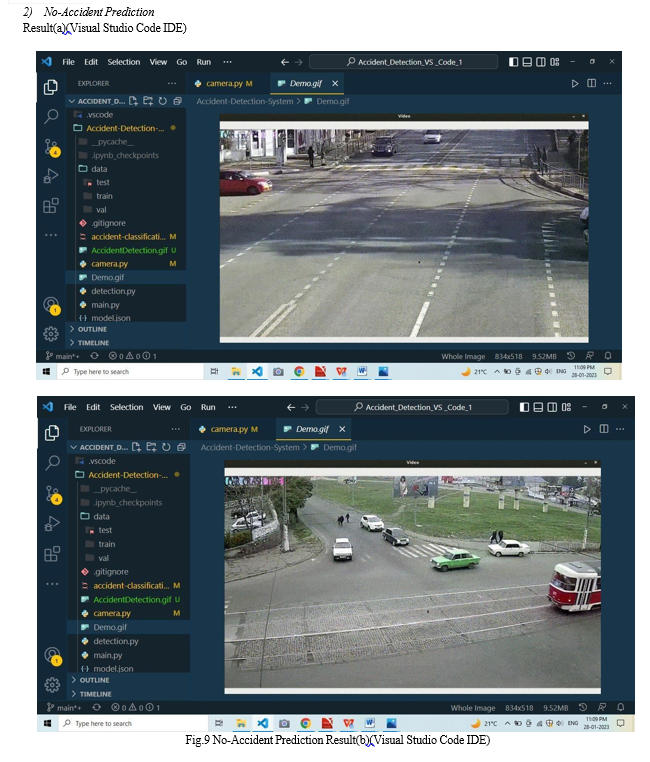

A. Software Simulation Result

Using Python's OpenCV library, images were loaded and the model was trained to classify them as accident or non-accident. As a result, any input we feed in, whether a video or an image, is categorised as accident or non-accident.

Conclusion

Because roads in the smart city are in excellent condition, drivers operate their vehicles at high speeds, and more accidents occur as a result. Despite the introduction of numerous accident detection and prevention methods, many fatalities continue to occur. At least part of the problem is made worse by poor automatic accident recognition and inefficient warning and emergency service responses. An IoT kit is used in the first phase to detect the accident, and a deep learning-based model is used in the second phase to validate the output of the IoT module and carry out the rescue operation. The IoT module, which measures the impact on the car using a force sensor and the vehicle's speed using a GPS module, detects an accident and sends all relevant data to the cloud. Pre-trained models, specifically ResNet-50 and InceptionResNetV2, are employed in the second phase to reduce the false detection rate and activate the rescue module. If the deep learning module detects an accident, the rescue module is activated, and information is sent to the nearby police station, hospitals, and family members. The suggested model can help reduce the number of fatalities caused by the absence of emergency services at the scene of the accident. Owing to the integration of IoT and AI, the model showed zero false positives during training and very few false positives during testing. The security component is not taken into account in the suggested model; we plan to address this in future work. The suggested model could also include driver alert systems, such as a drowsiness detection module.

References

[1] Nikhilesh Pathak, Rajeev Kumar Gupta, Yatendra Sahu, Ashutosh Sharma, Mehedi Masud, Mohammad Bas. AI Enabled Accident Detection and Alert System Using IoT and Deep Learning for Smart Cities. Sustainability (MDPI), 2022.

[2] Artificial Intelligence for Accident Detection and Response. ResearchGate, 2021.

[3] Traffic Accident Detection by using Machine Learning Methods. ResearchGate, 2020.

[4] Accident Detection using Deep Learning: A Brief Survey. IJECCE, 2020.

[5] Accident Detection and Warning System. IEEE, 2021.

[6] World Health Organization. Global Status Report on Road Safety; World Health Organization: Geneva, Switzerland, 2018.

[7] Statistics. Available online: https://morth.nic.in/road-accident-in-india (accessed on 13 June 2022).

[8] Yan, L.; Cengiz, K.; Sharma, A. An improved image processing algorithm for automatic defect inspection in TFT-LCD TCON. Nonlinear Eng. 2021, 10, 293–303.

[9] Yan, L.; Cengiz, K.; Sharma, A. An improved image processing algorithm for automatic defect inspection in TFT-LCD TCON. Nonlinear Eng. 2021, 10, 293–303.

[10] Zhang, X.; Rane, K.P.; Kakaravada, I.; Shabaz, M. Research on vibration monitoring and fault diagnosis of rotating machinery based on internet of things technology. Nonlinear Eng. 2021, 10, 245–254.

[11] Guo, Z.; Xiao, Z. Research on online calibration of lidar and camera for intelligent connected vehicles based on depth-edge matching. Nonlinear Eng. 2021, 10, 469–476.

[12] Xie, H.; Wang, Y.; Gao, Z.; Ganthia, B.P.; Truong, C.V. Research on frequency parameter detection of frequency shifted track circuit based on nonlinear algorithm. Nonlinear Eng. 2021, 10, 592–599.

[13] Liu, C.; Lin, M.; Rauf, H.L.; Shareef, S.S. Parameter simulation of multidimensional urban landscape design based on nonlinear theory. Nonlinear Eng. 2021, 10, 583–591.

Copyright

Copyright © 2023 Adesh More, Mitali Mahajan, Hemant Gholap. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET48904

Publish Date : 2023-01-29

ISSN : 2321-9653

Publisher Name : IJRASET
