IJRASET Journal for Research in Applied Science and Engineering Technology


A Bird's Eye View of Human Facial Expression Recognition Techniques

Authors: Thaneshwari Sahu, Shagufta Farzana

DOI Link: https://doi.org/10.22214/ijraset.2022.44700


Abstract

Human facial expression recognition is one of the most remarkable and promising tasks in social interaction. Facial expressions are a natural and direct means for human beings to communicate their emotions and intentions, and they are key elements of non-verbal communication. This paper reviews facial expression recognition (FER) methods, which involve three main steps: preprocessing, face detection, and emotion classification. It explains the different kinds of FER techniques and their main contributions. Much work has already been done on facial expression recognition, and this paper concentrates on recognition work that uses various methods. Facial expression recognition is an important step toward making computers more human-like, so that machines able to interact intelligently with humans can be built. Many methods have been applied to different aspects of the problem; this paper mainly focuses on feature-based facial expression recognition and also covers classification techniques.


I. INTRODUCTION

Facial expression recognition has attracted the attention of researchers from many fields. Research on emotion analysis covers facial displays, voice, body movement, physiological signals, and so on. Facial expressions are primary indicators of human emotions. In a large share of cases (about 54% [12]), emotions are communicated non-verbally through facial expressions, and these expressions can be examined as strong evidence of whether a person is telling the truth [1].

An individual's expression can be analyzed to decide whether that person is fit for a delicate task. Computing systems can also be equipped with facial expression recognition (FER) capability to improve usability. Social networking applications may include features that suggest the user's mood based on the expression in an uploaded photo. Some phone cameras already capture a photo automatically when they recognize a particular facial expression, such as a smile. As more and more tasks become automated, an important goal is to enable machines to recognize facial expressions as readily as a person can. The following figure represents the three main stages of facial expression recognition.

People recognize facial expressions easily, but for a computer the task is very difficult. Once an image is acquired, the system must first detect and then localize the faces in it. To solve this problem, the system isolates the face from the image background, extracts features, and recognizes the facial expression. The system takes the input image, analyzes the expression, and assigns it to one of several expression categories. The first step is face detection, which determines whether a human face is present in the target image. Once faces are detected and located, the next step is to extract the individual faces. A feature vector is then formed from the fixed feature points (landmarks) of each face. The extracted features are passed to the final step, which recognizes the expression conveyed by the face.

Automated systems for recognizing facial expressions rely on a database of faces. Images are taken from the database, and their feature points are extracted and stored. When a new image is to be tested, the face in that image is located and its features are extracted; these features are compared with those already stored in the database, and the expression of the input face is classified according to the comparison results.
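The database comparison described above amounts to nearest-neighbour matching. The following minimal sketch assumes that each stored image has already been reduced to a fixed-length feature vector and that Euclidean distance is the comparison measure. The names `enroll` and `match_expression` and the toy vectors are hypothetical illustrations, not taken from any surveyed system.

```python
import math

# Hypothetical in-memory database: each entry pairs a stored feature vector
# (e.g., flattened facial feature-point coordinates) with its expression label.
Database = list[tuple[list[float], str]]


def euclidean(a: list[float], b: list[float]) -> float:
    """Euclidean distance between two equal-length feature vectors."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def enroll(db: Database, features: list[float], label: str) -> None:
    """Store the feature points extracted from a labelled database image."""
    db.append((list(features), label))


def match_expression(db: Database, query: list[float]) -> str:
    """Label a new face with the expression of the closest stored entry."""
    if not db:
        raise ValueError("database is empty")
    _, label = min(db, key=lambda entry: euclidean(entry[0], query))
    return label


db: Database = []
enroll(db, [0.0, 0.0, 1.0], "neutral")
enroll(db, [1.0, 0.5, 0.2], "happiness")
print(match_expression(db, [0.9, 0.4, 0.3]))  # happiness
```

Real systems usually normalize the feature points (for scale and head pose) before comparing them. Without that step, raw distances would mostly reflect face size and position.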

II. FRAMEWORK

Emotion analysis based on facial expressions has been actively explored in computer vision research. With the rise of machine learning (ML) [2] and advances in neural network learning [30], building intelligent systems that can accurately recognize emotions has become a closer reality. The complexity of emotion analysis through computer vision has grown with progress in fields directly related to emotion analysis, such as brain research and neuroscience.

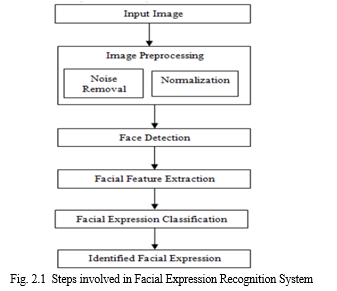

Generally, a facial emotion recognition system involves the following steps: image acquisition, image preprocessing, feature extraction, and emotion classification. Fig. 2.1 shows the stages involved in facial expression recognition:
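The four stages can be viewed as a chain of functions, each consuming the previous stage's output. The sketch below is a minimal illustration that assumes a grayscale image stored as nested lists. The stage implementations are deliberately trivial, hypothetical stand-ins so the chain runs end to end; a real system would plug in a face detector, a feature extractor, and a trained classifier.

```python
from typing import Callable

Image = list[list[float]]  # grayscale image stored as rows of pixel intensities


def run_fer_pipeline(
    image: Image,
    preprocess: Callable[[Image], Image],
    extract: Callable[[Image], list[float]],
    classify: Callable[[list[float]], str],
) -> str:
    """Chain the stages that follow image acquisition."""
    face = preprocess(image)     # e.g., locate, crop and normalize the face
    features = extract(face)     # e.g., Gabor, SIFT/SURF or LDA-based features
    return classify(features)    # e.g., KNN, SVM or random forest


# Toy stand-ins (hypothetical) so the sketch runs end to end.
def scale_to_unit(img: Image) -> Image:
    peak = max(max(row) for row in img) or 1.0
    return [[p / peak for p in row] for row in img]


def row_means(img: Image) -> list[float]:
    return [sum(row) / len(row) for row in img]


def brightness_rule(features: list[float]) -> str:
    # Placeholder decision rule standing in for a trained classifier.
    return "happiness" if features[-1] > features[0] else "neutral"


print(run_fer_pipeline([[10, 20], [200, 250]], scale_to_unit, row_means, brightness_rule))
```

Passing the stages in as parameters keeps each one replaceable. Different feature extractors or classifiers can then be compared on the same inputs, which is how most of the surveyed studies structure their experiments.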

A. Input Image

For facial expression classification, images are taken from existing datasets or supplied directly as input. One popular dataset of such images is JAFFE (Japanese Female Facial Expression). The input image is then used to locate the face within it.
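When images come from a dataset such as JAFFE, the expression label usually has to be recovered from the file name. The JAFFE files are commonly described as following a pattern like `KA.AN1.39.tiff`: subject initials, then a two-letter expression code with an index, then an image number. The sketch below assumes that convention and the code-to-label mapping shown; verify both against your own copy of the data.

```python
import re

# Assumed JAFFE naming scheme: <subject>.<expression code><index>.<image id>.tiff
EXPRESSION_CODES = {
    "AN": "anger", "DI": "disgust", "FE": "fear", "HA": "happiness",
    "NE": "neutral", "SA": "sadness", "SU": "surprise",
}
_PATTERN = re.compile(r"^([A-Z]{2})\.([A-Z]{2})(\d+)\.(\d+)\.tiff?$", re.IGNORECASE)


def parse_jaffe_name(filename: str) -> tuple[str, str]:
    """Return (subject, expression label) parsed from a JAFFE-style file name."""
    m = _PATTERN.match(filename)
    if m is None:
        raise ValueError(f"unrecognized file name: {filename!r}")
    subject, code = m.group(1).upper(), m.group(2).upper()
    if code not in EXPRESSION_CODES:
        raise ValueError(f"unknown expression code: {code!r}")
    return subject, EXPRESSION_CODES[code]


print(parse_jaffe_name("KA.AN1.39.tiff"))  # ('KA', 'anger')
```

Keeping the subject initials alongside the label makes it easy to build subject-independent train/test splits. Random splits would let the same person appear in both sets.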

B. Image Preprocessing

Image preprocessing is an important step in expression classification, because only the central part of the face (for example, the nose, mouth, and eyes) is needed to recognize the expression shown in the image. Therefore, the face is localized in the image using various techniques and algorithms.
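Two common preprocessing operations are cropping the localized face and normalizing its contrast. The minimal sketch below assumes an 8-bit grayscale image stored as nested lists and a bounding box that some face detector has already supplied. It uses histogram equalization as one typical normalization choice. The text does not prescribe a specific method.

```python
def crop(img: list[list[int]], top: int, left: int, height: int, width: int) -> list[list[int]]:
    """Cut out the face region given a bounding box (e.g., from a face detector)."""
    return [row[left:left + width] for row in img[top:top + height]]


def equalize_histogram(img: list[list[int]], levels: int = 256) -> list[list[int]]:
    """Spread intensities over the full range using the cumulative histogram.

    Pixel values are assumed to be integers in [0, levels - 1].
    """
    flat = [p for row in img for p in row]
    hist = [0] * levels
    for p in flat:
        hist[p] += 1
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)
    total = len(flat)
    if total == cdf_min:  # constant image: nothing to equalize
        return [row[:] for row in img]
    lut = [round((c - cdf_min) / (total - cdf_min) * (levels - 1)) for c in cdf]
    return [[lut[p] for p in row] for row in img]


image = [[0, 0, 0], [0, 50, 60], [0, 70, 80]]
face = crop(image, top=1, left=1, height=2, width=2)
print(equalize_histogram(face))  # [[0, 85], [170, 255]]
```

Equalizing after cropping ensures that the background does not influence the intensity mapping applied to the face.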

C. Facial Feature Extraction

Extracting facial feature points is essential for facial expression recognition. Several techniques exist for extracting feature points, including Gabor wavelets [4], Scale-Invariant Feature Transform (SIFT), Speeded-Up Robust Features (SURF), Linear Discriminant Analysis (LDA) [3], and many others. Gabor wavelets are robust against photometric disturbances, but they produce feature vectors of high dimensionality. SURF keeps only the few strongest features.
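The dimensionality issue with Gabor features becomes clear from how a Gabor filter bank is built. A commonly used real-valued 2-D Gabor function is $g(x, y) = \exp\!\left(-\frac{x'^2 + \gamma^2 y'^2}{2\sigma^2}\right)\cos\!\left(\frac{2\pi x'}{\lambda} + \psi\right)$, with rotated coordinates $x' = x\cos\theta + y\sin\theta$ and $y' = -x\sin\theta + y\cos\theta$. The sketch below samples that function for several orientations $\theta$ and wavelengths $\lambda$. All parameter values are illustrative assumptions, not the settings used in [4].

```python
import math


def gabor_kernel(size: int, sigma: float, theta: float, lambd: float,
                 gamma: float = 0.5, psi: float = 0.0) -> list[list[float]]:
    """Real part of a 2-D Gabor filter sampled on a size x size grid (size odd)."""
    if size % 2 == 0:
        raise ValueError("size must be odd so the kernel has a centre pixel")
    half = size // 2
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            # Rotate coordinates so the filter is tuned to orientation theta.
            x_r = x * math.cos(theta) + y * math.sin(theta)
            y_r = -x * math.sin(theta) + y * math.cos(theta)
            envelope = math.exp(-(x_r ** 2 + (gamma * y_r) ** 2) / (2 * sigma ** 2))
            carrier = math.cos(2 * math.pi * x_r / lambd + psi)
            row.append(envelope * carrier)
        kernel.append(row)
    return kernel


def filter_response(patch: list[list[float]], kernel: list[list[float]]) -> float:
    """Correlate a same-sized image patch with the kernel, giving one feature value."""
    return sum(p * k for prow, krow in zip(patch, kernel) for p, k in zip(prow, krow))


# A small bank: 4 orientations x 2 wavelengths gives 8 responses per patch.
bank = [gabor_kernel(7, 2.0, t * math.pi / 4, lam) for t in range(4) for lam in (4.0, 8.0)]
patch = [[1.0] * 7 for _ in range(7)]
features = [filter_response(patch, k) for k in bank]
print(len(features))  # 8
```

Every location where the bank is applied contributes one value per filter. With typical banks of 5 scales and 8 orientations evaluated over a whole face image, the feature vector quickly reaches tens of thousands of dimensions.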

D. Facial Expression Classification

Various classifiers are used to categorize expressions. The most commonly used are Random Forest (RF), k-Nearest Neighbor (KNN), and Support Vector Machine (SVM) classifiers. The purpose of this step is to assign each face to one of the expression classes: disgust, happiness, sadness, fear, anger, surprise, and neutral.
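Of the classifiers named above, KNN is the simplest to write down. The minimal sketch below assumes that feature vectors have already been extracted and that the labels come from the seven classes listed. The tie-breaking rule (prefer the tied label whose member lies closest) is one reasonable choice among several.

```python
import math
from collections import Counter

# The seven expression classes named in the text.
EXPRESSIONS = {"anger", "disgust", "fear", "happiness", "sadness", "surprise", "neutral"}


def knn_predict(train: list[tuple[list[float], str]], query: list[float], k: int = 3) -> str:
    """Majority vote among the k training samples nearest to `query`.

    Ties are broken in favour of the tied label whose member is nearest.
    """
    if not 1 <= k <= len(train):
        raise ValueError("k must be between 1 and the number of training samples")
    for _, label in train:
        if label not in EXPRESSIONS:
            raise ValueError(f"unexpected label: {label!r}")
    ranked = sorted(train, key=lambda sample: math.dist(sample[0], query))[:k]
    votes = Counter(label for _, label in ranked)
    best = max(votes.values())
    # `ranked` is sorted by distance, so the first tied label met is the nearest one.
    return next(label for _, label in ranked if votes[label] == best)


train = [
    ([0.0, 0.0], "neutral"), ([0.0, 1.0], "neutral"),
    ([5.0, 5.0], "happiness"), ([5.0, 6.0], "happiness"), ([6.0, 5.0], "happiness"),
]
print(knn_predict(train, [0.0, 0.4], k=3))  # neutral
```

Because KNN stores all training samples and compares against each one, prediction cost grows with dataset size. This is one reason the surveyed works pair it with aggressive feature selection.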

III. LITERATURE SURVEY

Table I compares the methods used and investigated for facial expression recognition, to give a better understanding of the accuracy and complexity of the algorithms and methods used.

Mahmood M. R. et al. [5] present a performance evaluation of a set of supervised classifiers for facial expression recognition, based on a minimal set of features selected by the chi-square test. These features are the most significant and effective ones for determining the result. The six top-ranked features are applied to six classifiers (random forest, radial basis function (RBF), multi-layer perceptron (MLP), support vector machine (SVM), decision tree, and k-nearest neighbor (KNN)) to determine which is most reliable when the minimum number of features is used. This is done by analyzing and evaluating classification performance. CK+ is used as the dataset. Random forest, with an overall accuracy of 94.23%, is identified as the most reliable classifier.
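The chi-square ranking step can be sketched as follows. This is one common formulation for non-negative features (as used by several machine-learning libraries), not necessarily the exact variant used in [5]. For each feature, the per-class sum of its values is the observed count. The expected count is the feature's total multiplied by each class's share of the samples. The data below are toy values, and `k` is set to 2 instead of the 6 used in [5].

```python
def chi2_scores(X: list[list[float]], y: list[str]) -> list[float]:
    """Chi-square statistic of each non-negative feature against the class labels.

    For feature j: observed O_c = sum of X[i][j] over samples of class c,
    expected E_c = (total of feature j) * (fraction of samples in class c).
    """
    n, d = len(X), len(X[0])
    classes = sorted(set(y))
    freq = {c: y.count(c) / n for c in classes}
    scores = []
    for j in range(d):
        total = sum(row[j] for row in X)
        score = 0.0
        for c in classes:
            observed = sum(row[j] for row, label in zip(X, y) if label == c)
            expected = total * freq[c]
            if expected > 0:
                score += (observed - expected) ** 2 / expected
        scores.append(score)
    return scores


def select_top_k(X: list[list[float]], y: list[str], k: int) -> list[int]:
    """Indices of the k highest-scoring features, best first."""
    scores = chi2_scores(X, y)
    return sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)[:k]


X = [[1, 5, 0], [1, 5, 0], [1, 0, 3], [1, 0, 3]]
y = ["happiness", "happiness", "sadness", "sadness"]
print(select_top_k(X, y, 2))  # [1, 2]
```

Feature 0 has the same value in every sample and therefore scores zero. Features 1 and 2 each appear in only one class and are kept.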

Abdulrazaq M. B. et al. [6] examine the accuracy of six classifiers using the Relief-F feature selection method, relying on the minimum number of features. The classifiers studied are Support Vector Machine, K-Nearest Neighbor, Multi-Layer Perceptron, Radial Basis Function, Random Forest, and Decision Tree. The experiments show that K-Nearest Neighbor is the most reliable classifier, with an overall accuracy of 94.92% on the CK+ dataset.
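Relief-F builds on the original Relief idea. A feature is useful if it differs between a sample and its nearest neighbour of another class (the "miss"). The same feature should be similar between the sample and its nearest neighbour of the same class (the "hit"). The sketch below is the basic single-neighbour Relief, a simplification of Relief-F, which averages over several hits and per-class misses. It assumes the features are already scaled to [0, 1]. The toy data are hypothetical.

```python
def relief_weights(X: list[list[float]], y: list[str]) -> list[float]:
    """Basic Relief feature weights using one nearest hit and one nearest miss.

    Assumes every feature is already scaled to [0, 1]. Every sample is visited
    once (instead of random sampling) so the result is deterministic.
    """
    n, d = len(X), len(X[0])
    weights = [0.0] * d

    def dist(a: list[float], b: list[float]) -> float:
        return sum(abs(p - q) for p, q in zip(a, b))  # Manhattan distance

    for i in range(n):
        hits = [j for j in range(n) if j != i and y[j] == y[i]]
        misses = [j for j in range(n) if y[j] != y[i]]
        if not hits or not misses:
            continue  # Relief needs both a same-class and an other-class neighbour
        hit = min(hits, key=lambda j: dist(X[i], X[j]))
        miss = min(misses, key=lambda j: dist(X[i], X[j]))
        for f in range(d):
            # Reward features that separate the miss, penalize those that separate the hit.
            weights[f] += (abs(X[i][f] - X[miss][f]) - abs(X[i][f] - X[hit][f])) / n
    return weights


X = [[0.0, 0.2], [0.1, 0.9], [1.0, 0.3], [0.9, 0.8]]
y = ["neutral", "neutral", "surprise", "surprise"]
w = relief_weights(X, y)
print([round(v, 3) for v in w])
```

Here feature 0 separates the two classes and receives a positive weight of about 0.8. Feature 1 is noise and receives a negative weight of about -0.5. Keeping only the top-weighted features gives the reduced feature set that is then passed to the classifiers.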

Wu M. et al. [7] propose a method that can handle situations where the contributions of each modality's data to emotion recognition are highly imbalanced. Local binary patterns from three orthogonal planes (LBP-TOP) and spectrograms are first used to extract low-level dynamic emotion features, so that the spatio-temporal information of these modalities can be captured. To reveal more discriminative features, two deep convolutional neural networks are built to extract high-level emotion semantic features. In addition, a two-stage fuzzy fusion strategy is developed by integrating canonical correlation analysis and a fuzzy broad learning system, to account for the correlation and differences between the modal features and to handle the ambiguity of emotional state information. Experimental results on benchmark datasets show that the accuracy of the proposed method is higher than that of existing methods (such as hybrid deep methods and rule-based and machine learning methods) on the eNTERFACE'05, SAVEE, and AFEW databases.

Dino H. I. et al. [8] present a comparative approach to FER based on three feature selection methods (correlation, gain ratio, and information gain) for determining the most distinguishing features of facial images. Four classification algorithms are used: multi-layer perceptron, Naïve Bayes, decision tree, and k-nearest neighbor (KNN). These approaches are used to perform expression recognition and to compare their relative performance. The main aim of the method is to determine the best approach with the minimum acceptable number of features by analyzing and comparing their performance. The method was applied to the CK+ dataset. The results show that KNN is the best classifier, with 91% accuracy using only 30 features.
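Two of the three selection criteria, information gain and gain ratio, can be sketched for discrete (already binned) feature values. The sketch assumes categorical features. The feature names and values (`mouth_corner`, `noisy`) are hypothetical examples, and the correlation criterion is omitted.

```python
import math
from collections import Counter


def entropy(values: list) -> float:
    """Shannon entropy (in bits) of a list of discrete values."""
    n = len(values)
    return -sum((c / n) * math.log2(c / n) for c in Counter(values).values())


def information_gain(feature: list, labels: list) -> float:
    """H(Y) - H(Y | X) for a discrete feature column and its class labels."""
    n = len(labels)
    conditional = 0.0
    for value, count in Counter(feature).items():
        subset = [lab for f, lab in zip(feature, labels) if f == value]
        conditional += (count / n) * entropy(subset)
    return entropy(labels) - conditional


def gain_ratio(feature: list, labels: list) -> float:
    """Information gain normalized by the feature's own entropy (split information)."""
    split_info = entropy(feature)
    return information_gain(feature, labels) / split_info if split_info > 0 else 0.0


labels = ["happiness", "happiness", "sadness", "sadness"]
mouth_corner = ["up", "up", "down", "down"]   # perfectly informative feature
noisy = ["a", "b", "a", "b"]                  # unrelated to the label
print(information_gain(mouth_corner, labels))  # 1.0
print(information_gain(noisy, labels))         # 0.0
```

Gain ratio divides by the split information to penalize features with many distinct values. Without that correction, such features would otherwise look informative just by fragmenting the data.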

Le T. T. Q. et al. [9] present a compact framework in which a rank pooling concept known as the dynamic image is used as a descriptor to extract informative features from specific regions of interest. A convolutional neural network (CNN) is then applied to the selected dynamic images to recognize micro-expressions. In particular, a facial motion magnification technique is applied to the input sequences to enhance the magnitude of facial movements in the data. Rank pooling is then performed to obtain dynamic images. Only a fixed number of localized facial regions are extracted from the dynamic images, based on the observed dominant muscular changes. CNN models are fitted to the final feature representation for the micro-expression classification task. The framework is simpler than those of other studies, yet the experimental results obtained throughout the study demonstrate its effectiveness. The evaluation is carried out on three state-of-the-art databases: CASMEII, SMIC, and SAMM.
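A dynamic image collapses a frame sequence into a single image whose pixels encode how the face changes over time. The sketch below uses a simplified approximate rank-pooling weighting, $\alpha_t = 2t - T - 1$ for frames $t = 1, \dots, T$. It then forms the weighted sum $\sum_t \alpha_t I_t$. This linear weighting is an assumption for illustration; [9] may use a different rank-pooling variant. Because $\sum_t \alpha_t = 0$, pixels that never change cancel out.

```python
def dynamic_image(frames: list[list[list[float]]]) -> list[list[float]]:
    """Collapse equally sized grayscale frames into one dynamic image.

    Uses the simplified approximate rank-pooling weights alpha_t = 2t - T - 1
    (t = 1..T): later frames get positive weight, earlier frames negative weight.
    """
    T = len(frames)
    if T < 2:
        raise ValueError("need at least two frames")
    h, w = len(frames[0]), len(frames[0][0])
    out = [[0.0] * w for _ in range(h)]
    for t, frame in enumerate(frames, start=1):
        alpha = 2 * t - T - 1
        for r in range(h):
            for c in range(w):
                out[r][c] += alpha * frame[r][c]
    return out


# Pixel 0 brightens over three frames; pixel 1 stays constant.
frames = [[[0, 5]], [[1, 5]], [[2, 5]]]
print(dynamic_image(frames))  # [[4.0, 0.0]]
```

The static pixel maps to zero and the changing pixel to a non-zero value. This is why dynamic images highlight the subtle, short-lived motions that characterize micro-expressions.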

Liu D. et al. [10] present a metric learning framework with a Siamese cascaded structure that learns fine-grained distinctions between emotions in video-based tasks. The authors also develop a pairwise sampling strategy for this metric learning framework. Moreover, they propose a novel action-unit attention mechanism tailored to the facial expression recognition task, which extracts spatial contexts from the emotion regions.

This mechanism works as a sparse self-attention pattern that allows a single feature from any position to perceive the features of the action unit (AU) regions (eyes, nose, mouth, and eyebrows). In addition, an attentive pooling module is designed to select informative items across the video sequence by capturing their temporal importance. The authors conduct experiments on four widely used datasets (CK+, Oulu-CASIA, AffectNet, and MMI) and also test on the in-the-wild dataset AFEW to further examine the robustness of their method. The results show that the approach outperforms current state-of-the-art methods. The authors also provide an ablation study of each component.
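The idea behind attentive temporal pooling is to weight each frame by its importance and then average. In the sketch below, a frame's score is a dot product with a vector `w`, which stands in (hypothetically) for learned parameters. A softmax turns the scores into weights. This is a generic illustration of attention pooling, not the exact module used in SAANet [10].

```python
import math


def attentive_pool(frame_features: list[list[float]],
                   w: list[float]) -> tuple[list[float], list[float]]:
    """Pool per-frame feature vectors into one clip-level vector.

    Each frame t gets a score w . f_t; a softmax turns the scores into weights,
    and the output is the weighted average of the frame features.
    """
    scores = [sum(wi * fi for wi, fi in zip(w, f)) for f in frame_features]
    top = max(scores)  # subtracting the max keeps exp() from overflowing
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(frame_features[0])
    pooled = [sum(a * f[k] for a, f in zip(weights, frame_features)) for k in range(dim)]
    return pooled, weights


frames = [[1.0, 0.0], [0.0, 1.0], [0.2, 0.1]]
pooled, weights = attentive_pool(frames, w=[3.0, 0.0])
print([round(a, 3) for a in weights])
```

With `w` favouring the first feature dimension, the first frame receives most of the weight. With `w` set to zeros, the module reduces to plain average pooling.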

Chen W. et al. [11] propose an effective framework to address the challenge of dynamic facial expression recognition. The authors develop a C3D-based network architecture, 3D Inception-ResNet, to extract spatial-temporal features from dynamic facial expression image sequences. A Spatial-Temporal and Channel Attention Module (STCAM) is introduced to explicitly exploit the global spatial-temporal and channel-wise correlations in the extracted features. Specifically, STCAM computes a channel-wise and a spatial-temporal attention map to enhance the features along the corresponding dimensions, yielding more representative features. The authors evaluate their method on three popular dynamic facial expression recognition datasets: MMI, Oulu-CASIA, and CK+. Experimental results show that the method achieves better or comparable performance relative to state-of-the-art approaches.
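Channel attention, one half of the attention in modules such as STCAM, can be sketched in a simplified squeeze-and-excitation style. Each channel is first summarized by its global average. Then a gate in (0, 1) is computed from these summaries, and the channel is rescaled by its gate. The single linear layer and the `weights`/`bias` values below are hypothetical stand-ins for learned parameters. This is not the STCAM design itself, which also includes spatial-temporal attention.

```python
import math


def channel_attention(feature_maps: list[list[list[float]]],
                      weights: list[list[float]],
                      bias: list[float]) -> tuple[list[list[list[float]]], list[float]]:
    """Rescale each channel of a C x H x W feature map by a gate in (0, 1).

    1. Squeeze: global-average each channel to one number.
    2. Excite: gate_c = sigmoid(sum_k weights[c][k] * squeezed[k] + bias[c]).
    3. Multiply every value in channel c by gate_c.
    """
    squeezed = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_maps]
    gates = []
    for c in range(len(feature_maps)):
        z = sum(wk * s for wk, s in zip(weights[c], squeezed)) + bias[c]
        gates.append(1.0 / (1.0 + math.exp(-z)))
    scaled = [[[v * g for v in row] for row in ch] for ch, g in zip(feature_maps, gates)]
    return scaled, gates


fmap = [[[1.0, 3.0]], [[0.0, 0.0]]]  # two channels, each 1 x 2
scaled, gates = channel_attention(fmap, weights=[[1.0, 0.0], [0.0, 1.0]], bias=[0.0, 0.0])
print([round(g, 4) for g in gates])
```

The strongly activated first channel receives a larger gate (sigmoid(2), about 0.88) than the empty second channel (0.5). With learned weights, this lets the network emphasize the feature channels most relevant to an expression.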

Perveen N. et al. [12] propose a dynamic-kernel-based representation for facial expressions that captures facial movements using local spatio-temporal representations in a large universal Gaussian mixture model (uGMM). These dynamic kernels preserve local similarities while handling global context changes for the same expression, by using the statistics of the uGMM. The authors demonstrate the effectiveness of the dynamic kernel representation using three different dynamic kernels (explicit mapping based, probability-based, and matching-based) on three standard facial expression datasets: BP4D, MMI, and AFEW. Their evaluations show that probability-based kernels are the most discriminative among the dynamic kernels. In terms of computational complexity, however, intermediate matching kernels are more efficient than the other two representations.

Wen G. et al. [13] propose a new facial expression recognition method that considers several aspects. First, humans find some expressions easy to recognize and others hard. Inspired by this intuition, a new loss function is introduced to enlarge the distance between samples from easily confused classes. Second, human learning is divided into several stages, and each stage has a different learning objective. Accordingly, dynamic objectives learning is proposed, in which the objective at each stage is defined by the corresponding loss function. To better realize these ideas, a new deep neural network for facial expression recognition is designed. It integrates a covariance pooling layer and residual network units into a deep convolutional neural network to better support dynamic objectives learning. Experimental results on common datasets confirm the effectiveness and superior performance of the method.
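Covariance pooling replaces the usual average of CNN features with their second-order statistics. It treats the feature vector at each spatial position as one sample and computes the d x d covariance matrix across positions. The minimal sketch below assumes the features are given as a list of N vectors of length d and uses 1/N normalization, which is one common choice. It does not reproduce the full layer of [13], which may add further matrix normalization.

```python
def covariance_pool(features: list[list[float]]) -> list[list[float]]:
    """Second-order pooling: d x d covariance of N local feature vectors.

    features: list of N vectors (e.g., one per spatial position of a CNN
    feature map), each of length d. Uses 1/N (population) normalization.
    """
    n = len(features)
    d = len(features[0])
    mean = [sum(f[k] for f in features) / n for k in range(d)]
    centered = [[f[k] - mean[k] for k in range(d)] for f in features]
    return [[sum(c[i] * c[j] for c in centered) / n for j in range(d)] for i in range(d)]


print(covariance_pool([[1.0, 2.0], [3.0, 6.0]]))  # [[1.0, 2.0], [2.0, 4.0]]
```

The off-diagonal entries capture how pairs of feature channels vary together across the face. That information is lost by first-order (average) pooling and is useful for separating subtly different expressions.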

Deng L. et al. [14] study the shortcomings of existing deep convolutional neural network optimization for facial expression recognition. The fer2013 dataset is used to train the convolutional network. The results show that the method achieves good recognition performance on facial expressions.

TABLE I. Comparison of techniques and methods used in existing systems

| S.No. | Ref. (Year) | Approach | Dataset | Method Used | Algorithm Used | Advantage | Accuracy |
|---|---|---|---|---|---|---|---|
| 1 | [5] 2021 | Effective classification and feature selection technique for facial emotion recognition from sequences of face images | CK+ | Minimal features selected by chi-square | Decision tree, random forest, radial basis function (RBF), MLP, SVM, KNN | Performance assessment of several supervised algorithms for facial emotion recognition using a limited set of chi-square-selected features | 94.23% |
| 2 | [6] 2021 | Effective method for classifying facial images from a facial emotion dataset | CK+ | Relief-F feature selection | Decision tree, SVM, KNN, random forest, radial basis function (RBF), MLP | Determines the accuracy of six classifiers using Relief-F-selected features, with a focus on using a minimal number of features | 94.93% |
| 3 | [7] 2020 | Effective modality fusion | SAVEE, eNTERFACE'05, AFEW | LBP-TOP and spectrogram | Two-stage fuzzy fusion based convolutional neural network (TSFFCNN) | Handles well the case where each modality's contribution to emotion recognition is highly imbalanced | SAVEE 99.79%, eNTERFACE'05 90.82%, AFEW 50.28% |
| 4 | [8] 2020 | Investigation of the eight basic human facial emotions | CK+ | Correlation, gain ratio, and information gain | Naïve Bayes, decision tree, KNN, MLP | Compares FER across three feature selection methods (correlation, gain ratio, information gain) to find the most distinguishing features of facial images | 91% (KNN, 30 features) |
| 5 | [9] 2020 | Improve spontaneous facial micro-expression recognition beyond hand-crafted temporal feature extraction | CASMEII, SMIC, SAMM | Facial motion magnification of the input sequence, followed by rank pooling to obtain dynamic images | CNN for micro-expression recognition on the selected dynamic images | Comprehensive and effective yet simple framework for micro-expression (ME) analysis and classification | CASMEII 78.5%, SMIC 67.3%, SAMM 66.67% |
| 6 | [10] 2020 | Allow a single feature to draw on the features of the action units (AUs): eyes, nose, mouth, eyebrows | CK+, Oulu-CASIA, MMI, AffectNet | VGG16 backbone, batch normalization (BN) layers, bidirectional long short-term memory (BiLSTM) | CNN and RNN | Experimental results indicate that SAANet outperforms other trained techniques | CK+ 99.54%, OuluCASIA MM 88.33%, AffectNet 87.06% |
| 7 | [11] 2020 | Address the challenging task of dynamic facial expression recognition | CK+, Oulu-CASIA, MMI | 3D Inception-ResNet network architecture | Spatial-Temporal and Channel Attention Module (STCAM) | Results suggest the method achieves better or comparable performance relative to state-of-the-art techniques | CK+ 99.08%, Oulu-CASIA 89.16% |
| 8 | [12] 2020 | Capture the evolution of natural expressions across different facial regions | MMI, AFEW, BP4D | uGMM, MIK, IMK | Dynamic-kernel-based facial expression representation | Dynamic kernels are a strong choice for expression recognition methods that model temporal variation | BP4D 74.5% |
| 9 | [13] 2019 | Better dynamic objectives learning; enlarge the distance between samples from easily confused classes | - | Deep network with covariance pooling and residual network units | New DNN for facial emotion recognition; new loss function that enlarges the gap between samples | The network design mitigates vanishing gradients | 86.47% |
| 10 | [14] 2019 | Improve accuracy | CK+, MMI, AFEW | Dropout layer, Island layer, Softmax layer, Stem layer, 3D Layout ResNets, GRU layer | CNN-based video facial emotion recognition technique | Adapts to facial emotion features and achieves high recognition accuracy and generalization | CK+ 94.39%, MMI 80.43%, AFEW 82.36% |

Conclusion

This paper presents a survey of facial expression recognition methods in which different feature extraction and classification techniques are applied, sometimes together with preprocessing. Across all methods, results are uneven across different emotions. Anger is recognized very accurately, while the accuracy for other emotions varies from technique to technique. The classifier also plays an important role in accuracy, and an even larger role in processing cost. Sometimes complex techniques achieve the same results as less complex ones. Principal component analysis (PCA) proved to be a good dimensionality reduction step before feature extraction by other techniques, which in turn reduces processing time, rather than being used for feature extraction itself.
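PCA's role as a dimensionality-reduction step can be sketched without external libraries. The sketch centers the data and builds the sample covariance matrix. It then finds the leading eigenvectors by power iteration with deflation, and projects the rows onto them. This is a minimal illustration assuming at least two samples and distinct leading eigenvalues; production code would normally use an optimized linear-algebra routine instead.

```python
import math
import random


def _matvec(m: list[list[float]], v: list[float]) -> list[float]:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def _normalize(v: list[float]) -> list[float]:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else v


def pca(X: list[list[float]], n_components: int, iterations: int = 200, seed: int = 0):
    """Project rows of X onto their top principal components.

    Uses the sample covariance matrix, power iteration to find each leading
    eigenvector, and deflation to remove it before finding the next one.
    Returns (mean, components, projected_rows).
    """
    n, d = len(X), len(X[0])
    mean = [sum(row[k] for row in X) / n for k in range(d)]
    centered = [[row[k] - mean[k] for k in range(d)] for row in X]
    cov = [[sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)] for i in range(d)]
    rng = random.Random(seed)
    components = []
    for _ in range(n_components):
        v = _normalize([rng.random() + 0.1 for _ in range(d)])
        for _ in range(iterations):
            w = _matvec(cov, v)
            if all(abs(x) < 1e-12 for x in w):
                break  # no variance left in this direction
            v = _normalize(w)
        eigenvalue = sum(a * b for a, b in zip(v, _matvec(cov, v)))
        components.append(v)
        cov = [[cov[i][j] - eigenvalue * v[i] * v[j] for j in range(d)] for i in range(d)]
    projected = [[sum(c[k] * comp[k] for k in range(d)) for comp in components] for c in centered]
    return mean, components, projected


mean, comps, Z = pca([[0, 0], [1, 2], [2, 4], [3, 6]], n_components=1)
print([round(x, 4) for x in comps[0]])  # [0.4472, 0.8944]
```

Here the 2-D points lie on one line, so a single component keeps all the variance. Downstream feature extraction or classification then works on one number per sample instead of two, which is the processing-time saving the conclusion refers to.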

References

[1] Y. LeCun, Y. Bengio, and G. Hinton, "Deep learning," Nature, vol. 521, p. 436, 2015, doi:10.1038/nature14539.

[2] W.-L. Chao, "Face Recognition," GICE, National Taiwan University, 2007.

[3] Y.-Q. Wang, "An Analysis of the Viola-Jones Face Detection Algorithm," Image Processing On Line, vol. 4, pp. 128-148, 2014.

[4] M. Bartlett, G. Littlewort, E. Vural, K. Lee, M. Cetin, A. Ercil, and J. Movellan, "Data mining spontaneous facial behavior with automatic expression coding," 2008.

[5] M. R. Mahmood, M. B. Abdulrazzaq, S. Zeebaree, A. K. Ibrahim, R. R. Zebari, and H. I. Dino, "Classification techniques' performance evaluation for facial expression recognition," Indonesian Journal of Electrical Engineering and Computer Science, vol. 21, pp. 176-1184, 2021.

[6] M. B. Abdulrazaq, M. R. Mahmood, S. R. Zeebaree, M. H. Abdulwahab, R. R. Zebari, and A. B. Sallow, "An Analytical Appraisal for Supervised Classifiers' Performance on Facial Expression Recognition Based on Relief-F Feature Selection," in Journal of Physics: Conference Series, 2021, p. 012055.

[7] M. Wu, W. Su, L. Chen, W. Pedrycz, and K. Hirota, "Two-stage Fuzzy Fusion based Convolution Neural Network for Dynamic Emotion Recognition," IEEE Transactions on Affective Computing, 2020.

[8] H. I. Dino and M. B. Abdulrazzaq, "A Comparison of Four Classification Algorithms for Facial Expression Recognition," Polytechnic Journal, vol. 10, pp. 74-80, 2020.

[9] T. T. Q. Le, T.-K. Tran, and M. Rege, "Dynamic image for micro-expression recognition on region-based framework," in 2020 IEEE 21st International Conference on Information Reuse and Integration for Data Science (IRI), 2020, pp. 75-81.

[10] D. Liu, X. Ouyang, S. Xu, P. Zhou, K. He, and S. Wen, "SAANet: Siamese action-units attention network for improving dynamic facial expression recognition," Neurocomputing, vol. 413, pp. 145-157, 2020.

[11] W. Chen, D. Zhang, M. Li, and D.-J. Lee, "STCAM: Spatial-Temporal and Channel Attention Module for Dynamic Facial Expression Recognition," IEEE Transactions on Affective Computing, 2020.

[12] N. Perveen, D. Roy, and K. M. Chalavadi, "Facial Expression Recognition in Videos Using Dynamic Kernels," IEEE Transactions on Image Processing, vol. 29, pp. 8316-8325, 2020.

[13] G. Wen, T. Chang, H. Li, and L. Jiang, "Dynamic Objectives Learning for Facial Expression Recognition," IEEE Transactions on Multimedia, 2020.

[14] L. Deng, Q. Wang, and D. Yuan, "Dynamic Facial Expression Recognition Based on Deep Learning," in 2019 14th International Conference on Computer Science & Education (ICCSE), 2019, pp. 32-37.

Copyright

Copyright © 2022 Thaneshwari Sahu, Shagufta Farzana. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET44700

Publish Date : 2022-06-22

ISSN : 2321-9653

Publisher Name : IJRASET
