Ijraset Journal For Research in Applied Science and Engineering Technology


Classification and Counting of Vehicle using Image Processing Techniques

Authors: Bhagya S Hadagalimath, Ayesha Siddiqui, Dr. Suvarna Nandyal

DOI Link: https://doi.org/10.22214/ijraset.2022.48404


Abstract

In this research work, we explore vehicle detection techniques that can be used in traffic surveillance systems. The system integrates CCTV cameras to detect vehicles. Tracking, counting and monitoring vehicles on highways is an important application of computer vision research. In this paper, we analyze congested highway situations in which individual vehicles are hard to track in heavy traffic because they occlude each other. We focus on these issues to propose a viable solution, and we apply the vehicle detection results to multi-object tracking and vehicle counting. Vehicle detection and vehicle type recognition are practical applications of machine learning. They apply directly to many operations of an intelligent traffic surveillance system. This paper introduces automatic vehicle detection and recognition using static image datasets. Using the same technique, we then extend vehicle detection to live CCTV surveillance. The surveillance system detects moving vehicles, recognizes them and counts them.


I. INTRODUCTION

A CCTV camera is an essential part of an intelligent traffic surveillance framework [1]. Such a framework automates the process of monitoring traffic in a particular area and detecting vehicles for further action. The captured images can give valuable clues to the police and other public tracking services. These clues include a vehicle's license plate number, the time and motion of the vehicle, and details about the driver, and any of them may become evidence of a crime or of an unforeseen or unfortunate incident. Earlier, images were processed manually. This practice still continues in India, whereas countries such as Saudi Arabia (KSA) have implemented automated CCTV systems that function 24x7 and take immediate action, including through signaling. Manual work has always proven slower and less efficient because of human error and the many other factors that affect people. With these points in mind, and with advances in technology, many researchers have developed intelligent traffic control systems using various techniques [3]. This research combines two previously published studies by other scholars. The resulting system is intended to help Lovely Professional University. The author chose this organization because it has a large population of students, teaching and non-teaching staff, other workers and visitors. The university handles many vehicles each day, and it checks vehicle registration, time slots and other parameters manually, which is slow and error-prone. This research aims to help the organization automate that process. The staff previously assigned to manual traffic checks within the premises can then be freed from this tedious task and given other work, so the organization accomplishes more in less time and at lower cost.
An illustrative image has been produced to explain this concept more clearly. Consider the following figures.

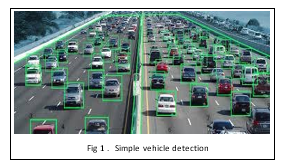

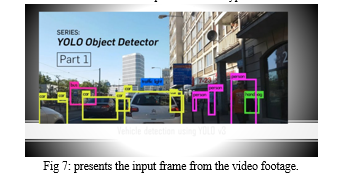

The figures above show output images from part 1 of our research model, which performs simple vehicle detection and recognition. The model observes all objects in the scene but detects only those that are vehicles, and classifies them accordingly.

Image processing is a method of converting an image into digital form and performing operations on it, either to obtain an enhanced image or to extract useful information. It is a type of signal processing in which the input is an image, such as a video frame or photograph, and the output is an image or characteristics associated with that image. An image processing system treats images as two-dimensional signals and applies established signal processing methods to them. It is among the most rapidly growing technologies, with applications across many areas of business, and it forms a core research area within engineering and computer science. Image processing involves three stages. First, the image is imported with an optical scanner or by photography. Second, the image is analyzed and manipulated through data compression, image enhancement and the detection of patterns that are not visible to the human eye, as in satellite photographs. Third, the output is produced, either as an altered image or as a report based on the image analysis.

We use signal-to-signal control in our project. Currently available systems use fixed-length signals, or fixed timers, which lead to traffic jams. Fixed timers can work in low-traffic areas, but at larger junctions they cause congestion. Our system uses variable timers instead to avoid such jams. These adaptive timers are set by algorithms based on the density of the traffic, and we are developing distributed algorithms to adapt the traffic signal plans.

Vehicle detection and statistics in highway monitoring video scenes are highly significant for intelligent traffic management and control of the highway. With the widespread installation of traffic surveillance cameras, a vast database of traffic video footage has become available for analysis. At a high viewing angle, a longer and more distant stretch of road surface can be observed.
At this viewing angle, the apparent size of a vehicle changes greatly, and detection accuracy is low for small objects far down the road. In such complex camera scenes, these problems must be solved before the detection results can be applied further. In this paper, we focus on these issues to propose a viable solution, and we apply the vehicle detection results to multi-object tracking and vehicle counting.
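The paper does not specify the density-based timer algorithm. The minimal sketch below assumes that each signal cycle has a fixed length. That length is shared among the junction's approaches in proportion to their detected vehicle counts. The minimum and maximum green times are hypothetical values:

```python
def allocate_green_times(counts, cycle=120, min_green=10, max_green=60):
    """Split one signal cycle's green time (seconds) across approaches in
    proportion to their vehicle counts. Hypothetical density-based rule."""
    total = sum(counts)
    if total == 0:
        # No traffic detected: fall back to an equal split (fixed-timer behaviour).
        return [cycle / len(counts)] * len(counts)
    times = []
    for c in counts:
        share = cycle * c / total
        # Clamp so that no approach starves or monopolises the junction.
        times.append(min(max(share, min_green), max_green))
    return times
```

For example, counts of 30, 10, 0 and 20 vehicles give green times of 60, 20, 10 and 40 seconds. Because of the clamping, the times can sum to slightly more or less than the nominal cycle, and a real controller would have to rebalance them.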

II. LITERATURE SURVEY

The paper "GPS based vehicle tracking and monitoring system: A solution for public transportation" tracks and monitors public transportation vehicles using devices such as a Raspberry Pi and a GPS antenna. The Raspberry Pi processing board receives the location values and produces the result. With this method, a transportation vehicle can be monitored from its source to its destination. A GPS receiver module continuously receives the latitude and longitude of the vehicle's present location.
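The surveyed paper does not state the receiver's output format. Many GPS modules emit NMEA 0183 sentences, so this sketch assumes that format. It converts the latitude and longitude fields of a `$GPGGA` sentence, which are given in degrees and decimal minutes, into decimal degrees. The checksum after `*` is not verified here:

```python
def parse_gpgga(sentence):
    """Return (lat, lon) in decimal degrees from a GGA NMEA sentence,
    or None if it is not GGA or the receiver reports no fix."""
    fields = sentence.split("*")[0].split(",")   # drop checksum, split fields
    if not fields[0].endswith("GGA") or fields[6] == "0":
        return None                              # wrong sentence or fix quality 0

    def to_degrees(value, hemisphere, deg_digits):
        degrees = int(value[:deg_digits])        # leading whole degrees
        minutes = float(value[deg_digits:])      # remaining decimal minutes
        dd = degrees + minutes / 60.0
        return -dd if hemisphere in ("S", "W") else dd

    lat = to_degrees(fields[2], fields[3], 2)    # latitude is ddmm.mmmm
    lon = to_degrees(fields[4], fields[5], 3)    # longitude is dddmm.mmmm
    return lat, lon
```

For example, `parse_gpgga("$GPGGA,123519,4807.038,N,01131.000,E,1,08,0.9,545.4,M,46.9,M,,*47")` gives approximately `(48.1173, 11.5167)`.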

A. Real-time GPS Vehicle Tracking System

This paper presents the design and implementation of a real-time GPS tracker system using an Arduino.

- The method applies to tracking salespeople and private drivers, and to vehicle safety.

- The author also addresses owners of expensive cars who want to observe and track their vehicles, including current movement and past activity.

B. Android app based vehicle tracking using GPS and GSM

- The author of this paper explains an embedded system that determines the location of a vehicle using GSM and GPS technologies.

- The system needs GPS and GSM modules closely linked with a microcontroller.

- First, the GPS unit installed in the device receives the vehicle location from satellites and stores it in the microcontroller's buffer.

- To track the location, the registered mobile number sends a request. Once the number is authenticated, the location is sent to that mobile number as an SMS.
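The request and reply flow above can be sketched as a single handler. The GSM modem I/O is left out. The registered number, the `LOCATION` command keyword and the reply format are all hypothetical:

```python
REGISTERED_NUMBERS = {"+919800000001"}   # hypothetical owner number(s)

def handle_sms_request(sender, text, last_fix):
    """Return the reply SMS body for a location request, or None if rejected.

    last_fix is the (lat, lon) most recently buffered from the GPS module."""
    if sender not in REGISTERED_NUMBERS:
        return None                      # authentication failed: ignore the request
    if text.strip().upper() != "LOCATION":
        return None                      # not the (hypothetical) request keyword
    if last_fix is None:
        return "NO GPS FIX"
    lat, lon = last_fix
    return f"LAT:{lat:.6f} LON:{lon:.6f}"
```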

C. Survey Paper On Vehicle Tracking System Using GPS And Android

- This paper proposes a GPS-based vehicle tracking system that helps organizations find the addresses of their vehicles and locate their positions on mobile devices.

- The author states that the system gives the exact location of the vehicle along with the distance between the user and the vehicle.

- The system consists of a single Android mobile, GPS and GSM modems, and a processor installed in the vehicle. When the vehicle is activated and starts moving, its location is updated continuously on a server using the GPRS service.
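The haversine formula computes the distance between the user and the vehicle from their two GPS fixes. This minimal sketch assumes a spherical Earth with a mean radius of 6371 km:

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius (spherical-Earth assumption)

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))
```

For example, `haversine_km(0, 0, 1, 0)` returns about 111.19 km, the length of one degree of latitude.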

D. Review of Accident Alert and Vehicle Tracking System

- In this paper, the author describes a system that can track a vehicle and detect an accident.

- Traffic accidents are detected automatically using vibration sensors or a piezoelectric sensor.

- The sensor first senses the occurrence of an accident and sends its output to the microcontroller.

- As soon as the vehicle meets with an accident, the GPS module detects the latitude and longitude of the vehicle.

- The GSM module then sends the vehicle's latitude and longitude to the ambulance nearest to that location.
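The alert sequence can be sketched as follows. The vibration threshold, the sensor units and the dictionary of ambulance positions are hypothetical. Distances use the haversine formula on a spherical Earth:

```python
import math

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    a = (math.sin(math.radians(lat2 - lat1) / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(math.radians(lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(a))

def accident_alert(vibration, threshold, vehicle_pos, ambulances):
    """If the vibration reading reaches the threshold, return (ambulance_id, message)
    for the nearest ambulance; otherwise return None.

    ambulances maps a (hypothetical) ambulance id to its (lat, lon)."""
    if vibration < threshold:
        return None                               # no accident detected
    lat, lon = vehicle_pos                        # fix read from the GPS module
    nearest = min(ambulances, key=lambda a: haversine_km(lat, lon, *ambulances[a]))
    return nearest, f"ACCIDENT AT {lat:.5f},{lon:.5f}"   # body sent via GSM
```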

The main goal of vehicle classification is to assign each passing vehicle to its type, which improves traffic surveillance and helps reduce accidents. The rest of this paper is organized as follows. Section III describes the methodology, Section IV the implementation of YOLOv3, Section V related work, and Section VI the experimental results and discussion. The conclusion follows.

III. METHODOLOGY

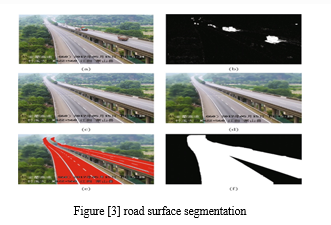

In this research work, we use road surface segmentation. This section describes how the highway road surface is extracted and segmented. We implemented surface extraction and segmentation with image processing methods such as Gaussian mixture modelling, which improves the results of the deep learning object detection method. Highway surveillance video images have a large field of view. Vehicles are the focus of this study, so the region of interest in the image is the highway road surface area. Moreover, depending on the camera's shooting angle, the road surface area is concentrated in a specific range of the image. Using this property, we can extract the highway road surface area from the video.

To eliminate the influence of vehicles on road-area segmentation, we used Gaussian mixture modelling to extract the background from the first 500 frames of the video. Within a certain time range, the value of each pixel follows a Gaussian distribution around a central value, and these statistics are accumulated for each pixel over every frame. If a pixel's value lies far from the centre, the pixel belongs to the foreground. If it deviates from the centre value by no more than a certain variance, the pixel belongs to the background. The Gaussian mixture model is especially useful when background pixels have multi-peak (multimodal) characteristics, as in the highway surveillance images used in this project.
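Below is a from-scratch sketch of the per-pixel Gaussian mixture background model described above. It applies a simplified Stauffer-Grimson style update to grayscale frames. The number of components `k`, the learning rate `alpha`, the variances and the match threshold `lam` are illustrative assumptions, not values from the paper. OpenCV's `cv2.createBackgroundSubtractorMOG2` is a production implementation of the same idea:

```python
import numpy as np

def gmm_background(frames, n_frames=500, k=3, alpha=0.02,
                   init_var=400.0, min_var=16.0, lam=2.5):
    """Estimate a background image from the first n_frames grayscale frames
    using a per-pixel mixture of k Gaussians (simplified online update)."""
    frames = np.asarray(frames[:n_frames], dtype=np.float64)
    h, w = frames.shape[1:]
    mu = np.repeat(frames[0][..., None], k, axis=2)   # component means
    var = np.full((h, w, k), init_var)                # component variances
    wt = np.zeros((h, w, k))
    wt[..., 0] = 1.0                                  # start with one active component
    comp = np.arange(k)
    for frame in frames[1:]:
        x = frame[..., None]
        # A pixel matches a component if it lies within lam standard deviations.
        matched = (x - mu) ** 2 <= lam ** 2 * var
        any_match = matched.any(axis=2)
        best = np.where(matched, wt, -np.inf).argmax(axis=2)   # strongest match
        worst = wt.argmin(axis=2)                              # weakest component
        idx = np.where(any_match, best, worst)
        chosen = comp == idx[..., None]                        # one-hot, (h, w, k)
        wt = (1 - alpha) * wt + alpha * chosen                 # decay all, reward chosen
        # Matched pixels: move the chosen component towards the new value.
        upd = chosen & any_match[..., None]
        mu = np.where(upd, mu + alpha * (x - mu), mu)
        var = np.where(upd, np.maximum(var + alpha * ((x - mu) ** 2 - var), min_var), var)
        # Unmatched pixels (foreground): replace the weakest component.
        new = chosen & ~any_match[..., None]
        mu = np.where(new, x, mu)
        var = np.where(new, init_var, var)
        wt /= wt.sum(axis=2, keepdims=True)
    # Background = mean of each pixel's highest-weight component.
    top = wt.argmax(axis=2)[..., None]
    return np.take_along_axis(mu, top, axis=2)[..., 0]
```

Vehicles occupy any given pixel only briefly, so their components never gain enough weight to become the background. This is why the model can recover the empty road even from footage that contains traffic.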

The road surface is then extracted by region growing from a seed point on the road, because the pixel values of adjacent, continuous road surface areas are close to the seed point's pixel value. Finally, hole filling and morphological dilation (expansion) are performed to extract the road surface more completely. We extracted the road surfaces of several different highway scenes this way.
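The region growing, hole filling and dilation steps can be sketched in NumPy as below. The paper does not say how the seed point is chosen or what intensity tolerance `tol` is used, so both are supplied by the caller as assumptions:

```python
from collections import deque
import numpy as np

STEPS = ((1, 0), (-1, 0), (0, 1), (0, -1))   # 4-connectivity

def grow_region(img, seed, tol):
    """Mask of pixels 4-connected to seed whose value is within tol of the seed value."""
    h, w = img.shape
    ref = float(img[seed])
    mask = np.zeros((h, w), dtype=bool)
    mask[seed] = True
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for dr, dc in STEPS:
            nr, nc = r + dr, c + dc
            if (0 <= nr < h and 0 <= nc < w and not mask[nr, nc]
                    and abs(float(img[nr, nc]) - ref) <= tol):
                mask[nr, nc] = True
                queue.append((nr, nc))
    return mask

def fill_holes(mask):
    """Fill background regions that cannot be reached from the image border."""
    h, w = mask.shape
    outside = np.zeros_like(mask)
    queue = deque((r, c) for r in range(h) for c in range(w)
                  if (r in (0, h - 1) or c in (0, w - 1)) and not mask[r, c])
    for r, c in queue:
        outside[r, c] = True
    while queue:
        r, c = queue.popleft()
        for dr, dc in STEPS:
            nr, nc = r + dr, c + dc
            if 0 <= nr < h and 0 <= nc < w and not mask[nr, nc] and not outside[nr, nc]:
                outside[nr, nc] = True
                queue.append((nr, nc))
    return ~outside

def dilate(mask, iterations=1):
    """3x3 binary dilation: a pixel is set if any pixel in its 3x3 neighbourhood is set."""
    out = mask.copy()
    h, w = mask.shape
    for _ in range(iterations):
        padded = np.pad(out, 1)
        out = np.zeros_like(mask)
        for dr in range(3):
            for dc in range(3):
                out |= padded[dr:dr + h, dc:dc + w]
    return out
```

A lane marking inside the road differs from the seed value, so region growing leaves it out. Hole filling restores it because it is enclosed by road pixels.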

In Figure 3 above, we segmented the road surface area to provide accurate input for subsequent vehicle detection. For the extracted road surface image, a minimum bounding rectangle without rotation (axis-aligned) is generated.
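The axis-aligned (non-rotated) bounding rectangle of the extracted road mask takes a single reduction over rows and columns. This sketch returns `(x, y, w, h)`, the same convention as OpenCV's `cv2.boundingRect`, so the road region can be cropped with `frame[y:y + h, x:x + w]`:

```python
import numpy as np

def bounding_rect(mask):
    """Axis-aligned bounding rectangle (x, y, w, h) of the True pixels in a mask."""
    rows = np.flatnonzero(mask.any(axis=1))   # rows containing road pixels
    cols = np.flatnonzero(mask.any(axis=0))   # columns containing road pixels
    if rows.size == 0:
        return None                           # empty mask: no road found
    x0, x1 = cols[0], cols[-1]
    y0, y1 = rows[0], rows[-1]
    return int(x0), int(y0), int(x1 - x0 + 1), int(y1 - y0 + 1)
```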

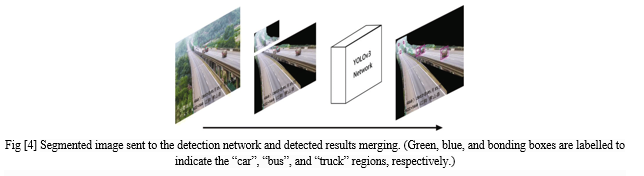

Vehicle detection using YOLOv3

The highway vehicle detection framework was implemented with the YOLOv3 network. YOLOv3 continues the basic idea of the first two generations of YOLO: the grid cell that contains the centre of an object's label box is responsible for predicting that object. The network structure adopted by YOLOv3 is called Darknet-53.
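A concrete example of the grid-cell rule: for a 416×416 input, YOLOv3 predicts at three scales with strides 32, 16 and 8, which gives 13×13, 26×26 and 52×52 grids. The sketch below finds the cell containing a box centre at each scale. The input size is an assumption, because the paper does not state it:

```python
def responsible_cells(cx, cy, input_size=416, strides=(32, 16, 8)):
    """For a box centre (cx, cy) in input-image pixels, return
    (grid_size, col, row) of the cell containing it at each output scale."""
    cells = []
    for s in strides:
        grid = input_size // s                 # 13, 26, 52 for a 416x416 input
        col = min(int(cx // s), grid - 1)      # clamp centres on the far edge
        row = min(int(cy // s), grid - 1)
        cells.append((grid, col, row))
    return cells
```

In YOLOv3 each cell holds three anchor boxes per scale. A ground-truth box is finally assigned to the single anchor, across all scales, that matches it best, so these are candidate cells rather than three simultaneous assignments.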

This structure is fully convolutional. It replaces the directly connected convolutional layers of the previous version with residual blocks, whose shortcut branches connect a block's input directly to deeper layers of the network. Detection is fast and accurate, and YOLOv3 adapts well to the traffic scenes captured by highway surveillance video. The network outputs the coordinates, confidence and category of each object. The dataset presented in the vehicle dataset section was used to train the YOLOv3 network, which produced the vehicle object detection model. This model detects three types of vehicles: cars, buses and trucks. Motorcycles were not included because few appear on the highway. The remote area and the proximal area of the road surface are sent to the network separately for detection. The vehicle boxes detected in the two areas are then mapped back to the original image to obtain the correct object positions in it.
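To map detections from the two road areas back to the full frame, each crop's offset must be undone. If a crop was resized before being sent to the network, its scaling must be undone too. The paper does not say whether the remote area is enlarged, so `scale` below is an assumption, with 1.0 meaning no resizing. Boxes are `(x, y, w, h, label, score)` tuples:

```python
def map_to_original(detections, offset, scale=1.0):
    """Convert boxes detected in a crop (resized by `scale`) back to
    full-frame coordinates, given the crop's top-left offset (ox, oy)."""
    ox, oy = offset
    return [(x / scale + ox, y / scale + oy, w / scale, h / scale, label, score)
            for x, y, w, h, label, score in detections]

def merge_areas(areas):
    """areas: iterable of (detections, offset, scale), e.g. remote then proximal."""
    merged = []
    for dets, offset, scale in areas:
        merged.extend(map_to_original(dets, offset, scale))
    return merged
```

If the two areas overlap, the same vehicle can be reported twice. Running non-max suppression on the merged list removes such duplicates.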

A. Multi-Object Tracking

This section describes how multiple objects are tracked based on the object boxes detected in the "Vehicle detection using YOLOv3" section. In this study, the YOLOv3 algorithm was used to extract the features of the detected vehicles, with good results. Feature points are extracted only within the object boxes produced by the vehicle detection algorithm, not from the entire road surface area, which greatly reduces the amount of computation. A vehicle changes only slightly between consecutive video frames. The object's prediction box in the next frame can therefore be drawn from the features extracted in its current box.

- Frame Differencing: A video is a set of frames stacked together in the right sequence. When an object moves in a video, it is at a different location in each consecutive frame. The pixel-wise difference between the first and second frames therefore highlights the pixels of the moving object, which gives us the pixels and coordinates of that object. This is broadly how the frame differencing method works.

- Image Thresholding: In this method, each pixel of a grayscale image is assigned one of two values, representing black and white, based on a threshold. If the pixel value is greater than the threshold, it is assigned one value. Otherwise, it is assigned the other.

- Finding Contours: Contours identify the shape of an area in the image that has the same color or intensity. They are like boundaries around regions of interest: in a thresholded image, the boundaries surrounding the white regions are the contours. The coordinates of these contours are easy to obtain, and they give the locations of the highlighted regions.

- Image Dilation: This is a convolution-like operation in which a kernel (a small matrix) is passed over the entire image. Each output pixel takes the maximum value under the kernel, which enlarges the bright regions. As an intuition, the image on the right in the figure below is the dilated version of the image on the left.
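The four steps can be chained into a minimal NumPy sketch that returns the bounding boxes of moving regions. Connected-component labelling stands in for contour finding here, since each region's outer boundary is its contour. With OpenCV, the same pipeline maps onto `cv2.absdiff`, `cv2.threshold`, `cv2.dilate` and `cv2.findContours`. The threshold, dilation count and minimum area are assumed values:

```python
from collections import deque
import numpy as np

def moving_boxes(prev, curr, thresh=25, dilate_iter=2, min_area=20):
    """Return (x, y, w, h) boxes of regions that changed between two grayscale frames."""
    h, w = curr.shape
    # 1. Frame differencing (int16 avoids uint8 wrap-around).
    diff = np.abs(curr.astype(np.int16) - prev.astype(np.int16))
    # 2. Thresholding into a binary image.
    mask = diff > thresh
    # 3. Dilation with a 3x3 square kernel to merge nearby fragments.
    for _ in range(dilate_iter):
        padded = np.pad(mask, 1)
        mask = np.zeros_like(mask)
        for dr in range(3):
            for dc in range(3):
                mask |= padded[dr:dr + h, dc:dc + w]
    # 4. Group 8-connected foreground pixels into regions (stand-in for contours).
    seen = np.zeros_like(mask)
    boxes = []
    for r0, c0 in zip(*np.nonzero(mask)):
        if seen[r0, c0]:
            continue
        seen[r0, c0] = True
        queue, rows, cols = deque([(r0, c0)]), [], []
        while queue:
            r, c = queue.popleft()
            rows.append(r)
            cols.append(c)
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    nr, nc = r + dr, c + dc
                    if 0 <= nr < h and 0 <= nc < w and mask[nr, nc] and not seen[nr, nc]:
                        seen[nr, nc] = True
                        queue.append((nr, nc))
        if len(rows) >= min_area:              # ignore small noise blobs
            x0, y0 = min(cols), min(rows)
            boxes.append((int(x0), int(y0), int(max(cols) - x0 + 1), int(max(rows) - y0 + 1)))
    return boxes
```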

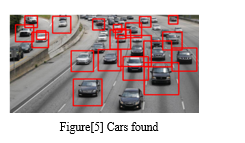

B. Detecting Car using Car Cascade

Next, we need a car cascade to detect cars. We first upload the cascade files to Colab, or, on a local machine, place them in the same folder, and specify their path in car_cascade_src. We then use OpenCV's predefined CascadeClassifier to load the pre-trained car cascade from its XML file; the classifier is already trained, so no training takes place here. Using the contours returned above, we draw a rectangle with a red boundary around every car the classifier detects.
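A cascade classifier is normally run through its `detectMultiScale` method. The method's `scaleFactor` parameter sets how much the image is shrunk at each scale of the search. The sketch below assumes a hypothetical 24×24 detection window and ignores `minSize`/`maxSize`. It lists the scales that get scanned, roughly mirroring that search, and shows why the scale factor affects the detection rate:

```python
def pyramid_scales(image_size, window=(24, 24), scale_factor=1.1):
    """List the relative scales at which a fixed-size cascade window is evaluated.

    Each step shrinks the image by scale_factor (equivalently, the window grows
    by it) until the window no longer fits inside the image."""
    img_w, img_h = image_size
    win_w, win_h = window
    scales, factor = [], 1.0
    while win_w * factor <= img_w and win_h * factor <= img_h:
        scales.append(round(factor, 4))
        factor *= scale_factor
    return scales
```

With a 100×100 image, `scale_factor=2.0` scans only three scales, while `scale_factor=1.1` scans fifteen. A smaller factor misses fewer vehicle sizes but costs more computation.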

C. Bus Detection

Next, we use another image, a bus image fetched from the internet. We resize the image, store it as a NumPy array and convert it to grayscale.
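The resize and grayscale steps can be sketched in plain NumPy. The sketch assumes the image has already been loaded as a BGR `uint8` array, OpenCV's channel order. The luma weights 0.299, 0.587 and 0.114 are the ones OpenCV documents for `COLOR_BGR2GRAY`. Nearest-neighbour sampling simplifies the bilinear interpolation that `cv2.resize` uses by default:

```python
import numpy as np

def to_gray(bgr):
    """Convert a BGR uint8 image to grayscale using ITU-R BT.601 luma weights."""
    b, g, r = bgr[..., 0], bgr[..., 1], bgr[..., 2]
    gray = 0.114 * b + 0.587 * g + 0.299 * r
    return np.clip(np.rint(gray), 0, 255).astype(np.uint8)

def resize_nearest(img, new_w, new_h):
    """Nearest-neighbour resize by sampling evenly spaced source rows and columns."""
    h, w = img.shape[:2]
    rows = np.arange(new_h) * h // new_h
    cols = np.arange(new_w) * w // new_w
    return img[rows[:, None], cols]
```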

Because we are working with a bus image, we need a cascade that detects buses. We use the Bus_front cascade to identify buses in the image and perform the same operations as above.

As with the car cascade, we perform the same contour operations on the bus image and draw a rectangle around any bus that is detected.

IV. IMPLEMENTATION OF YOLOV3

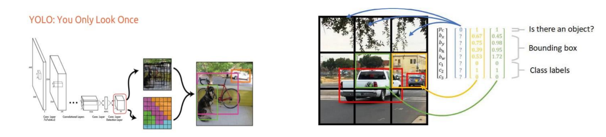

YOLO ("You Only Look Once: Unified, Real-Time Object Detection") is a deep learning architecture that takes a completely different approach to detection. It is a convolutional neural network (CNN) for real-time object detection. It is popular because it achieves high accuracy while running in real time. The algorithm "only looks once" at the input image, meaning a single forward pass through the network produces all of its predictions.

A. How Does YOLO Work?

Prior detection systems repurpose classifiers or localizers to perform detection. The model is applied to an image at multiple scales and locations, and high-scoring regions of the image are treated as detections.

The YOLO algorithm takes a completely different approach. It applies a single neural network to the full image. The network divides the image into regions and predicts bounding boxes and class probabilities for each region. The bounding boxes are then weighted by the predicted probabilities.

Non-max suppression ensures that the detector reports each object only once by discarding redundant, overlapping detections. The algorithm then outputs the recognized objects along with their bounding boxes.
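A minimal greedy non-max suppression sketch in NumPy follows. Boxes are given as `(x1, y1, x2, y2)` corners, and the IoU threshold of 0.45 is an assumed value. In practice, detectors usually run this step separately for each class:

```python
import numpy as np

def box_area(b):
    """Area of (x1, y1, x2, y2) boxes; works for one box or an (N, 4) array."""
    return (b[..., 2] - b[..., 0]) * (b[..., 3] - b[..., 1])

def iou(box, boxes):
    """IoU between one box and each row of an (N, 4) array of boxes."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    return inter / (box_area(box) + box_area(boxes) - inter)

def nms(boxes, scores, iou_thresh=0.45):
    """Greedy non-max suppression; returns the indices of the boxes kept."""
    boxes = np.asarray(boxes, dtype=float)
    order = np.argsort(scores)[::-1]          # highest confidence first
    keep = []
    while order.size > 0:
        i = order[0]
        keep.append(int(i))
        rest = order[1:]
        # Discard remaining boxes that overlap the kept box too much.
        order = rest[iou(boxes[i], boxes[rest]) <= iou_thresh]
    return keep
```

Two heavily overlapping boxes around the same car collapse to the higher-scoring one, while a separate box around another vehicle is kept.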

In this paper, we are going to design a counter system using OpenCV in Python that will be able to track any moving objects using the idea of Euclidean distance tracking and contouring. You might have worked on computer vision before. Have you ever thought of tracking a single object wherever it goes?

Object tracking is the process of following the same object, and keeping it identified, wherever it moves.

There are multiple ways to implement object tracking using OpenCV. They fall into two categories:

- Single Object Tracking

- Multiple Object Tracking

In this paper, we implement multiple object tracking, since our goal is to count the vehicles that pass during the time frame.

B. Multiple Tracking Algorithms Depend On Complexity And Computation

- DEEP SORT: DEEP SORT is one of the most widely used tracking algorithms. It is very effective because it uses deep learning techniques. It is commonly combined with YOLO object detection, and its tracker uses a Kalman filter.

- Centroid Tracking Algorithm: The centroid tracking algorithm is a multi-step process that tracks points with a simple calculation, the Euclidean distance. It can also be implemented with custom code.

a. Step 1: Calculate the centroid of each detected object from its bounding box coordinates.

b. Step 2: Repeat this for every subsequent frame, computing the centroid of each bounding box and assigning an ID to every box detected. Then compute the Euclidean distance between every possible pair of existing and new centroids.

c. Step 3: We assume that an object moves a shorter distance than its distance to any other centroid. The pair of centroids with the minimum distance across subsequent frames is therefore considered to be the same object.

d. Step 4: Assign the existing IDs to the moved centroids, indicating that they are the same objects.
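Steps 1 to 4 can be sketched as a small greedy tracker. Boxes are `(x, y, w, h)`. `max_dist` is an assumed pixel limit beyond which a centroid is treated as a new object. Objects missing from a frame are simply dropped, whereas production trackers usually keep them for a few frames:

```python
import math

class CentroidTracker:
    """Greedy centroid tracker keyed by integer object IDs."""

    def __init__(self, max_dist=50.0):
        self.max_dist = max_dist
        self.objects = {}      # id -> last known centroid
        self.next_id = 0

    def update(self, boxes):
        # Step 1: centroids of the current detections.
        centroids = [(x + w / 2, y + h / 2) for x, y, w, h in boxes]
        # Step 2: distance for every (tracked, new) centroid pair, shortest first.
        pairs = sorted((math.dist(old, new), oid, j)
                       for oid, old in self.objects.items()
                       for j, new in enumerate(centroids))
        updated, used_ids, used_new = {}, set(), set()
        # Step 3: the closest remaining pair is taken to be the same object.
        for d, oid, j in pairs:
            if d > self.max_dist or oid in used_ids or j in used_new:
                continue
            # Step 4: the moved centroid keeps the existing ID.
            updated[oid] = centroids[j]
            used_ids.add(oid)
            used_new.add(j)
        # Unmatched detections are new objects and get fresh IDs.
        for j, c in enumerate(centroids):
            if j not in used_new:
                updated[self.next_id] = c
                self.next_id += 1
        self.objects = updated
        return dict(self.objects)
```

Every new vehicle receives the next unused ID, so `next_id` doubles as a running vehicle count.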

We will use the frame subtraction technique to capture a moving object.

V. RELATED WORK

At present, vision-based vehicle object detection methods divide into traditional machine vision methods and complex deep learning methods. Traditional machine vision methods use the motion of a vehicle to separate it from a fixed background image.

These methods can be divided into three categories:

- The method of using background subtraction.

- The method of using continuous video frame difference.

- The method of using optical flow.

The video frame difference method calculates the difference between the pixel values of two or three consecutive video frames and separates the moving foreground region with a threshold. With this method and noise suppression, a stopped vehicle can also be detected. When the background in the video is fixed, background information is used to build a background model. Each frame is then compared with that model, and the moving object can be segmented. The optical flow method detects motion regions in the video: the generated optical flow field gives each pixel's direction and speed of motion. Vehicle detection methods based on vehicle features, such as the Scale Invariant Feature Transform (SIFT) and Speeded Up Robust Features (SURF), have also been widely used. In addition, 3D models have been used for vehicle detection and classification. Using the correlation curves of 3D ridges on the vehicle's outer surface, such methods divide vehicles into three categories: cars, SUVs and minibuses.
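One common formulation of the three-frame variant marks a pixel as moving only if it changed both between the first and second frames and between the second and third. This removes the "ghost" that two-frame differencing leaves at the object's old position. A sketch with an assumed threshold:

```python
import numpy as np

def three_frame_mask(f1, f2, f3, thresh=25):
    """Moving-foreground mask for the middle frame f2 of three consecutive
    grayscale frames: foreground only where both successive differences exceed thresh."""
    a, b, c = (f.astype(np.int16) for f in (f1, f2, f3))   # avoid uint8 wrap-around
    return (np.abs(b - a) > thresh) & (np.abs(c - b) > thresh)
```

For an object sliding right through positions 0 to 2, 3 to 5 and 6 to 8, the mask keeps only columns 3 to 5, its position in the middle frame.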

Deep convolutional neural networks (CNNs) have achieved remarkable success in vehicle object detection. CNNs learn image features well and can perform multiple related tasks, such as classification and bounding box regression. Detection methods can generally be divided into two categories. Two-stage methods generate candidate boxes for objects with various algorithms and then classify the objects with a convolutional neural network. One-stage methods skip candidate boxes and instead treat the localization of the object bounding box directly as a regression problem. Among two-stage methods, Region-CNN (R-CNN) uses selective search over image regions.

For the category loss, YOLOv3 uses binary cross-entropy, which can handle multiple labels for the same object. Logistic regression predicts the box confidence (objectness) according to whether the IoU between a prior (anchor) box and the ground-truth box is greater than 0.5. If more than one prior box satisfies this condition, only the prior with the largest IoU is used in the final object prediction.
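The prior-selection rule and the multi-label class loss described above can be sketched as follows. Priors are compared by width and height only, as if centred on the same point, and the 0.5 threshold comes from the text. The sketch follows the paragraph's description. In the YOLOv3 paper, by contrast, the best-matching prior is always assigned, and other priors above 0.5 IoU are only ignored in the objectness loss:

```python
import math

def wh_iou(wh1, wh2):
    """IoU of two boxes given as (width, height), assuming a shared centre."""
    inter = min(wh1[0], wh2[0]) * min(wh1[1], wh2[1])
    return inter / (wh1[0] * wh1[1] + wh2[0] * wh2[1] - inter)

def assign_prior(gt_wh, priors, thresh=0.5):
    """Index of the prior with the largest IoU, if that IoU exceeds thresh; else None."""
    ious = [wh_iou(gt_wh, p) for p in priors]
    best = max(range(len(priors)), key=ious.__getitem__)
    return best if ious[best] > thresh else None

def bce_multilabel(probs, targets, eps=1e-7):
    """Sum of independent per-class binary cross-entropy terms."""
    total = 0.0
    for p, t in zip(probs, targets):
        p = min(max(p, eps), 1 - eps)          # keep log() finite
        total -= t * math.log(p) + (1 - t) * math.log(1 - p)
    return total
```

`bce_multilabel` scores each class independently, so several labels can be true for the same box, which a softmax over classes would not allow.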

In this research work, one objective is to avoid vehicle collisions and prevent accidents. A camera is placed at the front of the car to scan and observe the environment ahead as the car is driven. A primary step is detecting cars in the image, because if no car appears in the scene captured by the front camera, no collision is possible.

Images are captured at short time intervals, so the distance a vehicle moves within one interval can be measured, which directly gives its average velocity. The system knows the relative velocities and checks whether the distance between the detected vehicle and our car falls below an acceptable threshold. With both, it can identify potential collisions. It can then warn the driver, or apply the brakes if the car has an automated braking system.
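A minimal sketch of this check follows. It assumes the distance to the vehicle ahead is already available in metres for consecutive frames, since the text does not say how it is measured from the image. The safe-gap threshold is a hypothetical value. The sketch predicts the gap one interval ahead from the current closing speed:

```python
def closing_speed(d_prev_m, d_curr_m, dt_s):
    """Average relative speed (m/s) over one frame interval; positive means
    the gap to the vehicle ahead is shrinking."""
    return (d_prev_m - d_curr_m) / dt_s

def collision_action(d_prev_m, d_curr_m, dt_s, safe_gap_m=10.0):
    """Return 'brake', 'warn' or 'ok' for the latest pair of distance readings."""
    if d_curr_m < safe_gap_m:
        return "brake"                              # already closer than the acceptable gap
    predicted = d_curr_m - closing_speed(d_prev_m, d_curr_m, dt_s) * dt_s
    if predicted < safe_gap_m:
        return "warn"                               # gap expected to fall below the threshold next interval
    return "ok"
```

For example, gaps of 16 m and then 12 m measured 0.5 s apart give a closing speed of 8 m/s and a predicted gap of 8 m, so the driver is warned.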


Current traffic control techniques, such as magnetic loop detectors buried in the road and infrared and radar sensors at the roadside, provide limited traffic information. They also require separate systems for traffic counting and traffic surveillance. Inductive loop detectors are cost-effective. However, they fail often when installed in poor road surfaces, shorten pavement life, and obstruct traffic during maintenance and repair. Infrared sensors are affected by fog more than video cameras are and cannot be used for effective surveillance.

In contrast, video-based systems offer many advantages over traditional techniques. They provide more traffic information, combine surveillance and traffic control technologies, are easily installed, and are scalable with progress in image processing techniques. In this work, we evaluate the process and advantages of using image processing for traffic control. Our project, once implemented, would remove the need for traffic personnel to regulate traffic at various junctions. This technology is therefore valuable for analyzing and improving road traffic performance. Giving priority to emergency vehicles has also been a research topic in the past. One proposed system for detecting these vehicles is based on Radio-Frequency Identification (RFID).

VI. EXPERIMENTAL RESULTS AND DISCUSSIONS

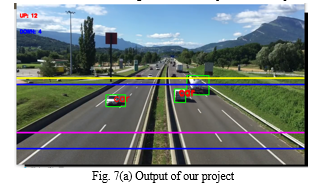

This section shows the results of vehicle detection. Figures 7(a) and 7(b) present input frames from the video footage.

Figure 7(a) shows the region of interest, whose boundary is marked by the coloured lines.

Video footage was given as input to the system, and cars were detected with the YOLOv3 algorithm. A specific region was then selected as the region of interest, and each car was tracked until it left that region. Every frame is compared with the previous frame. If a car is present in both frames and its x and y coordinates differ by less than max(Width, Height) pixels, we consider it the same car. If the difference is larger, we consider them two separate cars. To detect more specific vehicle types, traditional machine vision methods such as background subtraction can be combined with the YOLOv3 algorithm or the Cascade Classifier method.
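The matching rule above can be sketched as a counter over per-frame detections. Boxes are `(x, y, w, h)` and box centres are compared. The region of interest is an axis-aligned rectangle `(x1, y1, x2, y2)`, which simplifies the lines drawn in Figure 7(a):

```python
def count_vehicles(frames_boxes, roi):
    """Count distinct cars over a sequence of frames.

    frames_boxes: one list of (x, y, w, h) car boxes per frame.
    A box inside the ROI is the same car as one in the previous frame when its
    centre moved less than max(w, h) pixels in both x and y; otherwise it is new."""
    x1, y1, x2, y2 = roi
    prev, count = [], 0
    for boxes in frames_boxes:
        current = []
        for x, y, w, h in boxes:
            cx, cy = x + w / 2, y + h / 2
            if not (x1 <= cx <= x2 and y1 <= cy <= y2):
                continue                        # outside the region of interest
            limit = max(w, h)
            same = any(abs(cx - px) < limit and abs(cy - py) < limit for px, py in prev)
            if not same:
                count += 1                      # first sighting of this car
            current.append((cx, cy))
        prev = current
    return count
```

Under this rule, one previous car can match several current boxes. A one-to-one assignment, as in the centroid tracking algorithm described in Section IV, avoids that.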

Conclusion

This research designs a classification system that identifies objects as specific types of vehicles. The YOLOv3 algorithm identifies cars and counts the vehicles passing along a specific road, using traffic videos as input. The system's detection rate depends on the scale factor value, since different values yield different rates. To obtain a high detection rate, the scale factor value that gives the classifier its best performance should be determined. Building skillful and robust vehicle detection systems will remain a challenging task in this field. In this paper, we have built a vehicle detection and classification system that combines the YOLOv3 algorithm with OpenCV to detect and classify objects. The YOLOv3 model has limitations: when many vehicles are close to each other in an image, or when vehicle target sizes vary, it misses detections and mislocates objects. These errors further reduce the accuracy of traffic flow statistics and predictions. This study established a high-definition vehicle object dataset from the perspective of surveillance cameras and proposed an object detection and tracking method for highway surveillance video scenes. Extracting the highway road surface area gave a more effective detection area. Using the YOLOv3 object detection algorithm, we obtained an end-to-end highway vehicle detection model trained on the annotated highway vehicle object dataset. To address small-object detection and the multi-scale variation of objects, the road surface area was divided into a remote area and a proximal area. The two road areas of each frame were detected sequentially, which gave good vehicle detection results across the monitored field.

References

[1] Punam Patel and Shamik Tiwari, "Flame Detection using Image Processing Techniques," International Journal of Computer Applications, Vol. 58, No. 18, 2016.

[2] J. E. Gamer, C. E. Lee and L. R. Huang, "Infrared Detectors for Counting, Classifying, and Weighing Vehicles," Center for Transportation Research, The University of Texas at Austin, 3-10-88/0-1162, 1990.

[3] Ankit Kumar Singh and Nemi Chand Bajiya, "Image Vehicle Classification Based on Adaptive Edge Detection & PCA Method," International Journal of Advancement in Engineering Technology, Management & Applied Science, Vol. 2, Issue 8, August 2015.

[4] Susmita A. Meshram and A. V. Malviya, "Traffic Surveillance by Counting and Classification of Vehicles from Video using Image Processing," International Journal of Advance Research in Computer Science and Management Studies, Vol. 1, Issue 6, November 2013.

[5] Jyotsna Tripathi, Kavita Chaudhary, Akansha Joshi and J. B. Jawale, "Automatic Vehicle Counting and Classification," International Journal of Innovative and Emerging Research in Engineering, Vol. 2, Issue 4, 2015.

[6] Susmita A. Meshram and A. V. Malviya, "Traffic Surveillance by Counting and Classification of Vehicles from Video using Image Processing," International Journal of Advance Research in Computer Science and Management Studies, Vol. 1, Issue 6, November 2013.

Copyright

Copyright © 2022 Bhagya S Hadagalimath, Ayesha Siddiqui, Dr. Suvarna Nandyal. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET48404

Publish Date : 2022-12-26

ISSN : 2321-9653

Publisher Name : IJRASET
