Ijraset Journal For Research in Applied Science and Engineering Technology


Efficient Deep CNN-Based Fire Detection and Localization in Video Surveillance

Authors: Prof. Manisha Khadse, Sanket Nikam, Chandradeep Patil, Abhishek Nigade, Akash Wagh

DOI Link: https://doi.org/10.22214/ijraset.2022.43347


Abstract

Accidents caused by undetected fires have cost the world a great deal of money, and the demand for effective fire detection systems is rising. Existing fire and smoke detectors often fail because of their inefficiency. Analyzing live camera data allows fire to be detected in real time. Flame features are investigated, and fire is recognized using edge detection and thresholding methods, resulting in a fire detection model that identifies hazardous fires based on their size, velocity, volume, and texture. In this paper, we propose a fire detection system based on a Convolutional Neural Network (CNN). Experimental results on our dataset show that the model has good fire detection capability and can detect multi-scale fires in real time.


I. INTRODUCTION

Fires have become more common as society has progressed and humans have become more reliant on fire. The greatest danger of a rapidly spreading fire is that it directly threatens human lives as well as the structures humans have built. According to available data, burning forests are among the major contributors to rising global temperatures. A large number of people died in China alone in 2019 as a result of indoor fires. These figures point to the seriousness of the problem and the importance of fire detection.

In past years, fire detection methods have relied heavily on sensors such as weather and smoke sensors. However, this approach may miss a distant flame, a fire in a high-dome environment, or a fire in its early stages. With the advent of deep learning, video-based fire detection methods have been in growing demand in recent years.

Visual object detection is widely used to alert the responsible management in open environments as early as possible. Vision-based fire detection technologies have attracted researchers' attention because of the limitations of previously proposed fire detection systems and the progress in visual object processing technologies. Vision-based fire detection offers three key advantages over traditional methods: availability, controllability, and immediacy. First, cameras give the visual detection system access to the monitored areas, allowing security guards to assess the situation in real time. Second, controllability: recorded footage can be collected and reviewed after a fire has occurred. Third, immediacy is ensured by an efficient method with a low computational cost.

II. LITERATURE REVIEW

The authors of paper [1] proposed an image-based fire detection technique built on a support vector machine (SVM). First, the inter-frame difference approach is used to detect the motion region, which is treated as the suspected fire area. The probable fire region is then re-sampled using a scene categorization algorithm. Next, texture and color moment features of the suspected fire area are extracted. Finally, these feature values are fed as feature vectors into a trained SVM, which classifies each region as fire or non-fire. The experimental findings show that the approach removes the need to set flame characteristic thresholds manually and achieves higher accuracy than a traditional flame identification algorithm. However, the algorithm still overlooks or misclassifies some phenomena.
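The first stages of the pipeline in paper [1] can be sketched as follows. Inter-frame difference yields a motion mask, and per-channel color moments (mean, standard deviation, skewness) are computed over the masked pixels. This is a minimal, standard-library-only illustration that assumes tiny RGB frames stored as nested lists and a hypothetical difference threshold of 30. Texture features, scene re-sampling, and the SVM itself are omitted. The 9-value vector is the kind of input a trained SVM would receive.

```python
"""Sketch: inter-frame difference for a motion mask, then color-moment features."""
import math


def motion_mask(prev, curr, threshold=30):
    """Mark pixels whose summed absolute RGB change between frames exceeds `threshold`."""
    return [
        [sum(abs(a - b) for a, b in zip(p, c)) > threshold for p, c in zip(row_p, row_c)]
        for row_p, row_c in zip(prev, curr)
    ]


def color_moments(frame, mask):
    """First three color moments (mean, std, skewness) per RGB channel over masked pixels."""
    pixels = [px for row_f, row_m in zip(frame, mask) for px, m in zip(row_f, row_m) if m]
    if not pixels:
        return [0.0] * 9  # no motion: return an all-zero feature vector
    features = []
    for ch in range(3):
        values = [px[ch] for px in pixels]
        n = len(values)
        mean = sum(values) / n
        std = math.sqrt(sum((v - mean) ** 2 for v in values) / n)
        third = sum((v - mean) ** 3 for v in values) / n
        # skewness moment = signed cube root of the third central moment
        skew = math.copysign(abs(third) ** (1 / 3), third)
        features.extend([mean, std, skew])
    return features
```

Usage: `color_moments(curr, motion_mask(prev, curr))` turns each suspected fire region into a fixed-length vector, which is what makes a standard SVM classifier applicable.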

The authors of paper [2] studied several problems in forest fire detection: detecting fires in forest areas, detecting fires at an early stage, and reducing erroneous detections. They applied classical object detection approaches to forest fire detection for the first time. SSD provides better real-time performance, higher fire detection accuracy, and the potential to detect fires earlier. To reduce false alarms, they built a system that uses both smoke and fire and introduced new adjustments. They also modified the tiny-yolo structure of YOLO and proposed a new structure. Experiments show that tiny-yolo-voc1 improves fire detection accuracy. The work is useful for forest safety and monitoring.

The authors of paper [3] built a benchmark based on the YOLOv4 algorithm and developed a fire detection model using the Darknet deep learning framework. For training and testing, they employed a large self-built fire dataset that includes multi-scale fires. According to their findings, their model outperformed others in fire detection. The research thus developed an improved CNN-based YOLOv4 fire detection algorithm. It uses an automated fire detection dataset to increase model accuracy and a modified loss function to improve small-scale flame detection. It also adopts Soft-NMS to better suppress redundant bounding boxes and to improve the low recall rate.
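Soft-NMS, which paper [3] combines with YOLOv4, decays the scores of overlapping boxes instead of discarding them. The sketch below shows the Gaussian form of Soft-NMS in plain Python, assuming boxes given as (x1, y1, x2, y2) corners. The values `sigma = 0.5` and the 0.001 score floor are common illustrative choices, not settings reported by [3], and [3] may use a different variant.

```python
import math


def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def soft_nms(boxes, scores, sigma=0.5, score_threshold=0.001):
    """Gaussian Soft-NMS: decay overlapping scores instead of deleting the boxes.

    Returns (box, rescored_score) pairs in the order they were selected.
    """
    remaining = list(zip(boxes, scores))
    kept = []
    while remaining:
        # select the currently highest-scoring box
        best_idx = max(range(len(remaining)), key=lambda i: remaining[i][1])
        best_box, best_score = remaining.pop(best_idx)
        kept.append((best_box, best_score))
        # decay every other score by exp(-IoU^2 / sigma); drop boxes that fall below the floor
        rescored = []
        for box, score in remaining:
            score *= math.exp(-(iou(best_box, box) ** 2) / sigma)
            if score >= score_threshold:
                rescored.append((box, score))
        remaining = rescored
    return kept
```

Unlike hard NMS, a heavily overlapping second box survives with a reduced score. This is how Soft-NMS can help recall when several nearby flames overlap.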

The authors of paper [4] proposed a fire detection approach that uses gas-leak concentration to anticipate explosions and fires. The approach comprises a fire predictor and a fire appearance detector. Fire detection is one of the features of a smart home, and detecting a house fire early is a critical step in preventing a large-scale fire and protecting property. The fire predictor displays the gas-leak concentration and sounds an alarm. The fire detectors are classified using a fuzzy approach. The output of the simulation system can be sent to MFC, but the MFC reader cannot interpret it in real time.
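Paper [4] classifies with a fuzzy approach but does not give its membership functions here. As a hypothetical sketch only, the code below maps a gas-leak concentration to fuzzy "safe", "warning", and "danger" levels using trapezoidal membership functions. The ppm breakpoints, the labels, and the function names are invented for illustration.

```python
import math


def trapezoid(x, a, b, c, d):
    """Trapezoidal membership: rises on [a, b], equals 1 on [b, c], falls on [c, d]."""
    if x < a or x > d:
        return 0.0
    if b <= x <= c:
        return 1.0
    if x < b:
        return (x - a) / (b - a)
    return (d - x) / (d - c)


# Hypothetical fuzzy sets over gas concentration in ppm (NOT taken from paper [4]).
FUZZY_SETS = {
    "safe": (0, 0, 200, 400),
    "warning": (200, 400, 600, 800),
    "danger": (600, 800, math.inf, math.inf),
}


def classify_gas(ppm):
    """Return (label, memberships); the label is the set with the highest membership."""
    memberships = {name: trapezoid(ppm, *params) for name, params in FUZZY_SETS.items()}
    label = max(memberships, key=memberships.get)
    return label, memberships
```

The fuzzy sets overlap, so a reading between two levels (for example 350 ppm) belongs partly to both. Here it is 0.25 "safe" and 0.75 "warning", which is what distinguishes this from a hard threshold.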

The authors of paper [5] used Raspberry Pi hardware and software to compensate for the deficiencies of standard fire detectors and to improve the reliability of fire detection. The paper uses YOLOv3, a lightweight direct-regression detection technique, to build a compact system that identifies ship fires in local video. The resulting RPi-Fire system achieves high precision and recall in video testing and fire simulation, meeting the requirements of ship fire detection.

The authors of paper [6] described and assessed a variety of fire detection technologies, implementing the different strategies in MATLAB. Because current fire detection systems appear to underperform, they propose combining fire detection algorithms to achieve high efficiency and a low false alarm rate. Such models can detect fire in any setting, compute the intensity and direction of the fire accurately, and respond quickly. The system also requires less hardware, making it both more cost-effective and dependable.

According to the comparison study, this color model achieved a recognition rate of 99.88 percent. It could be improved further by also taking smoke into account for early fire detection.

The authors of paper [8] proposed contextual object detection, arguing that the relationships among the areas in which smoke is detected are the most important factor in fire-alarm accuracy. To classify target objects, the framework efficiently combines specific moments with graphical approaches. To represent the scene adequately, it models objects by their size and by their relative positions with respect to smoky regions. It is nearly impossible to detect a fire and raise an alert from a single observed smoke location, so contextual object detection is used to filter out non-disaster situations. However, because smoke-filled conditions vary widely, ambiguous scenarios remain a challenge.

The authors of paper [9] proposed a fire recognition approach based on the centroid variation of consecutive frames, which exploits the flickering characteristic of flames. Compared with the standard flame recognition method, it is simpler, faster, and more versatile, and it can exclude a wide range of non-fire interference sources. Because it uses a standard camera, this type of fire detection has considerable practical utility.
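Paper [9] relies on how a flame's centroid varies across consecutive frames. One way to quantify this is the spread (population standard deviation) of the frame-to-frame centroid displacement of a binary fire mask. The sketch below does that under our own assumptions. The exact statistic used in [9] is not given here, and `min_variation = 0.2` pixels is an arbitrary illustrative threshold.

```python
import math
import statistics


def centroid(mask):
    """Centroid (row, col) of the True pixels in a binary mask, or None if empty."""
    points = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not points:
        return None
    n = len(points)
    return (sum(r for r, _ in points) / n, sum(c for _, c in points) / n)


def centroid_variation(masks):
    """Population std. dev. of the frame-to-frame centroid displacement."""
    centroids = [c for c in (centroid(m) for m in masks) if c is not None]
    steps = [math.hypot(r2 - r1, c2 - c1)
             for (r1, c1), (r2, c2) in zip(centroids, centroids[1:])]
    return statistics.pstdev(steps) if len(steps) >= 2 else 0.0


def looks_like_flame(masks, min_variation=0.2):
    """Flicker heuristic: a flame's centroid jitters irregularly, whereas a static
    object, or one moving at constant speed, gives near-zero variation."""
    return centroid_variation(masks) > min_variation
```

Using the spread of the displacement rather than the displacement itself is what lets a steadily moving object (for example, a passing red car) be rejected while flickering flames are kept.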

The authors of paper [10] evaluated an aerial-based forest fire detection approach using detailed videos of forest fires in varied situations. To improve the detection rate, the motion features are first identified and then refined to highlight the burning region. Second, smoke is extracted using the proposed technique. In real forest fire detection use, the system demonstrates its robustness with a high accuracy rate. Motion vectors are computed using optical flow and then clustered with DBSCAN to represent the existing motion pattern models. The resulting classes are matched to the motion pattern models using similarity and entropy criteria, which helps detect irregular motion in traffic.
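Paper [10] clusters optical-flow motion vectors with DBSCAN. Computing the optical flow itself is out of scope here, so the sketch below takes hand-made (dx, dy) motion vectors as input and runs a plain O(n²) DBSCAN over them. The values of `eps` and `min_pts` and the sample vectors are assumptions, and the later similarity and entropy matching step is not shown.

```python
def dbscan(points, eps, min_pts):
    """Plain DBSCAN over 2-D points. Returns one cluster label per point; -1 is noise."""
    NOISE = -1
    labels = [None] * len(points)  # None = not yet visited

    def neighbours(i):
        # all points (including i itself) within distance eps of point i
        xi, yi = points[i]
        return [j for j, (xj, yj) in enumerate(points)
                if (xi - xj) ** 2 + (yi - yj) ** 2 <= eps ** 2]

    cluster = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbours(i)
        if len(seeds) < min_pts:
            labels[i] = NOISE  # may later be re-labelled as a border point
            continue
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point: joins the cluster but is not expanded
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_seeds = neighbours(j)
            if len(j_seeds) >= min_pts:  # core point: keep expanding through its neighbours
                queue.extend(j_seeds)
        cluster += 1
    return labels
```

For example, vectors drifting right around (1, 0) and left around (-1, 0) form two motion patterns, while a single large outlier vector is labelled -1. DBSCAN needs no preset number of clusters, which suits scenes where the number of motion patterns is unknown.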

| Reference | Technique Used | Contributions | Advantages | Disadvantages |
|---|---|---|---|---|
| [1] Ke Chen, Yanying Cheng | Gabor filters are used to create facial features. | Research on Support Vector Machines for Image Detection | Provides early notice, allowing guards to be alerted. | The effectiveness of facial recognition is limited by poor image quality. |
| [2] Shixiao Wu, Libing Zhang | Techniques based on gray-scale images | Uses object detection for real-time fire detection | The system architecture provides good performance and cost-effectiveness. | The camera must be of decent quality. |
| [3] Huang Hongyu, Kuang Ping | Algorithm for detecting scarves | Improved detection using a neural network | An effective tool for improving the security system's performance. | Facial recognition is more difficult with smaller image sizes. |
| [4] Oxsy Giandi, Riyanarto Sarno | Motion edges to B-spline contour edges | Prototype of a fire symptom detection system | Attempts to eliminate the possibility of ATM theft-related fraud. | Facial recognition technology may be limited by data processing and storage. |
| [5] Wu Benxiang, Luo Ningzhao, Jiang Feng, Yang Feng | Compact fire detection system (RPi-Fire) | Fire detection system developed for ship fires | Uses kinematic constraints to resolve ambiguity. | Tolerates errors of only up to 30 pixels in the 2D pose localization. |
| [6] Sneha Wilson, Shyni P Varghese | R-CNN | A comprehensive study on fire detection | Detects handguns in real time. | Detects only handguns as weapons. |
| [7] Plamen Zahariev, Georgi Hristov, Diyana Kinaneva | MQTT nodes configured with TLS connections | Uses a neural network to detect fire at an early stage | Works well for detecting abnormalities in crowded traffic. | Noise caused errors, which led to short-duration detections being discarded. |
| [8] Huanhuan Bao, Hang Ji, Dengyin Zhang, Xuan Zhao | Stacked Hourglass module | Contextual object detection for smoke detection | Instead of one giant encoder-decoder network, stacked HG (Hourglass) modules each produce a full heat map for joint prediction. | Interactions between people cause complex spatial interference through occlusion of individual parts by clothing, contact, and limb articulation, which makes part association difficult. |
| [9] Bu Leping, Hou Xinguo, Wang Teng | Fire color model based on RGB-HIS | Frame-based detection method using centroid variation | Simplicity, speed, and strong adaptability. | Lower precision on rough images. |
| [10] Hieu Trung, Hanh Ngoc | Surveillance, performance, vision, evaluation | Evaluation of aerial videos for detection | The proposed solution takes advantage of both CNNs and vision-based recognition. | The temporal interval is very short, so long-term modeling of temporal correlation is not very effective. |

III. RESEARCH WORK

This study investigated the performance of the fire detection model on a large database covering various scene conditions. The model achieved an accuracy of 93.97%, with false alarm and miss rates of 7.08% and 6.86%, respectively. The study proposes an additional smoke detection stage to enhance the model's performance when thick smoke covers nearly all of the fire. This stage segments pixels using both the color and motion characteristics of smoke, and it is shown to be effective in detecting the fire area.
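The accuracy, false-alarm, and miss figures above are ratios of confusion-matrix counts. The study does not spell out its definitions, so the sketch below assumes the usual ones. The function name is hypothetical, and the counts used to exercise it are invented for illustration.

```python
def detection_rates(tp, fp, tn, fn):
    """Accuracy, false-alarm rate and miss rate from confusion-matrix counts.

    Assumed (common) definitions, not confirmed by the study:
      accuracy    = (TP + TN) / all samples
      false alarm = FP / (FP + TN)   non-fire samples wrongly flagged as fire
      miss rate   = FN / (FN + TP)   fire samples the detector failed to flag
    """
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total,
        "false_alarm_rate": fp / (fp + tn),
        "miss_rate": fn / (fn + tp),
        "precision": tp / (tp + fp),
        "recall": tp / (tp + fn),
    }
```

With invented counts TP = 90, FP = 5, TN = 95, FN = 10, this gives an accuracy of 0.925, a false-alarm rate of 0.05, and a miss rate of 0.1. With these definitions the miss rate is simply 1 - recall, so moving the decision threshold trades missed fires against false alarms.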

First, fire-like regions were detected using the RGB-HIS model. The centroid movement of these regions across video frames was then calculated, and a new fire detection method was developed on that basis. Experimental results indicate that the method is effective. A series of experiments demonstrates that it can exclude the influence of common interferences and trigger fire alarms in a timely manner, which gives the proposed system practical significance for indoor fire detection.
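The RGB-HIS color stage above can be illustrated with a per-pixel rule. The sketch assumes a rule of a kind common in color-based flame detection: the red channel must exceed a threshold, R >= G > B must hold, and the HSI saturation must stay above a floor that relaxes as red brightness rises. The thresholds `r_threshold = 125` and `s_threshold = 0.2` are assumptions, not the values of the cited work.

```python
def hsi_saturation(r, g, b):
    """HSI saturation in [0, 1]: S = 1 - 3 * min(R, G, B) / (R + G + B)."""
    total = r + g + b
    return 0.0 if total == 0 else 1 - 3 * min(r, g, b) / total


def is_fire_pixel(r, g, b, r_threshold=125, s_threshold=0.2):
    """Rule-based fire-color test combining RGB ordering with HSI saturation.

    r_threshold and s_threshold are illustrative assumptions.
    """
    if r <= r_threshold or not (r >= g > b):
        return False
    # the saturation floor shrinks as R approaches 255, so bright flame cores still pass
    return hsi_saturation(r, g, b) >= (255 - r) * s_threshold / r_threshold


def fire_candidate_mask(frame):
    """Apply the pixel rule to every (R, G, B) pixel of a frame given as nested lists."""
    return [[is_fire_pixel(*px) for px in row] for row in frame]
```

The resulting mask gives the candidate fire regions whose centroid movement is then tracked frame by frame. The saturation term rejects dull, nearly gray reddish pixels that pass the RGB ordering alone.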

The system was trained and tested on our custom-built dataset, which consists of images collected from the Internet and manually labeled as fire or smoke. Compared with methods based on state-of-the-art architectures, our system performed better, and experimental results on real-world data indicate that it is superior in both performance and complexity.

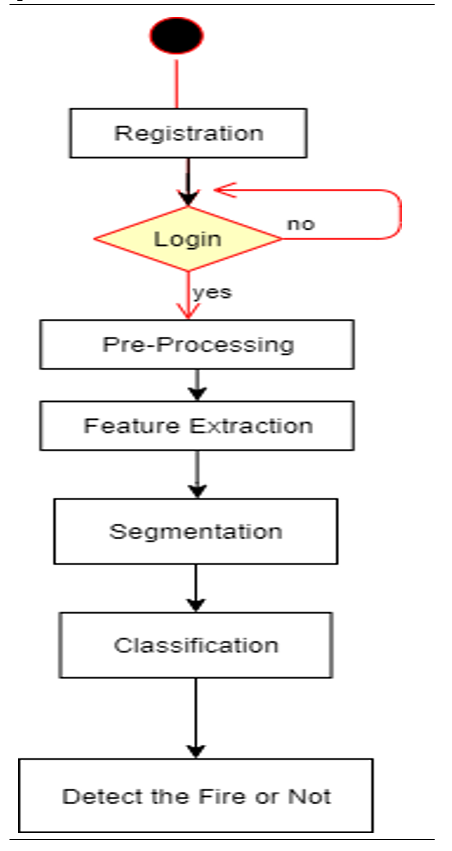

A. Outline

The working model of our system comprises the following steps:

- Collecting footage and converting it into useful information/data

- Acquiring and analyzing the data

- Deciding whether fire activity is present

- Triggering an alert via email to a registered address
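The four outline steps can be sketched end to end, assuming steps 1 and 2 already yield a per-frame fire probability from some classifier. In this minimal sketch, `decide_fire`, `build_alert`, `run_pipeline`, the 0.5 threshold, the three-hit rule, and the example.com addresses are all hypothetical. The alert is only composed with Python's standard `email` package, not sent.

```python
from email.message import EmailMessage


def decide_fire(scores, threshold=0.5, min_hits=3):
    """Step 3 (hypothetical rule): declare fire when at least `min_hits`
    per-frame fire probabilities reach `threshold`, as a guard against
    single-frame false alarms."""
    return sum(s >= threshold for s in scores) >= min_hits


def build_alert(camera_id, recipient, sender="alerts@example.com"):
    """Step 4: compose (but do not send) the alert email for a registered address."""
    msg = EmailMessage()
    msg["Subject"] = f"Fire detected on camera {camera_id}"
    msg["From"] = sender
    msg["To"] = recipient
    msg.set_content(f"The fire detector flagged activity on camera {camera_id}.")
    return msg


def run_pipeline(frame_scores, camera_id, recipient):
    """Decide on a batch of per-frame scores; return an alert message or None."""
    if decide_fire(frame_scores):
        return build_alert(camera_id, recipient)
    return None
```

In a deployment, the returned message could be passed to `smtplib.SMTP(host).send_message(msg)`. The SMTP host and credentials are deployment-specific and are not described in the paper.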

B. Capturing Video And Turning It Into Usable Information

The video is captured by an IP camera connected to the remote host. Once recorded, the video is processed and broken down into frames, which are fed to the machine learning models. During data cleaning, a vocabulary of the unique terms that appear in all of the dataset's image captions is produced and saved to disk. The resulting data is then passed to the next phase.

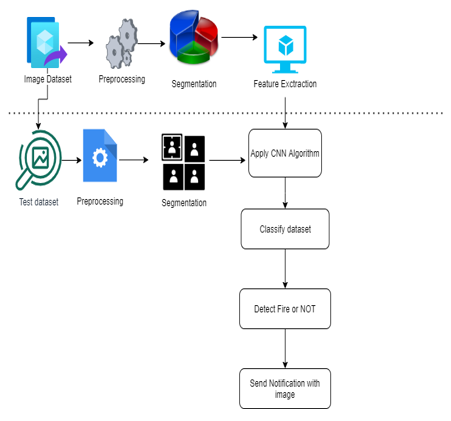

The figure above shows the complete process, from image capture to fire detection and the resulting alert message.

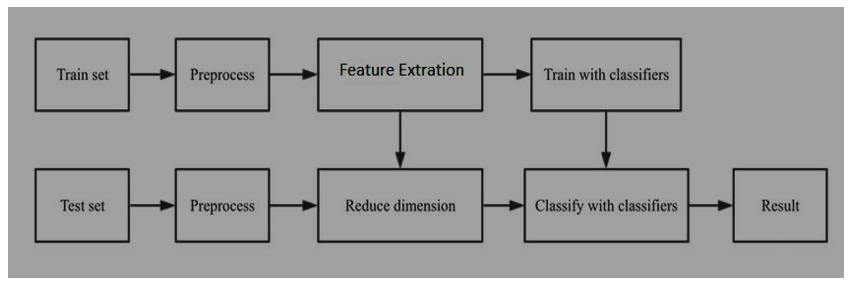

- Preprocessing: All raw data from the captured image frames is collected and transformed before use; this step is called preprocessing. For example, training a CNN directly on the raw captured photos does not give good classification results.

- Feature Extraction: A CNN uses a feature extraction network to extract attributes from the input image, and a second neural network uses the extracted features to classify the image.

- Segmentation: R-CNN (Regions with CNN features) is a real-world application of region-based approaches. It performs semantic segmentation on the basis of object detection results: R-CNN uses selective search to extract a large number of object proposals and then computes CNN features for each one.
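The feature-extraction step can be made concrete with a single convolution, ReLU, and max-pooling stage on a grayscale image, sketched below using only the standard library. The 2x2 vertical-edge kernel is hand-picked for illustration. In a trained CNN the kernels are learned, and many such stages are stacked before the classifier network.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2-D cross-correlation, the operation CNN 'convolution' layers compute."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + m][j + n] * kernel[m][n] for m in range(kh) for n in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(fmap):
    """Element-wise ReLU: negative responses are set to zero."""
    return [[max(0, v) for v in row] for row in fmap]


def max_pool2x2(fmap):
    """2x2 max pooling with stride 2 (a trailing odd row/column is dropped)."""
    return [
        [max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
         for j in range(0, len(fmap[0]) - 1, 2)]
        for i in range(0, len(fmap) - 1, 2)
    ]


def extract_features(image, kernel):
    """One conv -> ReLU -> pool stage, flattened into a feature vector for a classifier."""
    pooled = max_pool2x2(relu(conv2d(image, kernel)))
    return [v for row in pooled for v in row]
```

For a 5x5 image whose right three columns are bright, the kernel `[[-1, 1], [-1, 1]]` responds only at the dark-to-bright edge, and `extract_features` returns `[2, 0, 2, 0]`.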

C. Data Flow Diagram

This diagram shows how the data is used at each step. It is first taken as input and preprocessed; the CNN algorithm is then applied to it, after which fire can be detected. Along the way, the data is converted into different formats, for example from video to frames.

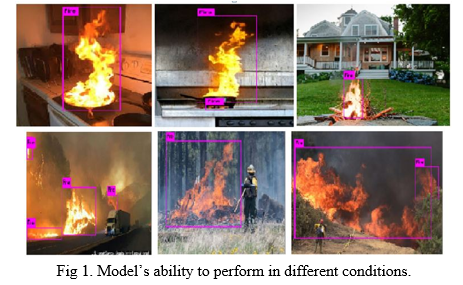

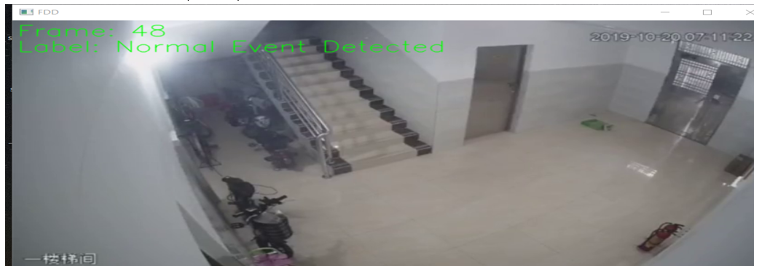

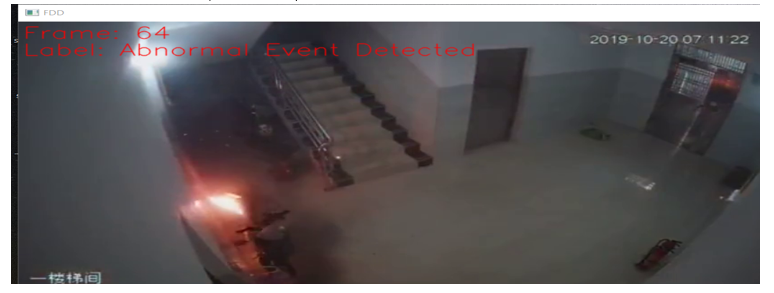

IV. OUTPUT RESULTS AFTER PROCESSING EACH FRAME ONE BY ONE

1. When a normal event is detected (no fire)

2. When an abnormal event is detected (fire detected)

A. Preprocessing of Dataset

- Dataset: Only a limited number of fire videos are available on the Internet, with a limited choice of scenes, flame sizes, and video quality. We therefore built a high-quality fire dataset of 10,000 images, which we used to train and test the model to improve its generalisation and robustness. In addition to the original public fire dataset, we collected many high-quality multi-scale fire images from the Internet. We also extracted frames from flame videos recorded in a number of test scenarios to improve the model's detection rate for small-scale fires and its performance in complicated environments. We used the labelImg tool to label our self-built fire dataset. Compared with crowd-sourced fire labels, we strove to unify the labelling criteria and achieve high-quality flame labels as far as possible to improve the accuracy of our model. Of the 10,000 fire images in the dataset, 8,400 are in the training set and 1,600 in the testing set. The dataset covers a variety of scenes, ranging from simple to complex backgrounds, small-scale to large-scale fires, single to multiple objects, indoor to outdoor environments, and day and night illumination, which helps the improved YOLOv4 model proposed in this paper generalise well.

- Training Details: Data augmentation in this experiment is based on the Mosaic and CutMix techniques. Our fire detection model was trained on two Nvidia GTX 1080Ti GPUs using the SGD optimizer with the following settings: input size 608 × 608, batch size 128, maximum iterations 60,000, learning rate 0.001, momentum 0.949, and weight decay 0.0005. The learning rate is reduced to 0.0001 after 40,000 iterations and further to 0.00001 after 55,000 iterations. Training took around four days.

- Results: To verify how well our method detects multi-scale (especially small-scale) flames in different complex scenes, we tested our trained fire detection model on multiple real and experimental fire videos. The self-built fire detection dataset was categorized by flame scale, measured as the proportion of the image occupied by the flame, into small, medium, large, and extra-large samples. Some of the prediction results are shown below.
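labelImg can save annotations either as Pascal VOC XML or as YOLO text files, and Darknet trains on the YOLO format. The paper does not state which format it used. The sketch below therefore assumes Pascal VOC pixel boxes that must be converted to YOLO lines (class, then center x, center y, width, height, each normalised by the image size), with a single "fire" class as id 0. `voc_to_yolo`, `split_dataset`, and the fixed shuffle seed are illustrative assumptions; only the 8,400/1,600 counts come from the dataset description above.

```python
import random


def voc_to_yolo(box, img_w, img_h, class_id=0):
    """Convert a Pascal VOC box (xmin, ymin, xmax, ymax) in pixels to a YOLO label line.

    YOLO/Darknet labels are 'class x_center y_center width height', normalised to [0, 1].
    """
    xmin, ymin, xmax, ymax = box
    x_c = (xmin + xmax) / 2 / img_w
    y_c = (ymin + ymax) / 2 / img_h
    w = (xmax - xmin) / img_w
    h = (ymax - ymin) / img_h
    return f"{class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


def split_dataset(paths, n_train, seed=0):
    """Shuffle reproducibly with a fixed seed, then split into (train, test) lists."""
    shuffled = list(paths)
    random.Random(seed).shuffle(shuffled)
    return shuffled[:n_train], shuffled[n_train:]
```

For a 640 × 480 image with a flame box from (100, 200) to (300, 400), `voc_to_yolo` returns `"0 0.312500 0.625000 0.312500 0.416667"`. Calling `split_dataset(paths, 8400)` on 10,000 image paths yields disjoint training and testing lists of 8,400 and 1,600 images.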
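The learning-rate settings in the training details describe a step schedule. A minimal sketch follows. The function name is hypothetical, and treating each drop as taking effect exactly at iteration 40,000 and 55,000 is an assumption.

```python
def learning_rate(iteration, base_lr=0.001, steps=(40_000, 55_000), scale=0.1):
    """Step schedule: multiply the learning rate by `scale` at each boundary in `steps`.

    Defaults mirror the reported settings (0.001 -> 0.0001 -> 0.00001); whether
    the drop happens at or just after each boundary is an assumption.
    """
    lr = base_lr
    for step in steps:
        if iteration >= step:
            lr *= scale
    return lr
```

In Darknet, this kind of schedule is normally expressed in the `[net]` section of the model cfg with `policy=steps`, `steps=40000,55000`, and `scales=.1,.1`. The paper does not show its cfg, so this mapping is an assumption.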

Conclusion

This paper proposed a new network architecture based on the YOLOv4 algorithm and used the Darknet deep learning framework to develop a fire detection model. For training and evaluation, we used a large self-built fire dataset that includes multi-scale fire images. According to the results, our approach outperforms others in fire detection. Finally, an aerial-based forest fire identification system was evaluated using a large database of videos of forest fires under various scene conditions. The chromatic and motion features of a forest fire are first extracted to increase the detection rate, and rules are then applied to refine them and locate the fire area. Second, smoke is extracted using the proposed technique to overcome the problem of thick smoke covering the fire.

References

[1] A. A. A. Alkhatib, “Smart and Low Cost Technique for Forest Fire Detection using Wireless Sensor Network,” Int. J. Comput. Appl., vol. 81, no. 11, pp. 12–18, 2013.

[2] J. Zhang, W. Li, Z. Yin, S. Liu, and X. Guo, “Forest fire detection system based on wireless sensor network,” 2009 4th IEEE Conf. Ind. Electron. Appl. ICIEA 2009, pp. 520–523, 2009.

[3] A. A. A. Alkhatib, “A review on forest fire detection techniques,” Int. J. Distrib. Sens. Netw., vol. 2014, no. March, 2014.

[4] P. Skorput, S. Mandzuka, and H. Vojvodic, “The use of Unmanned Aerial Vehicles for forest fire monitoring,” in 2016 International Symposium ELMAR, 2016, pp. 93–96.

[5] F. Afghah, A. Razi, J. Chakareski, and J. Ashdown, Wildfire Monitoring in Remote Areas using Autonomous Unmanned Aerial Vehicles. 2019.

[6] Hanh Dang-Ngoc and Hieu Nguyen-Trung, “Evaluation of Forest Fire Detection Model using Video captured by UAVs,” presented at the 2019 19th International Symposium on Communications and Information Technologies (ISCIT), 2019, pp. 513–518.

[7] C. Kao and S. Chang, “An Intelligent Real-Time Fire-Detection Method Based on Video Processing,” IEEE 37th Annu. 2003 Int. Carnahan Conf. on Security Technol. 2003 Proc., 2003.

[8] N. I. Binti Zaidi, N. A. A. Binti Lokman, M. R. Bin Daud, H. Achmad, and K. A. Chia, “Fire recognition using RGB and YCbCr color space,” ARPN J. Eng. Appl. Sci., vol. 10, no. 21, pp. 9786–9790, 2015.

[9] C. E. Premal and S. S. Vinsley, “Image Processing Based Forest Fire Detection using YCbCr Colour Model,” Int. Conf. Circuit Power Comput. Technol. ICCPCT, vol. 2, pp. 87–95, 2014.

[10] C. Ha, U. Hwang, G. Jeon, J. Cho, and J. Jeong, “Vision-based fire detection algorithm using optical flow,” Proc. 2012 6th Int. Conf. Complex Intell. Softw. Intensive Syst. CISIS 2012, pp. 526–530, 2012.

[11] K. Poobalan and S. Liew, “Fire Detection Algorithm Using Image Processing Techniques,” Proceeding 3rd Int. Conf. Artif. Intell. Comput. Sci., no. December, pp. 12–13, 2015.

[12] Innovative Mechanisms for Industry Applications (ICIMIA) (pp. 335-339). IEEE.

Copyright

Copyright © 2022 Prof. Manisha Khadse, Sanket Nikam, Chandradeep Patil, Abhishek Nigade, Akash Wagh. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET43347

Publish Date : 2022-05-26

ISSN : 2321-9653

Publisher Name : IJRASET
