Ijraset Journal For Research in Applied Science and Engineering Technology

Face Biometric Authentication System for ATM using Deep Learning

Authors: Dayana R, Abarna E, Saranya I, Swetha P

DOI Link: https://doi.org/10.22214/ijraset.2022.44413

Abstract

Automated Teller Machines (ATMs) are now used by almost everyone, and there is an urgent need to improve security in the banking sector. With the sharp increase in criminal activity, ATMs have become insecure. ATM systems today use no more than an access card and a PIN for identity verification. Recent progress in biometric identification techniques, including fingerprinting, retina scanning, and facial recognition, offers a way to remedy this unsafe situation at the ATM. This project proposes an ATM security model that combines a physical access card with electronic facial recognition using a Deep Convolutional Neural Network. If this technology becomes widely used, users' faces would be protected as well as their accounts. When an unrecognized user attempts a transaction, a Face Verification Link is generated and sent to the account holder, so that the identity of the unauthorized user can be verified remotely through dedicated artificial intelligence agents. Because a person's biometric features cannot easily be replicated, this proposal will go a long way toward solving the problem of account safety, making it possible for only the actual account owner to access the account.

Introduction

I. INTRODUCTION

A. ATM: Overview

Automated Teller Machines, popularly referred to as ATMs, are one of the most useful advancements in the banking sector. ATMs allow banking customers to carry out quick self-service transactions, such as cash withdrawals, deposits, and fund transfers, without the help of a teller and without having to visit a bank branch. Most ATM transactions require a debit or credit card, although some need no card.

B. Motivation

ATM fraud has become very common. Criminals design skimming and card-trapping devices for ATMs, cards are used by persons other than their owners, and PINs are stolen through shoulder-surfing attacks. Thus, there is a dire need for a system that protects consumers from fraud and other breaches of security.

C. Scope

Face recognition can secure ATM transactions by authenticating the user as the card owner. Financial fraud is a serious problem for banks, and the information currently stored on the magnetic stripe of an ATM card is vulnerable to theft or loss. In a face-based ATM login process, ATMs equipped with face recognition technology recognize the human face during a transaction. When “shoulder surfers” try to peek over the cardholder’s shoulder to obtain the PIN as it is entered, the ATM automatically reminds the cardholder to be cautious. If the user wears a mask or sunglasses, the ATM refuses service until the covering is removed.

Touchless - There is no need to remember passwords. Simply looking at the ATM camera logs the card holder in instantly. No physical contact is needed.

Secure - Since your face is your password, there is no need to worry about a password being forgotten or stolen. In addition, the face recognition engine locks access to the account and transaction pages when the card holder moves away from the ATM camera and another face appears.

Face-based card holder authentication can be used as a primary measure or as a secondary measure along with the ATM PIN. It prevents ATM fraud committed with a fake card and stolen PIN, or with a stolen card. Face verification is embedded with security features to prevent fraud, including liveness-detection technology that detects and blocks the use of photographs, videos or masks during the verification process.

D. Objective

Aim: The objective of this project is to propose the combination of a Face Recognition System for the authentication process with an unknown-face forwarder URL, thereby enhancing security in the banking sector. The system is proposed to provide more security at the ATM, to prevent unauthorized access using the Face Verification Link, and to prevent theft and other criminal activities.

E. Methodology

ATM Simulator is a next-generation testing application for XFS-based ATMs (also known as Advanced Function or Open-Architecture ATMs). It is a web technology that allows ATM testing with a virtualized version of any ATM. ATM Simulator uses virtualization to provide realistic ATM simulation, coupled with automation for faster, more efficient testing of face authentication and the Unknown Face Forwarder technique.

II. RELATED WORKS

A. Face Recognition Module

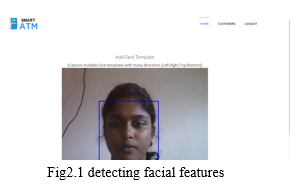

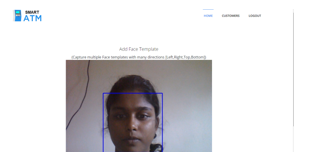

- Face Enrollment: This module begins by registering a few frontal face templates of the bank beneficiary. These templates then become the reference for evaluating and registering templates for the other poses: tilting up/down, moving closer/further, and turning left/right.

- Face Image Acquisition: Cameras are deployed in the ATM to capture the relevant video. The camera is interfaced with a computer; a webcam is used here.

- Frame Extraction: Frames are extracted from the video input. The video must be divided into a sequence of images for further processing. The rate at which the video is divided into images depends on the implementation; typically, 20-30 frames per second are taken and sent to the next phases.

- Pre-processing: Face image pre-processing comprises the steps taken to format images before they are used for model training and inference. The steps are:

  - Read image

  - RGB to grayscale conversion

  - Resize image: original size (360, 480, 3), given as (width, height, number of RGB channels); resized to (220, 220, 3)

  - Remove noise (denoise): the image is smoothed with a Gaussian blur to remove unwanted noise

  - Binarization: the grayscale image is converted to black-and-white, reducing the information in the image from 256 shades of grey to 2 (black and white), i.e. a binary image
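The pre-processing steps above can be sketched in plain Python on an image held as nested lists. This is only an illustrative sketch, not the authors' implementation: the luma weights, the nearest-neighbour resize, the 3x3 Gaussian kernel, the fixed threshold of 128, and all function names are assumptions. After grayscale conversion the sketch works on a single channel.

```python
# Illustrative pre-processing sketch (assumed helper names and parameters).
# Input: image as rows of (R, G, B) tuples with values in 0..255.

def to_gray(rgb):
    """Convert RGB to grayscale using the common luma weights (an assumption)."""
    return [[int(round(0.299 * r + 0.587 * g + 0.114 * b)) for (r, g, b) in row]
            for row in rgb]

def resize_nearest(img, new_h, new_w):
    """Resize a single-channel image with nearest-neighbour sampling."""
    h, w = len(img), len(img[0])
    return [[img[y * h // new_h][x * w // new_w] for x in range(new_w)]
            for y in range(new_h)]

GAUSS_3X3 = [[1, 2, 1], [2, 4, 2], [1, 2, 1]]  # integer weights, sum = 16

def gaussian_blur(img):
    """Smooth with a 3x3 Gaussian kernel; border pixels are replicated."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    acc += GAUSS_3X3[dy + 1][dx + 1] * img[yy][xx]
            out[y][x] = acc // 16
    return out

def binarize(img, threshold=128):
    """Map every pixel to 0 (black) or 255 (white)."""
    return [[255 if p >= threshold else 0 for p in row] for row in img]

def preprocess(rgb, size=(220, 220), threshold=128):
    """Grayscale -> resize to size=(rows, cols) -> denoise -> binarize."""
    gray = to_gray(rgb)
    small = resize_nearest(gray, size[0], size[1])
    smooth = gaussian_blur(small)
    return binarize(smooth, threshold)
```

In practice an image library would replace these loops; the sketch only makes the order and effect of each step explicit.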

III. FACE DETECTION

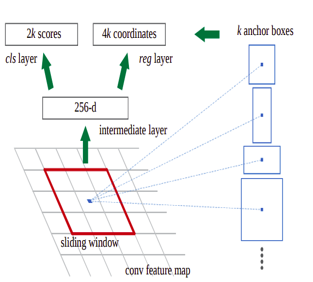

In this module, a Region Proposal Network (RPN) generates Regions of Interest (RoIs) by sliding windows over the feature map through anchors with different scales and aspect ratios. The face detection and segmentation method is based on an improved RPN: the RPN generates the RoIs, and RoIAlign faithfully preserves their exact spatial locations. The anchors provide a predefined set of bounding boxes of different sizes and ratios that serve as references when first predicting object locations for the RPN.

A. Face Image Segmentation Using Region Growing (RG) Method

The region growing methodology and recent related work of region growing are described here.

RG is a simple image segmentation method based on region seeds. It is classified as a pixel-based image segmentation method since it involves the selection of initial seed points. This approach examines the neighbouring pixels of the initial “seed points” and determines, based on certain conditions, whether each neighbour should be added to the region. In a normal region growing technique, the neighbouring pixels are examined using only the “intensity” constraint: a threshold is set for the intensity value, and the neighbouring pixels that satisfy this threshold are selected for growing the region.

B. RPN

A Region Proposal Network, or RPN, is a fully convolutional network that simultaneously predicts object bounds and objectness scores at each position. The RPN is trained end-to-end to generate high-quality region proposals. It works on the feature map (the output of the CNN), and each point of this map is called an anchor point. For each anchor point, 9 anchor boxes (the combinations of different sizes and ratios) are placed over the image. These anchor boxes are centered at the point in the image that corresponds to the anchor point of the feature map.
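A minimal sketch of how 9 anchor boxes per anchor point could be generated is given below. The three scales (128, 256, 512), the three aspect ratios (0.5, 1, 2) and the feature-map stride of 16 are assumptions taken from common Faster R-CNN settings, not values stated in this paper; the function names are hypothetical.

```python
import math

def anchors_at(cx, cy, scales=(128, 256, 512), ratios=(0.5, 1.0, 2.0)):
    """Return 9 boxes (x1, y1, x2, y2) centred at image point (cx, cy).

    Each box has area scale**2 and height/width equal to ratio.
    """
    boxes = []
    for s in scales:
        for r in ratios:
            w = s / math.sqrt(r)
            h = s * math.sqrt(r)
            boxes.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return boxes

def all_anchors(feat_h, feat_w, stride=16):
    """Anchor boxes for every feature-map position, in image coordinates."""
    out = []
    for fy in range(feat_h):
        for fx in range(feat_w):
            # Centre of the image patch that this feature-map point covers.
            cx = fx * stride + stride / 2
            cy = fy * stride + stride / 2
            out.extend(anchors_at(cx, cy))
    return out
```

For a feature map of height H and width W this yields 9 x H x W anchors, which is why only a sampled subset is used during training.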

C. Training of RPN

Each location of the feature map has 9 anchor boxes, so the total number is very large, but not all of them are relevant. An anchor box that contains an object or part of an object is referred to as foreground, and an anchor box that contains no object is referred to as background.

For training, a label is assigned to each anchor box based on its Intersection over Union (IoU) with the ground truth. Each anchor box receives one of three labels (1, -1, 0).

Label = 1 (Foreground): An anchor receives label 1 under either of the following conditions:

If the anchor has the highest IoU with a ground-truth box.

If its IoU with a ground-truth box is greater than 0.7 (IoU > 0.7).

Label = -1 (Background): An anchor is assigned -1 if IoU < 0.3.

Label = 0: Anchors that fall under neither of the above conditions do not contribute to training and are ignored.

After the labels are assigned, a mini-batch of 256 randomly picked anchor boxes is created, all taken from the same image.

The ratio of positive to negative anchor boxes in the mini-batch should be 1:1, but if there are fewer than 128 positive anchor boxes, the mini-batch is padded with negative anchor boxes.

The RPN can then be trained end-to-end by backpropagation and stochastic gradient descent (SGD).
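The labelling and sampling rules above can be sketched as follows. The sketch uses the label convention of this section (1 foreground, -1 background, 0 ignored); the function names and the box format (x1, y1, x2, y2) are assumptions made for illustration.

```python
import random

def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def label_anchors(anchors, gt_boxes, hi=0.7, lo=0.3):
    """Assign 1 (foreground), -1 (background) or 0 (ignored) to each anchor."""
    best = [max(iou(a, g) for g in gt_boxes) for a in anchors]
    labels = [1 if v > hi else (-1 if v < lo else 0) for v in best]
    # The anchor(s) with the highest IoU for each ground-truth box
    # are also foreground, even if that IoU is below `hi`.
    for g in gt_boxes:
        ious = [iou(a, g) for a in anchors]
        top = max(ious)
        if top > 0:
            for i, v in enumerate(ious):
                if v == top:
                    labels[i] = 1
    return labels

def sample_minibatch(labels, batch_size=256, rng=random):
    """Pick up to batch_size/2 positives, then fill the rest with negatives."""
    pos = [i for i, l in enumerate(labels) if l == 1]
    neg = [i for i, l in enumerate(labels) if l == -1]
    pos = rng.sample(pos, min(len(pos), batch_size // 2))
    neg = rng.sample(neg, min(len(neg), batch_size - len(pos)))
    return pos, neg
```

When fewer than 128 positives exist, `batch_size - len(pos)` grows accordingly, which implements the padding with negative anchors.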

The processing steps of the region growing method are:

- Select the initial seed point.

- Take the neighbouring pixels as candidates, subject to the intensity threshold.

- Check each neighbouring pixel against the threshold.

- Pixels that satisfy the threshold are selected for growing the region.

- Iterate the process until all regions are complete.
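The region growing steps can be sketched as a breadth-first traversal from the seed. This is a simplified illustration under stated assumptions: a single seed, 4-connected neighbours, and a neighbour accepted when its intensity differs from the seed intensity by at most the threshold. The function name is hypothetical.

```python
from collections import deque

def region_grow(img, seed, threshold):
    """Grow a region from seed=(row, col) over a single-channel image.

    A 4-connected neighbour joins the region when its intensity is within
    `threshold` of the seed intensity. Returns the set of (row, col) pixels.
    """
    h, w = len(img), len(img[0])
    seed_val = img[seed[0]][seed[1]]
    region = {seed}
    queue = deque([seed])
    while queue:
        y, x = queue.popleft()
        for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
            inside = 0 <= ny < h and 0 <= nx < w
            if inside and (ny, nx) not in region:
                if abs(img[ny][nx] - seed_val) <= threshold:
                    region.add((ny, nx))
                    queue.append((ny, nx))
    return region
```

Pixels with a similar intensity that are not connected to the seed through accepted neighbours stay outside the region, which is what separates region growing from plain thresholding.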

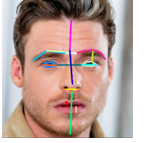

IV. FEATURE EXTRACTION

After face detection, the face image is given as input to the feature extraction module to find the key features that will be used for classification. For each pose, the facial information, including the eyes, nose and mouth, is automatically extracted and then used to calculate the effects of the variation in relation to the frontal face templates.

A. Face Features

- Forehead Height: distance between the top edge of eyebrows and the top edge of forehead.

- Middle Face Height: distance between the top edge of eyebrows and nose tip.

- Lower Face Height: distance between nose tip and the baseline of chin.

- Jaw Shape: A number to differentiate between jaw shapes. This number can be replaced by the output of a face shape recognition model, if one is used.

- Left Eye Area

- Right Eye Area

- Eye to Eye Distance: distance between eyes (closest edges)

- Eye to Eyebrow Distance: distance between eye and eyebrow (left or right is determined by which side of the face is turned more toward the camera)

- Eyebrows Distance: horizontal distance between eyebrows

- Eyebrow Shape Detector 1: The angle between 3 points (eyebrow left edge, eyebrow center, eyebrow right edge), to differentiate between (Straight | non-straight) eyebrow shapes

- Eyebrow Shape Detector 2: A number to differentiate between (Curved | Angled) eyebrow shapes.

- Eyebrow Slope

- Eye Slope Detector 1: A method to calculate the slope of the eye. It is the slope of the line between the eye's center point and the eye's edge point. This detector is used to represent 3 types of eye slope (Upward, Downward, Straight).

- Eye Slope Detector 2: Another method to calculate the slope of the eye. It is the difference on the Y-axis between the eye's center point and the eye's edge point. This detector is not a 'mathematical' slope, but a number that can be clustered into 3 types of eye slope (Upward, Downward, Straight).

- Nose Length

- Nose Width: width of the lower part of the nose

- Nose Arch: Angle of the curve of the lower edge of the nose (longer nose = larger curve = smaller angle)

- Upper Lip Height

- Lower Lip Height
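Several of the features listed above reduce to distances, angles and slopes between facial landmark points. The sketch below shows how a few of them could be computed from (x, y) landmark coordinates; the landmark names in the dictionary are hypothetical, and how the landmarks are detected is outside the sketch.

```python
import math

def distance(p, q):
    """Euclidean distance between two (x, y) points."""
    return math.hypot(q[0] - p[0], q[1] - p[1])

def angle_at(a, b, c):
    """Angle in degrees at vertex b formed by the points a-b-c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    norm = math.hypot(*v1) * math.hypot(*v2)
    cos = max(-1.0, min(1.0, dot / norm))  # guard against rounding error
    return math.degrees(math.acos(cos))

def slope(p, q):
    """Slope of the line through p and q (infinite for a vertical line)."""
    dx = q[0] - p[0]
    return float("inf") if dx == 0 else (q[1] - p[1]) / dx

def face_features(lm):
    """Compute a few of the listed features from a landmark dictionary."""
    return {
        "eye_to_eye_distance": distance(lm["left_eye_inner"], lm["right_eye_inner"]),
        "lower_face_height": abs(lm["chin"][1] - lm["nose_tip"][1]),
        "eyebrow_shape_1": angle_at(lm["brow_left_edge"], lm["brow_center"],
                                    lm["brow_right_edge"]),
        "eye_slope_1": slope(lm["eye_center"], lm["eye_edge"]),
        "eye_slope_2": lm["eye_edge"][1] - lm["eye_center"][1],
    }
```

An eyebrow angle close to 180 degrees would indicate a straight eyebrow, while smaller angles indicate a curved or angled one.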

B. Gray Level Co-occurrence Matrix

GLCM is a second-order statistical texture analysis method. It examines the spatial relationship among pixels and defines how frequently a combination of pixel values occurs in an image in a given direction Θ and at a distance d. Each image is quantized into 16 gray levels (0–15), and 4 GLCMs (M), one each for Θ = 0, 45, 90, and 135 degrees with d = 1, are obtained. From each GLCM, five features (Eq. 13.30–13.34) are extracted, giving 20 features for each image. Each feature is normalized to the range 0 to 1 before being passed to the classifiers, and each classifier receives the same set of features.
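The GLCM construction described above can be sketched as follows. The quantization to 16 levels, the four directions and d = 1 follow the text; the symmetric, normalized form of the matrix is an assumption. The five features of Eq. 13.30–13.34 are not reproduced in this paper, so the sketch computes four commonly used GLCM statistics (contrast, energy, homogeneity, entropy) purely as an illustration, not as the paper's feature set.

```python
import math

# (dy, dx) pixel offsets for each direction at distance d = 1.
OFFSETS = {0: (0, 1), 45: (-1, 1), 90: (-1, 0), 135: (-1, -1)}

def quantize(img, levels=16, max_val=255):
    """Map intensities 0..max_val to gray levels 0..levels-1."""
    return [[min(p * levels // (max_val + 1), levels - 1) for p in row]
            for row in img]

def glcm(q, angle, levels=16, d=1):
    """Symmetric, normalized co-occurrence matrix of a quantized image."""
    dy, dx = OFFSETS[angle]
    dy, dx = dy * d, dx * d
    h, w = len(q), len(q[0])
    m = [[0.0] * levels for _ in range(levels)]
    for y in range(h):
        for x in range(w):
            yy, xx = y + dy, x + dx
            if 0 <= yy < h and 0 <= xx < w:
                i, j = q[y][x], q[yy][xx]
                m[i][j] += 1
                m[j][i] += 1
    total = sum(map(sum, m))
    if total:
        m = [[v / total for v in row] for row in m]
    return m

def glcm_features(m):
    """Four illustrative texture statistics of a normalized GLCM."""
    contrast = energy = homogeneity = entropy = 0.0
    for i, row in enumerate(m):
        for j, p in enumerate(row):
            contrast += (i - j) ** 2 * p
            energy += p * p
            homogeneity += p / (1 + (i - j) ** 2)
            if p > 0:
                entropy -= p * math.log2(p)
    return {"contrast": contrast, "energy": energy,
            "homogeneity": homogeneity, "entropy": entropy}

def min_max(values):
    """Normalize a list of feature values to the range 0 to 1."""
    lo, hi = min(values), max(values)
    return [0.0 if hi == lo else (v - lo) / (hi - lo) for v in values]
```

Computing `glcm_features(glcm(q, a))` for each of the four angles and concatenating the results gives one texture vector per image, which would then be normalized per feature across the data set with `min_max`.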

The features we extracted can be grouped into three categories. The first category is the first-order statistics, which include maximum intensity, minimum intensity, mean, median, 10th percentile, 90th percentile, standard deviation, variance of intensity values, energy, entropy, and others. These features characterize the gray-level intensity of the face region.

V. FACE IDENTIFICATION AND VERIFICATION

DCNN algorithms were created to automatically detect and reject improper face images during the enrolment process. This ensures proper enrolment and therefore the best possible performance.

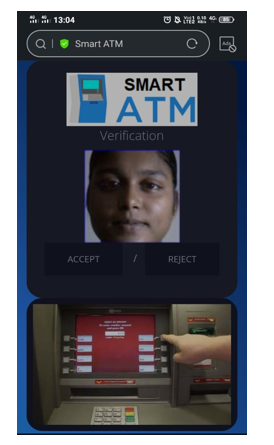

The CNN creates feature maps by convolving the vector-valued input to a given layer with a bank of filters (kernels) and summing the results. A non-linear rectified linear unit (ReLU) is then used to compute the activations of the convolved feature maps. The new feature map obtained from the ReLU is normalized using local response normalization (LRN). The normalized output is then downsampled using a spatial pooling strategy (maximum or average pooling). Dropout regularization then randomly sets some activations to zero during training; this most often takes place within the fully connected layers before the classification layer. Finally, a softmax activation function is used to classify image labels at the output of the fully connected layers.

After the face image is captured by the ATM camera, it is passed to the face detection module, which detects the image regions that are likely to be human faces. After face detection using the Region Proposal Network (RPN), the face image is given as input to the feature extraction module to find the key features that will be used for classification. The module composes a very short feature vector that is sufficient to represent the face image. Here, this is done with the DCNN: with the help of a pattern classifier, the extracted features of the face image are compared with those stored in the face database. The face image is then classified as either known or unknown. If the face is known, the corresponding card holder is identified and the transaction proceeds.
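The known/unknown decision described above can be illustrated with a simple matching sketch. The paper does not specify the pattern classifier, so cosine similarity against enrolled templates with a fixed threshold of 0.8 is an assumption made here, and the function and variable names are hypothetical.

```python
import math

def cosine_similarity(u, v):
    """Cosine similarity between two feature vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0

def identify(probe, database, threshold=0.8):
    """Match a probe feature vector against enrolled templates.

    `database` maps a card holder id to an enrolled feature vector.
    Returns (holder_id, score) for a known face, or (None, score) when the
    best score is below `threshold`, i.e. the face is treated as unknown.
    """
    best_id, best_score = None, -1.0
    for holder, template in database.items():
        score = cosine_similarity(probe, template)
        if score > best_score:
            best_id, best_score = holder, score
    if best_score >= threshold:
        return best_id, best_score
    return None, best_score
```

In a card-based flow the probe would normally be compared only with the templates of the inserted card's owner; an unknown result is the point at which the Face Verification Link would be triggered.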

VI. FUTURE SCOPE

In the future, the recognition performance should be further boosted by designing novel deep feature representation schemes.

VII. RESULT

Biometrics as a means of identifying and authenticating account owners at Automated Teller Machines provides the needed and much-anticipated solution to the problem of illegal transactions. In this project, we have developed a solution to the much-dreaded issue of fraudulent ATM transactions using biometrics and the Unknown Face Forwarder, so that a transaction is possible only when the account holder is physically present or approves it remotely. This eliminates cases of illegal transactions at ATM points without the knowledge of the authentic owner.

Conclusion

Using a biometric feature for identification is strong, and it is further fortified when another is used at the authentication level. The ATM security design incorporates the possible proxy usage of the existing security tools (such as the ATM card) and information (such as the PIN) into the existing ATM security mechanisms. It involves the bank account owner, in real time, in all available and accessible transactions.

Copyright

Copyright © 2022 Dayana R, Abarna E, Saranya I, Swetha P. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET44413

Publish Date : 2022-06-17

ISSN : 2321-9653

Publisher Name : IJRASET
