Ijraset Journal For Research in Applied Science and Engineering Technology

Gesture Vocalizer Using Flex Sensor and Software Visualization

Authors: Challa Rishitha, Mallikanti Bhavana, Pendem Sriya, Dr. Y. Sreenivasulu

DOI Link: https://doi.org/10.22214/ijraset.2022.43737

Abstract

Most people communicate with one another by voice. Unfortunately, not everyone is able to speak and hear. Sign language is the means of communication used within the community of people with speech and hearing impairments. It is a gestural representation that combines hand shapes with movement of the hands, arms or body and with facial expressions. People who cannot speak use sign language to communicate with other speech-impaired people and with hearing people who know sign language; otherwise an interpreter is needed to translate the signs for those who can speak but do not understand them. However, an interpreter cannot always be present, and not everyone can learn sign language. An alternative is to use a device, the Gesture Vocalizer, as a mediator. The Gesture Vocalizer takes gestures as input from the speech-impaired person and processes them into text and audio output. The hardware version of the Gesture Vocalizer is a multi-microcontroller-based system designed to facilitate communication among the speech-impaired, deaf and blind communities and between them and the general public. The system can be flexibly adapted to function as a "smart device". In this project we present a gesture vocalizer based on a microcontroller and sensors. The gesture vocalizer is essentially a hand glove and a microcontroller system.

Introduction

DECLARATION

We, CHALLA RISHITHA, MALLIKANTI BHAVANA and PENDEM SRIYA, bearing Roll No. 18311A04R2, 18311A04U8 and 18311A04V3 respectively, hereby certify that the dissertation entitled GESTURE VOCALIZER USING FLEX SENSOR AND SOFTWARE VISUALIZATION, carried out under the guidance of DR. Y. SREENIVASULU, Associate Professor of ECE, is submitted to the Department of ECE in partial fulfilment of the requirements for the award of the degree of B. Tech in ECE. This is a record of bona fide work carried out by us, and the results embodied in this dissertation have not been reproduced or copied from any source. The results embodied in this dissertation have not been submitted to any other University or Institute for the award of any other degree.

ACKNOWLEDGEMENT

We would like to thank our guide, Dr. Y. Sreenivasulu, Associate Professor, for his constant guidance, support and motivation throughout the period in which this course work was carried out. His readiness for consultation at all times, his educative comments and his assistance even with practical matters have been invaluable. We are thankful that he gave us the freedom to do the work with our own ideas.

We express our sincere gratitude to Prof. S.P.V. Subba Rao, Head of the Department of ECE, for helping us carry out this project and for his support throughout the period of our study at SNIST.

We also thank our Principal, Dr. T.Ch. Siva Reddy, for his guidance, support and motivation throughout the period of our B. Tech course work.

We are also thankful to all the teaching and non-teaching staff of our department who have rendered their co-operation in the completion of this report.

We cannot close these prefatory remarks without expressing our thankfulness and reverence to the authors of the various papers we have used and referred to in order to complete this report. We also thank our parents, friends and well-wishers who aided us in its completion.

I. INTRODUCTION

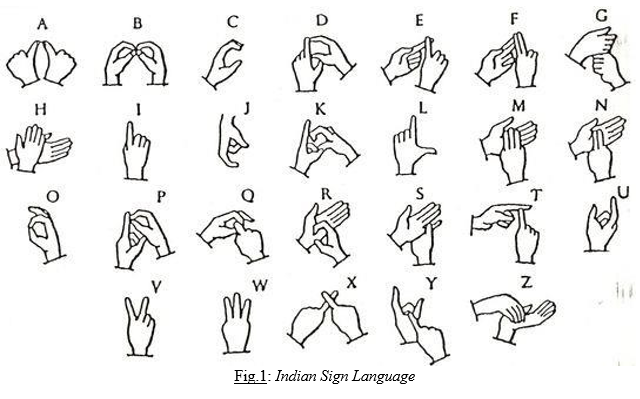

A. Deaf-Dumb Hand Gestures

In India, there are 22 official languages and 415 living languages. When it comes to communicating between villages, groups, and states, such variation in languages poses a barrier. Indian Sign Language (ISL) is one of these living languages and is used by the Deaf and Mute communities in India. Of the 21.9 million people with disabilities in India, nearly 1.3 million have a hearing impairment. Previously, India's educational system was based on the oral-aural approach. The situation is improving as Indian Sign Language comes into wider use.

Sign languages are languages that use the visual-manual modality to convey meaning. They are expressed through a combination of manual signs and non-manual features. Sign languages are distinct natural languages with their own syntax and lexicon. This means that sign languages are not universal or mutually comprehensible, despite the obvious parallels between them. It is uncertain how many sign languages are now in use around the world. Each country generally has its own native sign language, and some countries have several. Basic terms: this category covers the terms used for our fundamental needs, such as alphabets (A, B, C, ...), numbers (1, 2, 3, ...), days (Sunday, Monday, ...), months (January, February, ...), etc.

B. Gesture Vocalizer

Most people use their voice to interact and communicate with one another. Unfortunately, not everyone is able to speak and hear. Sign language is the means of communication used within the community of people who cannot speak or hear. It is a gestural representation that simultaneously combines hand shapes, orientation and movement of the hands, arms or body, and facial expressions to convey a speaker's thoughts fluidly. People who cannot speak use sign language to communicate with other speech-impaired people and with hearing people who know sign language; otherwise a translator is needed to interpret the signs for those who can speak but do not know sign language.

The communication gap between people with speech and hearing impairments and other people is one of the main obstacles they face. Deaf and mute persons have a hard time communicating with others. This enormous challenge makes them uneasy, and they feel they are being discriminated against in society. An interpreter cannot always be present to translate sign language, and not everyone can learn it. Because of this breakdown in communication, deaf and mute people feel that they cannot communicate and therefore cannot convey their feelings. An alternative is to use a computer or a smartphone as a mediator. The HGRVC (Hand Gesture Recognition and Voice Conversion) technology detects and tracks the hand movements of deaf and mute people so that they can communicate with others. The computer or smartphone takes input from the speech-impaired person and produces both text and audio output.

II. GESTURE VOCALIZER IMPLEMENTATION

A. Gesture Vocalizer Implementation

Gesture Vocalizer is a technology that aims to improve communication between the dumb, deaf, and blind groups, as well as between them and the general public. This system can be adjusted to serve as a "smart device" on the go.

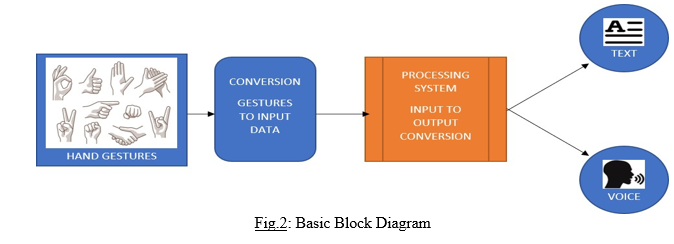

In a broad sense, the hand movements performed by the deaf, mute or hearing-impaired person have to be converted into spoken language that people without the impairment can understand. The person's hands and the gestures made with them are the basis of the project. The gestures are captured by the computer as input and processed to produce the output. The output takes two forms: speech (voice) and text. The speech output can also be obtained from the text through text-to-speech conversion.

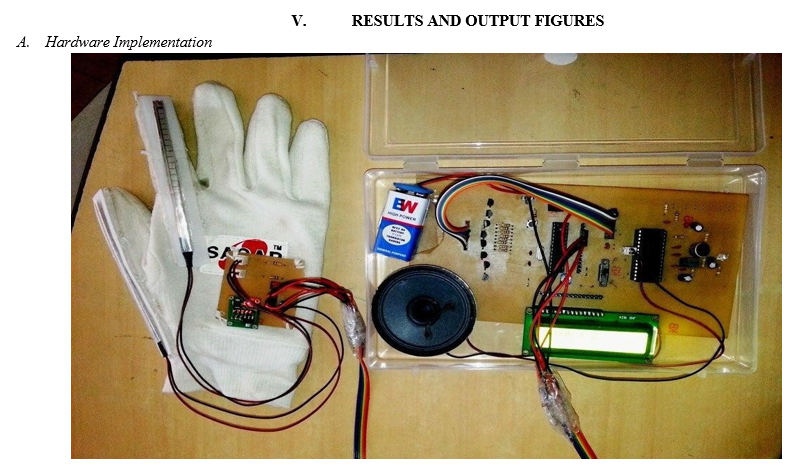

B. Hardware Version of the Implementation

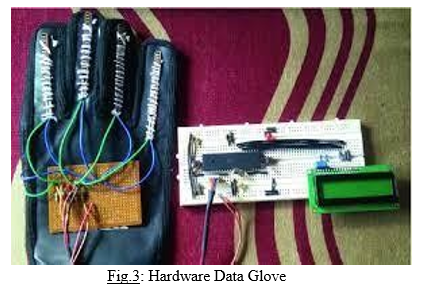

The hardware version of the implementation is intended to narrow the communication gap between speech-impaired people and others. The speech-impaired person wears a data glove while signing; the gestures are converted into voice so that other people can easily understand them, and they are also shown on an LCD so that people can read them on the screen. The magic glove, or data glove, is simply a glove to which the flex sensors and other components are connected. It converts gestures to voice by recognizing the movement of the fingers. It consumes little power and is flexible for users. Anyone can use this system with basic knowledge.

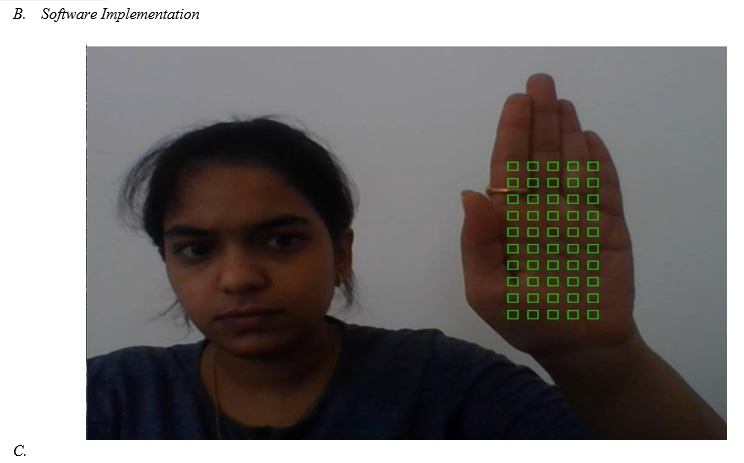

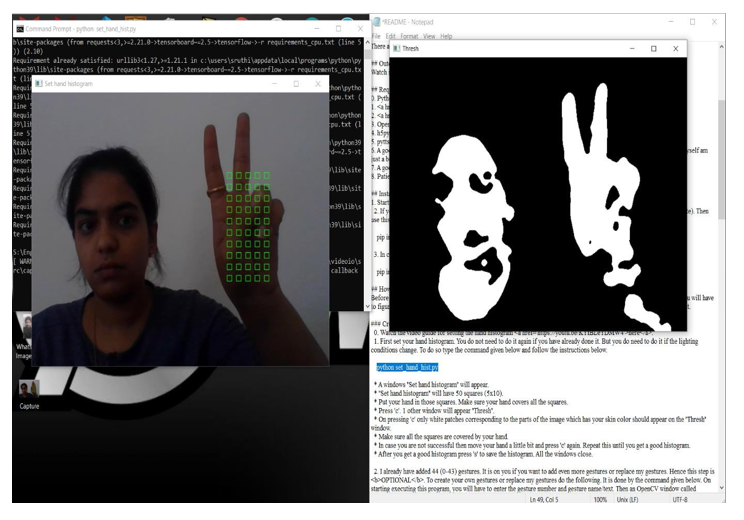

C. Software Version of the Implementation

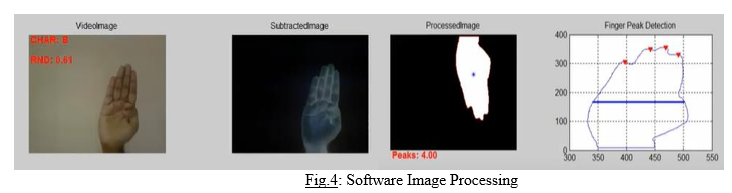

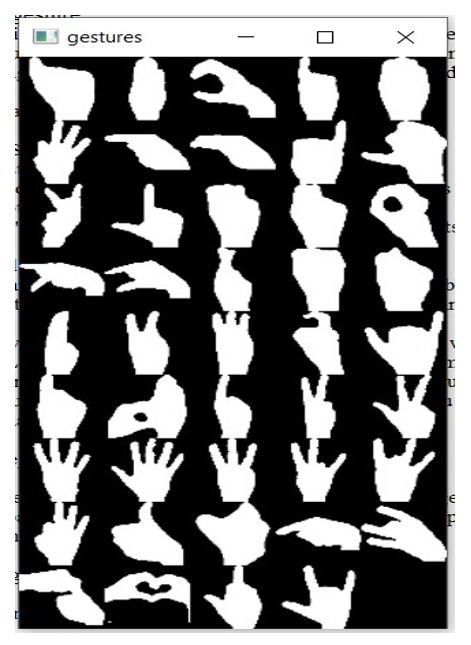

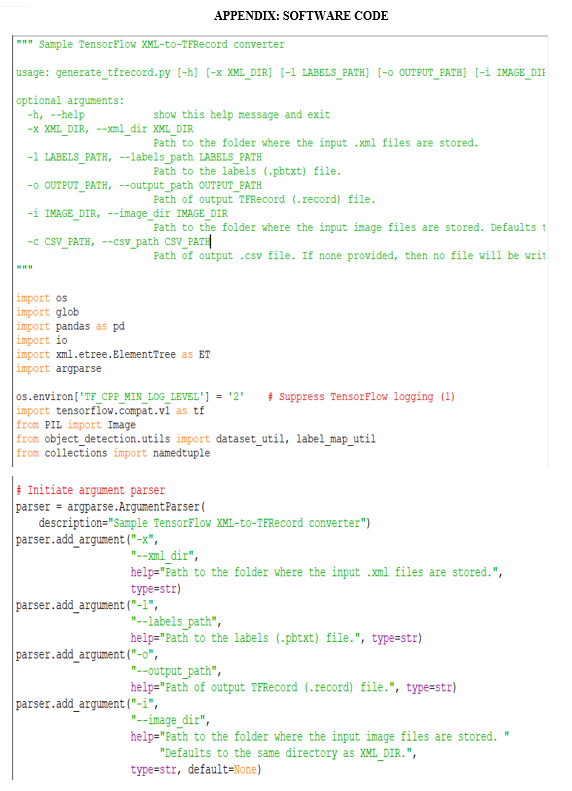

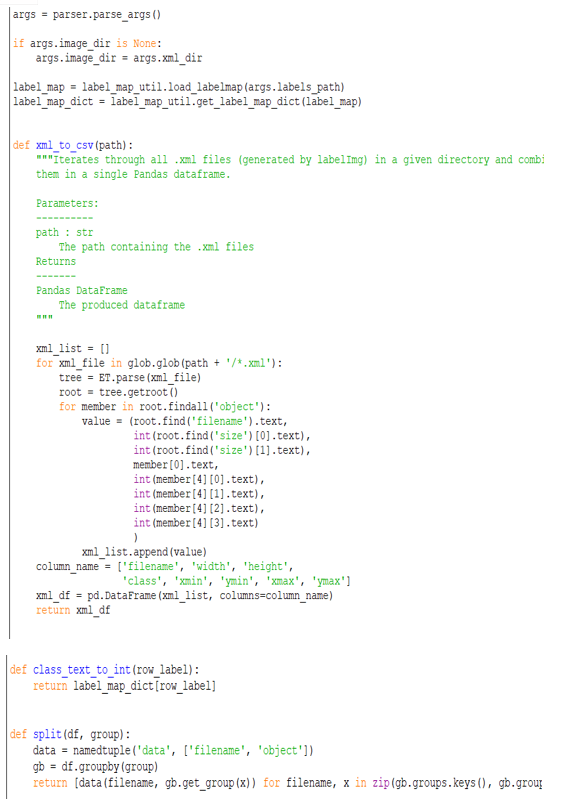

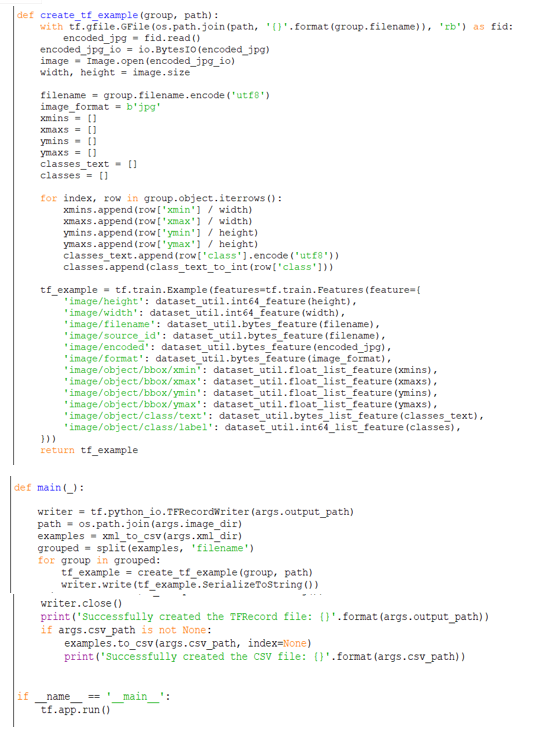

In the software version of the implementation, we perform hand gesture recognition using image processing. Hand gesture recognition is critical for human-computer interaction. In this work, we present a real-time technique for hand gesture recognition. The hand region is extracted from the background using the background subtraction method. The palm and fingers are then segmented so that the fingers can be detected and recognised. Finally, a rule classifier is used to predict the hand gesture label. The gesture is compared with a dataset of 'n' images, and the sign it represents is identified and displayed on the screen. Capturing 'n' images and forming a dataset is a complex task, and different datasets have to be used to recognise different gestures.
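The background subtraction step mentioned above can be illustrated with a minimal sketch. This is not the authors' implementation: the function name `subtract_background`, the difference threshold of 30 and the tiny 3x4 grayscale frames are assumptions chosen only to show the idea of marking pixels that differ from a stored background image.

```python
def subtract_background(frame, background, threshold=30):
    """Return a binary mask: 1 where the frame differs from the background."""
    mask = []
    for frame_row, bg_row in zip(frame, background):
        mask.append([1 if abs(f - b) > threshold else 0
                     for f, b in zip(frame_row, bg_row)])
    return mask


# Hypothetical 3x4 grayscale images (values 0-255).
# The bright pixels in the middle columns stand in for the hand.
background = [[10, 12, 11, 10],
              [10, 11, 12, 10],
              [11, 10, 10, 12]]
frame = [[10, 200, 210, 10],
         [12, 205, 198, 11],
         [11, 10, 190, 12]]

hand_mask = subtract_background(frame, background)
for row in hand_mask:
    print(row)
```

In a real system the frames would come from the web camera, and the resulting mask would be passed on to the palm and finger segmentation stage.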

III. LITERATURE SURVEY

1. The paper “Real-Time Hand Gesture Recognition Using Finger Segmentation” by Zhi-hua Chen, Jung-Tae Kim, Jianning Liang, Jing Zhang, and Yu-Bo Yuan notes that hand gesture recognition is critical for human-computer interaction. The authors describe a novel real-time approach to hand gesture recognition in which the hand region is separated from the background using the background subtraction technique.

2. The paper “Gesture Based Vocalizer for Deaf and Dumb” by Supriya Shevate, Nikita Chorage, Siddhee Walunj, and Moresh M. Mukhedkar points out that the gesture vocalizer is a socially conscious project. According to the results of their survey, deaf persons find it extremely difficult to communicate with others. Deaf people typically communicate with hand signs, which makes it hard for others to understand them. The authors intend to implement a gesture-based vocalizer that recognises the gestures of deaf people, translates them to speech, and shows them on an LCD screen.

3. The paper “Hand Gesture Recognition and Voice Conversion for Deaf and Dumb” by Cheshta states that communication is the primary means by which people interact with one another. Birth defects, accidents, and oral disorders have all contributed to a rise in the number of deaf and mute people in recent years.

4. Several other papers express views similar to those of the papers mentioned above:

a. “Gesture vocalizer for Deaf and Dumb” by Kshirasagar Snehal P, Shaikh Mohammad Hussain, Malge Swati S., Gholap Shraddha S., Mr. Swapnil Tambatkar

b. “Hand Gesture-Based Vocalizer for the Speech Impaired” by Suyash Ail, Bhargav Chauhan, Harsh Dabhi, Viraj Darji, Yukti Bandi

c. “Innovative Approach for Gesture to Voice Conversion” by Priyanka R. Potdar and Dr. D. M. Yadav

d. “Gesture Vocalizer” by R.L.R. Lokesh Babu, S. Gagana Priyanka, P. Pooja Pravallika, Ch. Prathyusha

IV. PROJECT DESCRIPTION

A. Hardware Description

1. Overview

Gesture to Speech Conversion is a tool that converts the gestures of differently abled people into voice, that is, from gesture input to speech output. The proposed system is portable and emphasizes two-way communication. Its main purpose is to translate hand motions into audible speech so that mute and non-mute persons can communicate. The data glove carries five flex sensors and an accelerometer. Each flex sensor is attached to a different pin on the Arduino UNO controller. The flex sensors provide data to the controller, which shows the output on an LCD display and converts the text into speech through a speaker.

2. Equipment

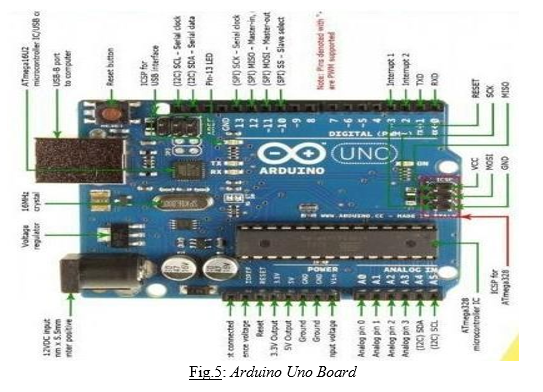

a. Arduino Uno: The Arduino Uno is an open-source microcontroller board designed by Arduino.cc and based on the Microchip ATmega328P microcontroller. The board is equipped with sets of digital and analog input/output (I/O) pins that can be interfaced to various expansion boards (shields) and other circuits. It has 14 digital I/O pins (six capable of PWM output) and 6 analog I/O pins, and it is programmable with the Arduino IDE (Integrated Development Environment) through a type B USB cable. It can be powered through the USB cable or by an external 9-volt battery, although it accepts voltages between 7 and 20 volts. It is similar to the Arduino Nano and Leonardo. The hardware reference design is distributed under a Creative Commons Attribution Share-Alike 2.5 license and is available on the Arduino website. Layout and production files for some versions of the hardware are also available.

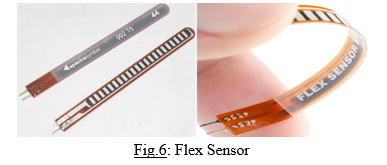

b. Flex Sensor: A flex sensor measures the amount of deflection or bending. It is made from materials such as plastic and carbon: a carbon surface is laid on a plastic strip, and when the strip is bent the sensor's resistance changes. For this reason it is also called a bend sensor. Flex sensors are classified by size into two types, the 2.2-inch flex sensor and the 4.5-inch flex sensor. The two differ in size and in resistance, but the working principle is the same, so the appropriate size can be chosen according to the requirement. Here we give an overview of the 2.2-inch flex sensor. This type of sensor is used in applications such as computer interfaces, rehabilitation, servo motor control, security systems, music interfaces, power control, and wherever the user needs to change a resistance by bending.

c. Pin Configuration: The pin configuration of the flex sensor is shown below. It is a two-terminal device with terminals P1 and P2. Unlike a diode or a capacitor, the sensor has no polarised terminal, which means there is no positive or negative terminal. The voltage required to operate the sensor ranges from 3.3 V to 5 V DC, which can be obtained from the interfacing circuit.

[ flex-sensor-pin-setup ]

- Pin P1: This pin is generally connected to the +ve terminal of the power source. (www.elprocus.com)[2]

- Pin P2: This pin is generally connected to the GND pin of the power source. (www.elprocus.com)[2]
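Because the flex sensor is a plain variable resistor, it is commonly read through a voltage divider feeding an analog input. The source does not describe the divider, so the following is only a sketch under stated assumptions: a fixed 10 kΩ resistor between the 5 V supply and P1, P2 tied to ground, the voltage measured at P1, and hypothetical sensor resistances of 10 kΩ (flat) and 30 kΩ (bent). The 10-bit converter range (0 to 1023) is that of the Arduino Uno.

```python
def divider_voltage(r_flex_ohms, r_fixed_ohms=10_000.0, v_supply=5.0):
    """Voltage at the junction of the fixed resistor and the flex sensor."""
    return v_supply * r_flex_ohms / (r_fixed_ohms + r_flex_ohms)


def adc_counts(voltage, v_ref=5.0, levels=1024):
    """Convert a voltage to a 10-bit ADC count (0..1023), as on the Arduino Uno."""
    counts = int(voltage / v_ref * levels)
    return max(0, min(levels - 1, counts))


# Hypothetical resistances: 10 kOhm when flat, 30 kOhm when fully bent.
for label, r_flex in (("flat", 10_000.0), ("bent", 30_000.0)):
    v = divider_voltage(r_flex)
    print(f"{label}: {v:.2f} V -> ADC count {adc_counts(v)}")
```

With this wiring, bending the finger raises the measured voltage, so a simple threshold on the ADC count is enough to tell a bent finger from a straight one.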

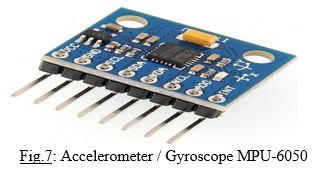

d. Accelerometer MPU-6050: An accelerometer is a sensor that measures the acceleration acting on a device, including the acceleration due to gravity. Many accelerometers operate on the piezoelectric effect; the MPU-6050 is a MEMS device that combines a three-axis accelerometer with a three-axis gyroscope. Accelerometers are used in a variety of devices to monitor acceleration, tilt, and vibration. When the device is tilted, the component of gravity measured along each axis changes, which indicates the direction of inclination. At rest, an accelerometer measures 1 g, the earth's gravitational pull, which is 9.81 metres per second squared or 32.185 feet per second squared.
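The relation between tilt and the gravity components can be made concrete with a short sketch. It assumes the accelerometer readings have already been scaled to units of g and that the hand is at rest, so that gravity is the only acceleration; the function name `tilt_angles` and the axis and sign conventions are illustrative assumptions, not taken from the source.

```python
import math


def tilt_angles(ax, ay, az):
    """Estimate pitch and roll in degrees from accelerations given in g.

    Assumes the sensor is at rest so that only gravity is measured.
    """
    pitch = math.degrees(math.atan2(-ax, math.sqrt(ay * ay + az * az)))
    roll = math.degrees(math.atan2(ay, az))
    return pitch, roll


# Lying flat: gravity falls entirely on the z axis.
print(tilt_angles(0.0, 0.0, 1.0))

# Rolled onto its side: gravity falls entirely on the y axis.
print(tilt_angles(0.0, 1.0, 0.0))
```

Combined with the flex sensor readings, such angles could help distinguish gestures that share the same finger positions but differ in hand orientation.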

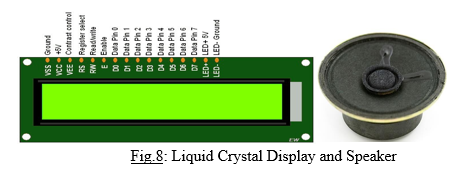

e. Liquid Crystal Display and Speaker: A liquid crystal display (LCD) is a flat-panel display or other electronically modulated optical device that uses the light-modulating properties of liquid crystals. Liquid crystals do not emit light directly; instead, they use a backlight or reflector to produce colour or monochrome images. LCDs can show arbitrary images (as in a general-purpose computer display) or fixed images with low information content that can be displayed or hidden, such as preset words, digits, and seven-segment displays (as in a digital clock). Both kinds use the same basic technology, except that arbitrary images are made up of a large number of small pixels, while other displays have larger elements. The speaker is used for audio output and amplification; it provides the audible output of the circuit.

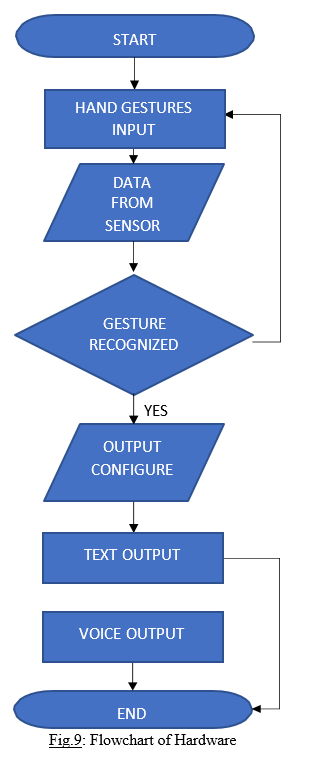

3. Flow Chart: The flow chart depicts how the system will respond in a sequential fashion. As soon as the system is turned on, the sensor will detect data via a hand gesture. If the sensed data sign is recognised, the text output will be shown, and the text output will be converted to an audio output. If the sensed data isn't recognised, the process will start all over again. This process will be repeated until the user's requirements are met.

4. Working: The user wears a glove fitted with flex sensors. To express something, the user makes a gesture by bending the fingers. Bending the flex sensors changes their resistances, and each combination of resistances read at the Arduino's input pins represents a distinct entity. The LCD display is connected to the Arduino. When the user bends their fingers, the bend angles of the flex sensors change, and the resulting change in resistance causes the Arduino to produce the appropriate output according to the code we have prepared, which defines which resistance combination corresponds to which entity. Once the output is available, the connected speaker delivers it as speech.
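The lookup from resistance combinations to output entities can be sketched as follows. The project itself runs on an Arduino; this Python sketch only simulates the decision logic. The threshold of 600 ADC counts, the finger order and the phrases in `GESTURES` are hypothetical, since the source does not list the gesture vocabulary.

```python
BEND_THRESHOLD = 600  # hypothetical ADC count separating straight from bent

# Hypothetical gesture table: one flag per finger (thumb to little finger),
# 1 = bent, 0 = straight.
GESTURES = {
    (1, 0, 0, 0, 0): "I need water",
    (0, 1, 1, 0, 0): "I need help",
    (1, 1, 1, 1, 1): "Thank you",
}


def bend_pattern(readings, threshold=BEND_THRESHOLD):
    """Turn five raw flex sensor readings into a tuple of bent/straight flags."""
    return tuple(1 if r > threshold else 0 for r in readings)


def recognise(readings):
    """Return the text for a known gesture, or None if it is not recognised."""
    return GESTURES.get(bend_pattern(readings))


# Simulated readings from the five flex sensors.
sample = [720, 310, 305, 298, 300]
text = recognise(sample)
if text is None:
    print("Gesture not recognised; sensing again")
else:
    print("LCD:", text)  # the same text would also be sent to the speaker
```

Returning `None` for an unknown pattern mirrors the flow chart, in which an unrecognised sign sends the system back to sensing.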

B. Software Method

1. Overview

Hand motions can be detected with a web camera. The captured images are then resized to a standard size during pre-processing. The goal of this project is to create a system that can translate hand gestures into text: the images are entered into a database and then converted to text by database matching. Hand movement is monitored during the detection process. The system produces text output, which helps to bridge the communication gap between deaf-mute people and the general public.

2. Software Utilized

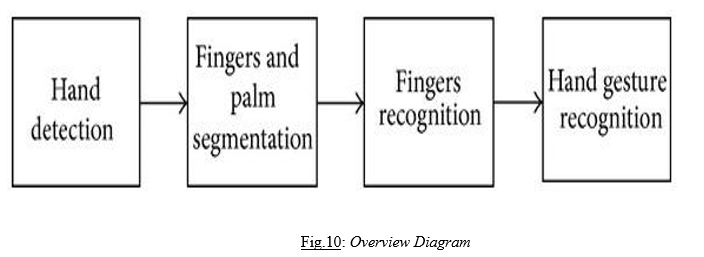

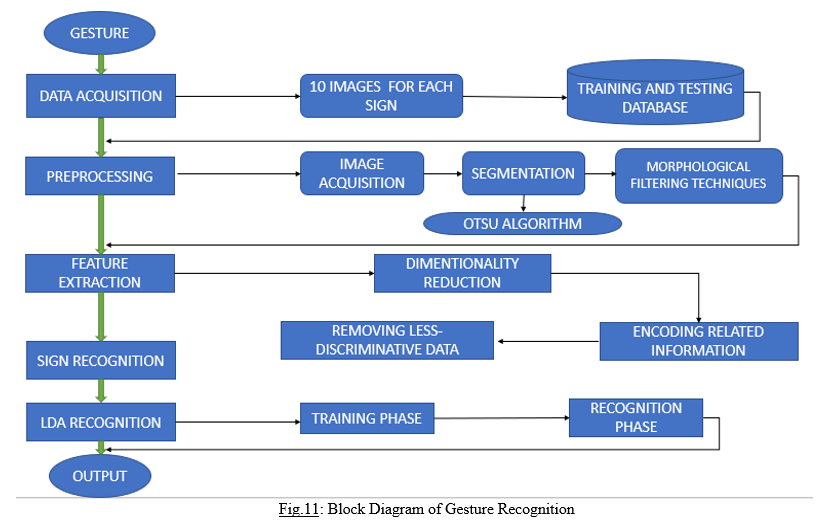

a. Block Diagram and Working

The below block diagram explains the following process:

- Data Acquisition: For each of the 26 signs, ten photos are acquired in order to attain a high level of accuracy in the sign language recognition system. These photos are stored in a database for training and testing. The signer adjusts the distance from the camera to obtain the required image sharpness.

- Pre-Processing: Pre-processing comprises image acquisition, segmentation, and morphological filtering.

- Image acquisition: This is the first stage of pre-processing, in which the image is captured. Adequate illumination helps image acquisition. Pre-processing such as scaling is also required. During image acquisition, the image is taken from the database.

- Segmentation: Segmentation is the technique of dividing an image into small segments in order to extract more accurate image attributes. If the segments are self-contained (no two segments of an image share the same information), the representation and description of the image will be accurate, whereas rough segmentation gives inaccurate results. Hand segmentation is used here to isolate the hand from the background. The Otsu algorithm is used for segmentation. The segmented hand image exhibits certain features.

- Morphological Filtering: Morphological filtering tools extract the image components that are useful for representing and describing shape. The output of this process is the set of image attributes.
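The Otsu step named in the segmentation stage picks the gray level that best separates background from foreground by maximising the between-class variance of the histogram. The following is a minimal pure-Python sketch on a flat list of 8-bit pixel values; the function name `otsu_threshold` and the sample pixel values are illustrative assumptions, and a real system would more likely call an image processing library.

```python
def otsu_threshold(pixels, levels=256):
    """Return the gray level that maximises the between-class variance."""
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1

    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(hist))

    weight_bg = 0   # number of pixels at or below the candidate threshold
    sum_bg = 0      # sum of their gray levels
    best_t, best_var = 0, -1.0
    for t in range(levels):
        weight_bg += hist[t]
        sum_bg += t * hist[t]
        if weight_bg == 0:
            continue
        weight_fg = total - weight_bg
        if weight_fg == 0:
            break
        mean_bg = sum_bg / weight_bg
        mean_fg = (sum_all - sum_bg) / weight_fg
        var_between = weight_bg * weight_fg * (mean_bg - mean_fg) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t


# Hypothetical pixels: four dark background values and four bright hand values.
pixels = [20, 22, 25, 30, 200, 210, 220, 205]
t = otsu_threshold(pixels)
mask = [1 if p > t else 0 for p in pixels]
print("threshold:", t)
print("mask:", mask)
```

The binary mask produced this way is what the morphological filtering stage would then clean up before features are extracted.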

Conclusion

This study presents a new method for hand gesture recognition. The background subtraction approach is used to separate the hand region from the background. The palm and fingers are then segmented, and the fingers in the hand image are located and recognised on the basis of this segmentation. Hand gesture recognition is performed with a simple rule classifier. The performance of the method was tested on a data set of 1300 hand images. The experimental results suggest that the method works well and is suitable for real-time applications. Moreover, on an image collection of hand gestures, the proposed method outperforms the state-of-the-art FEMD. The performance of the proposed method depends heavily on the results of hand detection. If there are moving objects whose colour is similar to that of skin, they are detected along with the hand and degrade gesture recognition performance. Machine learning algorithms, on the other hand, can distinguish the hand from the background, and ToF cameras provide depth information that can improve hand detection. To address the complex-background problem and improve the robustness of hand detection, machine learning algorithms and ToF cameras may be applied in future research.

Currently, research efforts have primarily concentrated on recognising static ISL signs from pictures or video sequences captured under controlled conditions. The dimensionality of the sign recognition process will be reduced by employing the LDA method. As a result of dimensionality reduction, noise will be decreased and accuracy improved. In future, this project will be extended to recognise numbers and display them in words. We attempted to construct this system by combining numerous image processing approaches and fundamental image features. The recognition of gestures has been accomplished using LDA algorithms. Remembering that every one of God's creatures has value in society, let us endeavour to include hearing-impaired persons in our daily lives and live together.

References

[1] Sanish Manandhar, Sushana Bajracharya, Sanjeev Karki, Ashish Kumar Jha, “Hand Gesture Vocalizer for dumb and deaf people”, SCITECH Nepal, 2019.

[2] www.elprocus.com

[3] en.wikipedia.org

[4] www.hindawi.com

[5] www.ijarcce.com

[6] Y C Venkatesh, A. S Srikantappa, P Dinesh, “Smart Brake Monitoring System with Brake Failure Indication for Automobile Vehicles”, IOP Conference Series: Materials Science and Engineering, 2020.

[7] Circuitdigest.com

[8] Sawant, Shreyashi Narayan, and M. S. Kumbhar, “Realtime sign language Recognition using PCA”, 2014 IEEE International Conference on Advanced Communications Control and Computing Technologies, 2014.

[9] Yikai Fang, Kongqiao Wang, Jian Cheng, Haning Lu, “A Real-Time Hand Gesture Recognition Method”, Multimedia and Expo, 2007 IEEE International Conference on, 2007.

[10] mafiadoc.com

[11] link.springer.com

[12] dl.acm.org

[13] D. Bagdanov, A. Del Bimbo, L. Seidenari, and L. Usai, “Real-time hand status recognition from RGB-D imagery,” in Proceedings of the 21st International Conference on Pattern Recognition (ICPR '12), pp. 2456–2459, November 2012.

[14] M. Elmezain, A. Al-Hamadi, and B. Michaelis, “A robust method for hand gesture segmentation and recognition using forward spotting scheme in conditional random fields,” in Proceedings of the 20th International Conference on Pattern Recognition (ICPR '10), pp. 3850–3853, August 2010.

[15] C.-S. Lee, S. Y. Chun, and S. W. Park, “Articulated hand configuration and rotation estimation using extended torus manifold embedding,” in Proceedings of the 21st International Conference on Pattern Recognition (ICPR '12), pp. 441–444, November 2012.

Copyright

Copyright © 2022 Challa Rishitha , Mallikanti Bhavana , Pendem Sriya, Dr. Y. Sreenivasulu. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET43737

Publish Date : 2022-06-02

ISSN : 2321-9653

Publisher Name : IJRASET
