Ijraset Journal For Research in Applied Science and Engineering Technology

Human Activity Recognition With Smartphone

Authors: Shubham Bhandari, Aishwarya Biradar, Aditi Kshirsagar, Preeti Biradar, Jayshri Kekan

DOI Link: https://doi.org/10.22214/ijraset.2022.43295

Abstract

Human Activity Recognition (HAR) classifies activities performed by people using the motion sensors built into smartphones, which respond to the user's movements. HAR is an important technology with wide applications in medical research, human survey systems, security systems, fitness, and other areas. In this project we predict what a person is doing from their movement traces, captured by smartphone sensors. The movements are ordinary indoor physical activities such as standing, sitting, walking, running, walking upstairs, and walking downstairs. Smartphones have various built-in sensors, such as an accelerometer and a gyroscope. The system takes the raw data from these sensors as input, processes it, and predicts the human activity using machine learning techniques. We analyze the performance of two classification algorithms: Decision Tree (DT) and Convolutional Neural Network (CNN). Experimental results show that the classification rate reaches 98%. With this work we build a robust smartphone-based activity detection system and propose a platform for identifying human activity in real time.

Introduction

I. INTRODUCTION

In recent years, considerable research has concentrated on solutions that recognize activities of daily living using inertial sensors. This is possible due to two factors: the increasing availability of low-cost hardware and advances in signal processing, which open opportunities in a variety of application contexts such as surveillance and healthcare. A HAR system is required to recognise six basic human activities (walking, sitting, standing, walking upstairs, walking downstairs, and laying) by training a supervised learning model and displaying the recognised activity according to the input received from the sensors.

The need to recognise human activities has grown in the medical domain, mainly in elder care support, cognitive disorders, diabetes, and rehabilitation assistance. If sensors could help caregivers record and monitor patients continuously and automatically report any abnormal behaviour, a huge amount of resources could be saved. Numerous studies have successfully identified activities using wearable sensors with very low error rates. Readings from multiple body-attached sensors achieve low error rates, but such complex setups are not practical in everyday use.

The project uses low-cost, commercially available smartphones as sensors to detect human activity. The increasing popularity of smartphones and their computing power make them an ideal platform for non-invasive body-attached sensing. The purpose of this research is to identify and evaluate the action performed by a human subject using data from the relevant sensors. According to U.S. Mobile Consumer Statistics, about 44% of mobile subscribers in 2011 had smartphones, and 96% of these smartphones had built-in inertial sensors, such as accelerometers or gyroscopes. Research has shown that a gyroscope can help identify activity, even though its contribution alone is not as good as that of an accelerometer. Since the gyroscope is not as easily accessed in cell phones as the accelerometer, our system uses readings only from a 3-dimensional accelerometer. In our design, the phone can be placed at any position around the waist, such as a jacket pocket or pants pocket, with arbitrary orientation. These are the most common places where people carry mobile phones.

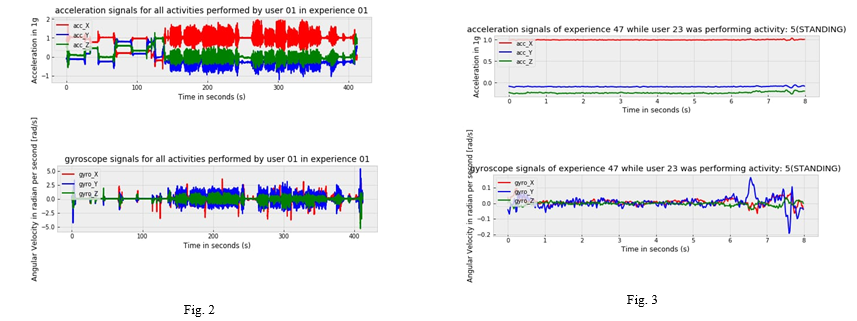

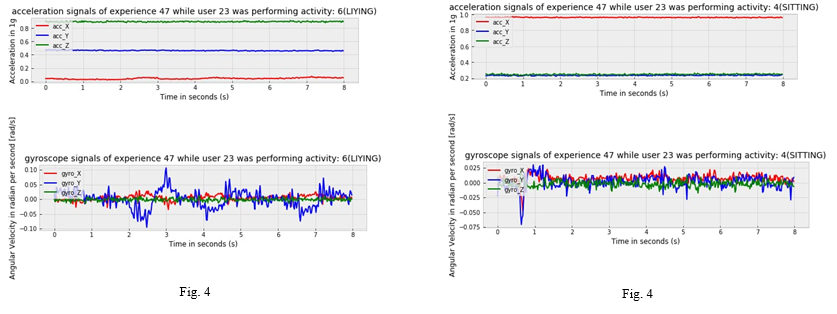

We captured sensor data for six activities: standing, sitting, laying, walking, walking upstairs and walking downstairs.

The training process is always necessary when a new activity is added to the system. The parameters of the same algorithm may need to be retrained and adjusted when it runs on different devices, because of differences in their sensors. HAR encompasses a wide range of activities, including sitting, standing, laying, walking, walking upstairs, and walking downstairs. As people become more conscious of their health, exercise and sleep tracking has become a fashionable trend, and many health enthusiasts depend completely on such devices and sensors for these purposes.

The project aims to create a lightweight and precise system on smartphones that can detect human activity. By testing and comparing different learning algorithms, we find the one most suitable for our system in terms of scalability and reliability on smartphones.

II. LITERATURE REVIEW

- A light weight deep learning model for human activity recognition on edge devices, proposed by Preeti Agarwal and Mansaf Alam. The architecture of the proposed lightweight model is developed using a shallow recurrent neural network (RNN) combined with the long short-term memory (LSTM) deep learning algorithm. The model is then trained and tested for six HAR activities on a resource-constrained edge device, such as the Raspberry Pi 3, using optimized parameters. An experiment is conducted to evaluate the efficiency of the proposed model on the WISDM dataset, which contains sensor data of 29 participants performing six daily activities: jogging, walking, standing, sitting, upstairs and downstairs. The model's performance is measured in terms of accuracy, precision, recall, F-measure, and confusion matrix.

- Wearable sensor-based human activity recognition using hybrid deep learning techniques, proposed by Huaijun Wang, Jing Zhao, Junhuai Li, Ling Tian, Pengjia Tu, Ting Cao, Yang An, Kan Wang, and Shancang Li. This approach proposes a deep-learning-based scheme that can recognize both specific activities and transitions between two different activities of short duration and frequency for health care applications. The paper conducts its experiments on the international standard dataset Smartphone-Based Recognition of Human Activities and Postural Transitions, abbreviated as the HAPT dataset.

- Automated Daily Human Activity Recognition for Video Surveillance Using Neural, proposed by Mohanad Babiker, Kyaw Kyaw Htike, Othman O. Khalifa, Muhamed Zaharadeen and Aisha Hassan. Surveillance video systems are gaining increasing attention in the field of computer vision because of user demand for the sake of security. Observing human movements and predicting such movements is promising. There is a need to develop a surveillance system that monitors normal and suspicious events without interruption and that makes it easier to control huge surveillance networks, rather than relying on human resources for monitoring. In this paper, an intelligent human activity recognition system is developed. A series of digital image processing techniques, such as background subtraction, binarization and morphological operations, is used at each stage of the proposed system. A robust neural network is built on a database of human activity features extracted from frame sequences. A multi-layer feed-forward perceptron network is used to classify the activity models in the dataset. The classification results show high performance in all stages: training, testing and validation. Ultimately, these results lead to promising activity recognition rates.

- Detailed Human Activity Recognition using Wearable Sensor and Smartphones, proposed by Asmita Nandy, Jayita Saha, Chandreyee Chowdhury, and Kundan P.D. Singh. The use of human activity recognition is increasing day by day for smart home, eldercare, remote health monitoring and surveillance purposes. To better serve these purposes, detailed identification of activities is required, e.g., sitting on a chair or on the ground, walking slowly, or carrying loads, for instance for a person recovering from surgery. In this work, a solution for this purpose is proposed with the help of wearable and smartphone-embedded sensors. The contribution of the work is a framework for detailed identification of both static and dynamic activities, as well as their intensive counterparts, built by designing an ensemble of classifiers. The ensemble applies weighted majority voting to classify test instances. The weights of the base classifiers are determined by feeding their output performance on the training dataset into a neural network.

III. METHODS

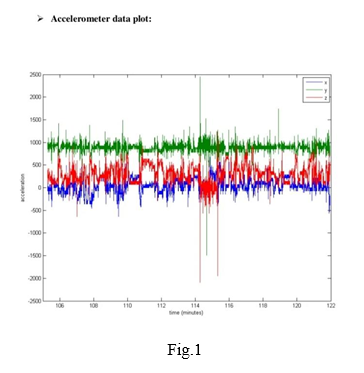

A. Feature Generation

To collect acceleration data, each subject carries the smartphone for a few hours while performing some activity. In this project, five kinds of common activities are studied: walking, sitting, standing, walking upstairs, and walking downstairs. The phone can be placed anywhere, but accuracy is higher when it is placed near the waist. We captured 3-axial linear acceleration and 3-axial angular velocity at a constant rate of 50 Hz using the embedded accelerometer and gyroscope.

B. Classification Algorithm

In this project, two types of algorithms are used to classify the activity as explained below.

1. Decision Tree: A decision tree is a supervised learning method that can be used for both classification and regression problems, but it is most often used for classification. It is a tree-structured classifier in which internal nodes represent the features of a dataset, branches represent the decision rules, and each leaf node represents an outcome. A decision tree has two types of nodes: decision nodes and leaf nodes. Decision nodes are used to make a decision and have multiple branches, while leaf nodes are the outputs of those decisions and have no further branches.
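The node structure described above can be sketched in a few lines of Python. This is an illustrative sketch only: the `Node` class, the two features, the thresholds and the labels are hypothetical and are not taken from the trained model in this project.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Node:
    """A decision node (has children) or a leaf node (has a label)."""
    feature: Optional[int] = None      # index of the feature tested at a decision node
    threshold: Optional[float] = None  # decision rule: go left if value <= threshold
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    label: Optional[str] = None        # set only on leaf nodes


def predict(node: Node, sample: Sequence[float]) -> str:
    """Follow the decision rules from the root until a leaf is reached."""
    while node.label is None:
        node = node.left if sample[node.feature] <= node.threshold else node.right
    return node.label


# Hypothetical tree: feature 0 = acceleration variance,
# feature 1 = mean vertical acceleration (made-up thresholds).
tree = Node(
    feature=0, threshold=0.5,
    left=Node(feature=1, threshold=5.0,
              left=Node(label="laying"), right=Node(label="standing")),
    right=Node(label="walking"),
)

print(predict(tree, [0.1, 9.8]))  # low variance, high vertical mean
print(predict(tree, [2.3, 9.1]))  # high variance
```

In practice the tree is learned from training data rather than written by hand; the sketch only shows how decision nodes route a sample to a leaf.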

When implementing a decision tree, the main problem is how to choose the best attribute for the root node and for each sub-node.

A technique called the Attribute Selection Measure (ASM) addresses this problem. With such a measure, we can select the best attribute for each node of the tree. There are two popular ASM methods:

a. Information Gain: Information gain is a measure of the change in entropy after a dataset is split on an attribute. It calculates how much information a feature provides about the class.

$$\text{Information Gain} = \text{Entropy}(S) - \left[(\text{Weighted Avg}) \times \text{Entropy}(\text{each feature})\right]$$

Entropy: Entropy is a metric for measuring the impurity of a given attribute. It specifies the randomness in the data. Entropy can be calculated as follows:

$$\text{Entropy}(S) = -P(\text{yes}) \log_2 P(\text{yes}) - P(\text{no}) \log_2 P(\text{no})$$

Where,

S= Total number of samples

P(yes)= probability of yes

P(no)= probability of no
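As a worked illustration of the two quantities, the sketch below computes entropy and information gain for a toy yes/no dataset. The function names and the sample labels are assumptions made for this example; the entropy function is written for any number of classes and reduces to the two-class formula for yes/no labels.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (base 2) of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * math.log2(n / total) for n in Counter(labels).values())


def information_gain(labels, groups):
    """Entropy of the parent minus the size-weighted entropy of the child groups."""
    total = len(labels)
    weighted = sum(len(g) / total * entropy(g) for g in groups)
    return entropy(labels) - weighted


# Toy data (made up): 4 "yes" and 4 "no" samples split by some attribute.
parent = ["yes"] * 4 + ["no"] * 4
left = ["yes", "yes", "yes", "no"]
right = ["yes", "no", "no", "no"]

print(entropy(parent))
print(information_gain(parent, [left, right]))
```

A split that separates the classes perfectly would give the maximum gain for this parent, while a split that leaves both children as mixed as the parent gives a gain of zero.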

b. Gini Index: The Gini index is a measure of impurity or purity used when creating a decision tree with the CART (Classification and Regression Tree) algorithm.

An attribute with a lower Gini index should be preferred over one with a higher Gini index.

The CART algorithm uses the Gini index to create its splits, and it generates only binary splits.

The Gini index can be calculated as follows:

$$\text{Gini Index} = 1 - \sum_{j} P_j^{2}$$
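The following sketch shows how the Gini index can be used to compare two candidate binary splits. The helper names and the toy labels are assumptions for illustration; weighting each child by its size is the usual way a split is scored, and is not spelled out in the text above.

```python
from collections import Counter


def gini_index(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    total = len(labels)
    return 1.0 - sum((n / total) ** 2 for n in Counter(labels).values())


def weighted_gini(left, right):
    """Size-weighted Gini index of a binary split."""
    total = len(left) + len(right)
    return len(left) / total * gini_index(left) + len(right) / total * gini_index(right)


# Toy labels (made up) for two candidate binary splits of the same 6 samples.
split_a = (["walking", "walking", "walking"], ["sitting", "sitting", "sitting"])
split_b = (["walking", "sitting", "walking"], ["sitting", "walking", "sitting"])

print(weighted_gini(*split_a))  # pure children
print(weighted_gini(*split_b))  # mixed children
```

Since the lower value is preferred, the first split, whose children each contain a single class, would be chosen over the second.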

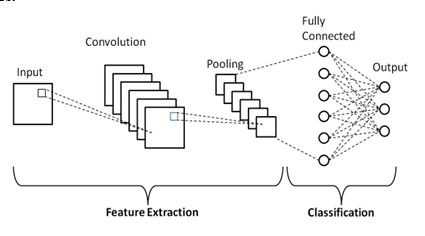

2. Convolutional Neural Network (CNN): A convolutional neural network (CNN) is a network architecture for deep learning that learns directly from data, removing the need for manual feature extraction. CNNs are especially useful for finding patterns in images to identify objects, faces and scenes. They can also be effective for classifying non-image data, such as audio, time series, and signal data.

The two major parts of a CNN architecture are:

- A convolution tool that separates and identifies the various features of the image for analysis, in a process called Feature Extraction.

- A fully connected layer that takes the output of the convolution process and predicts the class of the image based on the features extracted in the previous stages.

a. Feature Extraction

- Convolution Layer: Convolution puts the input images through a set of convolutional filters, each of which activates certain features from the images.

- Pooling Layer: This layer simplifies the output by performing nonlinear down sampling, reducing the number of parameters that the network needs to learn.

b. Classification: The Fully Connected (FC) layer: It consists of the weights and biases along with the neurons and is used to connect the neurons between two different layers.
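To make the roles of the three layer types concrete, here is a minimal forward pass over a one-dimensional signal in plain Python. It is a sketch under stated assumptions: the signal window, the kernel, and the weights are hand-picked and untrained, the ReLU activation is a common choice that the text does not name, and a real CNN would learn many kernels with a deep learning framework.

```python
def conv1d(signal, kernel):
    """Valid 1-D convolution (cross-correlation, as used in CNN layers)."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]


def relu(values):
    """Keep positive activations and zero out the rest."""
    return [max(0.0, v) for v in values]


def max_pool1d(values, size):
    """Non-overlapping max pooling; a trailing partial window is dropped."""
    return [max(values[i:i + size]) for i in range(0, len(values) - size + 1, size)]


def dense(features, weights, biases):
    """Fully connected layer: one weighted sum plus bias per output neuron."""
    return [sum(f * w for f, w in zip(features, row)) + b
            for row, b in zip(weights, biases)]


# Made-up accelerometer-like window and hand-picked (untrained) parameters.
window = [0.0, 1.0, 0.0, -1.0, 0.0, 1.0, 0.0, -1.0, 0.0]
edge_kernel = [-1.0, 0.0, 1.0]  # responds where the signal is rising

feature_map = relu(conv1d(window, edge_kernel))  # feature extraction
pooled = max_pool1d(feature_map, 2)              # down sampling
scores = dense(pooled,
               weights=[[1.0, 1.0, 1.0], [-1.0, -1.0, -1.0]],
               biases=[0.0, 1.0])                # one score per class
print(feature_map, pooled, scores)
```

The class with the highest score would be reported as the prediction; in a trained network the kernel and the dense weights are learned from labelled data.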

IV. RESULTS AND DISCUSSIONS

A. Data Collection

- Feature Selection: The body linear acceleration and angular velocity were then derived in time to obtain the jerk signals (tBodyAccJerk-XYZ and tBodyGyroJerk-XYZ). The magnitude of these three-dimensional signals was also calculated using the Euclidean norm (tBodyAccMag, tGravityAccMag, tBodyAccJerkMag, tBodyGyroMag, tBodyGyroJerkMag). Finally, a Fast Fourier Transform (FFT) was applied to some of these signals, producing fBodyAcc-XYZ, fBodyAccJerk-XYZ, fBodyGyro-XYZ, fBodyAccJerkMag, fBodyGyroMag, fBodyGyroJerkMag. The prefix 'f' indicates frequency domain signals.

- Signal Processing: Noise filtering: The features selected for this database come from the accelerometer and gyroscope 3-axial raw signals t_Acc-XYZ and t_Gyro-XYZ. These time domain signals (prefix 't' denotes time) were captured at a constant rate of 50 Hz. They were then filtered using a median filter and a 3rd order low pass Butterworth filter with a corner frequency of 20 Hz to remove noise.
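The preprocessing and feature steps in the two items above can be sketched with the Python standard library alone. This is a simplified illustration, not the pipeline used to build the dataset: the function names and sample values are made up, the Butterworth low-pass step is not shown, the median window size is an assumption, and a naive discrete Fourier transform stands in for the FFT.

```python
import cmath
import math
from statistics import median

FS = 50.0  # sampling rate in Hz, as stated in the text


def median_filter(x, size=3):
    """Sliding median with edge samples repeated to keep the length."""
    half = size // 2
    padded = [x[0]] * half + list(x) + [x[-1]] * half
    return [median(padded[i:i + size]) for i in range(len(x))]


def jerk(x, fs=FS):
    """First difference scaled by the sampling rate (time derivative)."""
    return [(b - a) * fs for a, b in zip(x, x[1:])]


def magnitude(xs, ys, zs):
    """Euclidean norm of a three-axis signal, sample by sample."""
    return [math.sqrt(x * x + y * y + z * z) for x, y, z in zip(xs, ys, zs)]


def dft_magnitudes(x):
    """Naive O(n^2) discrete Fourier transform; returns |X[k]| for each bin."""
    n = len(x)
    return [abs(sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n)))
            for k in range(n)]


# Made-up samples with one spike that the median filter should suppress.
raw_x = [0.0, 0.1, 5.0, 0.1, 0.0, -0.1, 0.0, 0.1]
clean_x = median_filter(raw_x)
jerk_x = jerk(clean_x)                  # time-domain jerk on one axis
spectrum_x = dft_magnitudes(clean_x)    # frequency-domain counterpart
print(clean_x)
```

Applying `jerk` and `magnitude` to all three axes, and then the transform to the results, would give the time-domain and frequency-domain signal families listed above.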

B. Model

- Data Splitting: The raw data from the dataset was randomly partitioned to generate the training dataset, and real-time sensor data is used for testing.
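A random partition of this kind can be sketched as follows. The 80% training fraction, the fixed seed, and the sample records are assumptions made for the example; the text does not state the proportions used. The held-out part is kept here only for offline checks, since the project tests on real-time sensor data.

```python
import random


def random_split(samples, train_fraction=0.8, seed=0):
    """Shuffle a copy of the samples and cut it into training and held-out parts."""
    rng = random.Random(seed)       # fixed seed so the split is reproducible
    shuffled = list(samples)
    rng.shuffle(shuffled)
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


# Made-up (feature_vector, label) pairs standing in for the recorded dataset.
dataset = [([0.1 * i, 0.2 * i], "walking" if i % 2 else "sitting") for i in range(10)]
train, held_out = random_split(dataset)
print(len(train), len(held_out))
```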

C. Deployment of Model

- Activity Selection: The real-time sensor data is compared with the system's trained dataset, and the system extracts activity labels only for the following activities: standing, sitting, laying, walking, walking upstairs and walking downstairs.

V. FUTURE WORK

Future work could consider more activities and implement a real-time system on smartphones. Other query strategies such as variance reduction and density-weighted methods can be examined to enhance the performance of the active learning schemes proposed here.

For HAR systems to reach their full potential, more research is needed. Since each researcher uses a different dataset to identify activities, comparisons between HAR systems are hampered and may be invalid. A simple public dataset would help researchers benchmark and fully develop their systems. The activities identified by existing systems are simple and atomic, and may be parts of more complex composite behaviours. Identifying composite activities can enrich context awareness. There is also a great research opportunity in identifying overlapping and concurrent activities. Deep learning algorithms, such as one-dimensional and two-dimensional convolutional neural networks and hybrids of convolutional networks and LSTM, should be studied further to determine their suitability for identifying human activity from raw signal data. Existing HAR systems focus primarily on individual activities, but they could be extended to identify patterns and activity trends for groups of people using social networks. Finally, a recognition system that can predict a user's action before it takes place could be a revolutionary development in certain applications.

Conclusion

In this project, we designed a smartphone-based recognition system that recognizes six human activities: walking, standing, sitting, laying, walking upstairs, and walking downstairs. We have presented the general architecture used to build a system for detecting human activity and focused on design issues such as sensor selection, constraints, and flexibility, each of which is evaluated independently depending on the type of system. The paper further focuses on the importance of selecting key features from the data and provides a quantitative analysis of the implementation time and accuracy metrics. The results indicate that implementation time and computing costs are greatly reduced by using feature selection methods, without compromising accuracy. Better feature selection methods and improved parameter tuning can further help improve accuracy and reduce computational costs. The paper also provides a way to reduce or eliminate the need for domain knowledge to create hand-crafted features from the raw signals obtained from the sensors. The activity data were trained and tested using two algorithms: decision tree and convolutional neural network. The accuracy achieved by the use of recurrent neural networks on raw signal data is on par with other classification models built on hand-crafted features. Adding further layers to the network or increasing its complexity increases the recognition accuracy of deep learning algorithms. The best classification rate in our experiment is 98%, which is achieved by the CNN. The classification performance is robust to the orientation and the position of the smartphone.

References

[1] Andreas Dengel, Bishoy Sefen, Sebastian Baumbach, Slim Abdennadher, “Human activity recognition using sensor data of smartphones and smartwatches.” German Research Center for Artificial Intelligence (DFKI), Kaiserslautern, Germany, February 2016.

[2] Fakhreddine Ababsa, Cyrille Migniot, “Human activity recognition based on image sensors and deep learning.” Presented at the 6th International Electronic Conference on Sensors and Applications, 14 November 2019.

[3] Anna Ferrari, Daniela Micucci, Marco Mobilio, Paolo Napoletano, “Trends in Human Activity recognition using smartphones.” Journal of Reliable Intelligent Environments, volume 7, 2021.

[4] Preeti Agarwal, Mansaf Alam, “A light weight deep learning model for human activity recognition on edge devices.” International Conference on Computational Intelligence and Data Science, 16 April 2020.

[5] Marcin Straczkiewicz, Peter James, Jukka-Pekka Onnela, “A systematic review of smartphone based Human Activity Recognition methods for health research.” npj Digital Medicine, 2021.

[6] Warren Triston D’souza, Kavitha R, “Human activity recognition using accelerometer and gyroscope sensors.” International Journal of Engineering and Technology, April 2017.

[7] Ankita, Shalli Rani, Himanshi Babbar, Sonya Coleman, Aman Singh, Hani Moaiteq Aljahdali, “An efficient and lightweight deep learning model for human activity recognition using smartphones.” DOI: 10.3390/s21113845, 2021.

[8] Amin Rasekh, Chien-An Chen, Yan Lu, “Human activity recognition using smartphone.” DOI: 10.35940/ijrte.d4521.118419, January 2014.

[9] Ruchita Deshmukh, Sneha Aware, Akshay Picha, Abhiyash Agrawal, “Human activity recognition using embedded smartphone sensors.” International Research Journal of Engineering and Technology, April 2018.

[10] Duc Ngoc Tran, Duy Dinh Phan, “Human activities recognition in android smartphone using support vector machine.” 7th International Conference on Intelligent Systems, 16 March 2017.

[11] Charlene V. San Buenaventura, Nestor Michael C. Tiglao, “Basic human activity recognition based on sensor fusion in smartphones.” IFIP/IEEE Symposium on Integrated Network and Service Management (IM), 8-12 May 2017.

[12] Davide Anguita, Alessandro Ghio, Luca Oneto, Xavier Parra, Jorge L. Reyes-Ortiz, “Human activity recognition on smartphones using a multiclass hardware-friendly support vector machine.” International Workshop on Ambient Assisted Living, 2012.

[13] Charissa Ann Ronao, Sung-Bae Cho, “Human Activity Recognition with smartphone sensors using deep learning neural networks.” https://doi.org/10.1016/j.eswa.2016.04.032, 15 October 2016.

[14] Hansa Shingrakhia, “Human activity recognition using deep learning.” Journal of Emerging Technologies and Innovative Research, May 2018.

[15] Jennifer R. Kwapisz, Gary M. Weiss, Samuel A. Moore, “Activity recognition using cell phone accelerometers.” https://doi.org/10.1145/1964897.1964918, March 2011.

[16] Vimala Nunavath, Sahand Johansen, Tommy Sandtorv Johannessen, Lei Jiao, “Deep learning for classifying physical activities from accelerometer data.” https://www.mdpi.com/1424-8220/21/16/5564, August 2021.

[17] Akram Bayat, Marc Pomplun, Duc A. Tran, “Study on human activity recognition using accelerometer data from smartphones.” The 11th International Conference on Mobile Systems and Pervasive Computing, 2014.

[18] Amin Rasekh, Chien-An Chen, Yan Lu, “Human activity recognition using smartphone.” https://doi.org/10.48550/arXiv.1401.8212, January 2014.

[19] Ashwani Prasad, Amit Kumar Tyagi, Maha M. Althobaiti, Ahmed Almulihi, Romany F. Mansour, Ayman M. Mahmoud, “Human activity recognition using cell phone-based accelerometer and convolutional neural network.” DOI: 10.3390/app112412099, 2021.

Copyright

Copyright © 2022 Shubham Bhandari, Aishwarya Biradar, Aditi Kshirsagar, Preeti Biradar, Jayshri Kekan. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET43295

Publish Date : 2022-05-25

ISSN : 2321-9653

Publisher Name : IJRASET
