Ijraset Journal For Research in Applied Science and Engineering Technology


Mood Music Recommendation Using Emotion Detection

Authors: Rugwed Nand, Devkinandan Jagtap, Vedant Achole, Atharva Jadhav, Prof. Prranjali Jadhav

DOI Link: https://doi.org/10.22214/ijraset.2023.52385


Abstract

The proposed mood music recommendation system uses deep learning and emotion detection to provide personalized, dynamic music recommendations. The system uses modalities such as facial expressions, speech patterns, and voice tone to detect the user's emotional state, and then recommends songs and playlists that match the user's mood, enhancing their overall listening experience. A key advantage of the proposed system is its adaptability: as the user's mood changes, the system can adjust its recommendations so that the music stays appropriate to the user's current emotional state. This adaptability matters because emotions are unpredictable and change constantly. By providing music tailored to the user's current mood, the system can help users manage their emotions and improve their well-being. Overall, the proposed system has the potential to transform music streaming by offering a highly personalized and dynamic listening experience, and it represents a step towards intelligent, adaptive music recommendation systems.


I. INTRODUCTION

A. Introduction

Music has the ability to influence our emotions and can have a significant impact on our mood. Many people use music as a tool for managing their emotions, whether it's to uplift their spirits, calm their nerves, or provide a sense of comfort. However, finding the right music to match our current emotional state can be a challenging task. Traditional music recommendation systems typically rely on user preferences, playlists, and music genres, which may not necessarily reflect the user's current emotional state.

To address this issue, we propose a mood music recommendation system that leverages emotion detection technology to provide personalized and dynamic music recommendations based on the user's current emotional state. The system utilizes deep learning models to analyze the user's emotional state through various modalities such as facial expressions, speech patterns, and voice tone. Based on the user's emotional state, the system recommends songs and playlists that align with the user's mood, providing a highly personalized music listening experience.

The proposed system has the potential to change the way we listen to music. By taking the user's emotional state into account, it can provide music that is not only enjoyable but may also enhance the listener's well-being. In this paper, we present the architecture and implementation details of the proposed mood music recommendation system and evaluate its effectiveness using a dataset of real-world emotional responses. We believe the proposed system represents an exciting step towards the development of intelligent and adaptive music recommendation systems.

Facial expression recognition (FER) has advanced rapidly in recent years. Emotions are reactions that human beings experience in response to events or situations, and the type of emotion a person experiences depends on the circumstance that triggers it.

With an emotion recognition system, AI can detect a person's emotions from their facial expressions. Detected emotions typically fall into one of six basic categories: happiness, sadness, fear, surprise, disgust, and anger. For example, an AI model can readily identify a smile as happiness.

B. Requirements

This project requires VS Code (or any other Python IDE) and a webcam application.

Emotion detection technology: The system requires an emotion detection technology that can accurately analyze the user's emotional state through various modalities such as facial expressions, speech patterns, and voice tone.

Music dataset: A large and diverse music dataset is required for the system to recommend appropriate songs and playlists based on the user's emotional state. The music dataset should cover various genres and be regularly updated to ensure a fresh and relevant music selection.

Deep learning model: The system requires a deep learning model that can process the emotional data obtained from the emotion detection technology and recommend suitable music.
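The paper does not specify how a detected emotion is turned into a recommendation. A minimal sketch, assuming a hypothetical catalogue in which every track carries a mood tag, is a lookup from the detected emotion to a target mood followed by a filter over the catalogue. Note that this is a simple rule-based lookup, not the learned recommender described above; the `Track` type, the `EMOTION_TO_MOOD` table, and the sample songs are all illustrative assumptions.

```python
from dataclasses import dataclass


# Hypothetical track record: a real system would load these from a music
# dataset or streaming service instead of hard-coding them.
@dataclass(frozen=True)
class Track:
    title: str
    mood: str  # e.g. "upbeat", "calm", "comforting"


# Assumed mapping from a detected emotion to the mood of music to play.
# Whether to match an emotion (sad -> comforting) or counter it
# (sad -> upbeat) is a design decision the system would have to make.
EMOTION_TO_MOOD = {
    "happy": "upbeat",
    "surprise": "upbeat",
    "neutral": "calm",
    "sad": "comforting",
    "fear": "calm",
    "angry": "calm",
    "disgust": "calm",
}


def recommend(emotion: str, catalogue: list[Track], limit: int = 5) -> list[Track]:
    """Return up to `limit` tracks whose mood suits the detected emotion."""
    target = EMOTION_TO_MOOD.get(emotion, "calm")  # fall back to calm music
    return [t for t in catalogue if t.mood == target][:limit]


catalogue = [
    Track("Song A", "upbeat"),
    Track("Song B", "calm"),
    Track("Song C", "comforting"),
    Track("Song D", "upbeat"),
]
print([t.title for t in recommend("happy", catalogue)])  # ['Song A', 'Song D']
```

A learned model could later replace `EMOTION_TO_MOOD` (for example by ranking tracks using user feedback) without changing the `recommend` interface.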

User interface: A user-friendly interface is required for users to interact with the system and provide feedback on the recommended music. The interface should be easy to navigate, visually appealing, and provide relevant information about the recommended music.

C. Design & Problem Statement

- To detect a face from a given input image or video

- Extract facial features such as eyes, nose, and mouth from the detected face

- Classify facial expressions into categories such as happiness, anger, sadness, fear, disgust, and surprise.

Face detection is a special case of object detection. It can also involve illumination compensation algorithms and morphological operations to preserve the face region of the input image.

D. Proposed Work

- Getting data:

  - We used the FER-2013 dataset, which contains 48×48-pixel grayscale images of faces along with their emotion labels.

- Preprocessing and reshaping data:

  - The dataset stores each image's pixel values as a string; we convert these strings into numbers.

  - For training, the data must be converted into a 4D tensor (samples × height × width × channels).

- Building the facial emotion detection model using a CNN:

  - We created blocks of Conv2D and max-pooling layers, stacked them together, and used a dense layer at the end for the output.

- Training the model:

  - The CNN model was trained on the FER-2013 dataset.

- Testing the model:

  - We tested the model in real time using face detection.
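The preprocessing steps above (parsing pixel strings and reshaping them into a 4D tensor) can be sketched with NumPy alone. This assumes the commonly distributed `fer2013.csv` layout, in which each row has an integer `emotion` label (0 to 6) and a `pixels` string of 2,304 space-separated values; the helper names are hypothetical, and the one-hot encoding assumes a categorical cross-entropy loss.

```python
import numpy as np

# Class order of the integer labels in fer2013.csv (0-6).
EMOTIONS = ["angry", "disgust", "fear", "happy", "sad", "surprise", "neutral"]


def pixels_to_tensor(pixel_strings: list[str], size: int = 48) -> np.ndarray:
    """Convert space-separated pixel strings into a (N, size, size, 1) tensor in [0, 1]."""
    images = np.array(
        [np.fromiter(map(float, s.split()), dtype=np.float32) for s in pixel_strings]
    )
    # (samples, height, width, channels): the layout Keras Conv2D expects by default.
    return images.reshape(-1, size, size, 1) / 255.0


def one_hot(labels: list[int], num_classes: int = len(EMOTIONS)) -> np.ndarray:
    """Turn integer emotion labels into one-hot vectors."""
    return np.eye(num_classes, dtype=np.float32)[np.asarray(labels)]
```

If pandas is available, `pd.read_csv("fer2013.csv")` yields `pixels` and `emotion` columns that can be passed to these helpers as lists.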

II. METHODOLOGY

A. Approach

- We used the FER-2013 dataset.

- It consists of 35,887 grayscale images at 48×48 resolution.

- Each image contains a human face.

- Every image is labeled with one of seven emotions: angry, disgust, happy, sad, surprise, fear, and neutral.

- The project is implemented in Python.

- Python libraries used:

  - OpenCV: image transformations such as converting images to grayscale.

  - NumPy: a wide variety of mathematical operations on arrays.

  - TensorFlow Keras (tf.keras): a neural network API for Python, tightly integrated with TensorFlow, used to build the machine learning models.
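The CNN described in the Proposed Work (Conv2D and max-pooling blocks stacked together, with a dense output layer) can be sketched in tf.keras as follows. The paper does not give its exact architecture, so the number of blocks, filter counts, dropout rates, and optimizer here are illustrative assumptions rather than the authors' configuration.

```python
from tensorflow import keras
from tensorflow.keras import layers


def build_fer_cnn(num_classes: int = 7, input_shape=(48, 48, 1)) -> keras.Model:
    """Stack Conv2D + MaxPooling blocks, then dense layers for classification."""
    model = keras.Sequential([keras.Input(shape=input_shape)])
    # Three convolutional blocks; each halves the spatial size (48 -> 24 -> 12 -> 6).
    for filters in (32, 64, 128):
        model.add(layers.Conv2D(filters, 3, padding="same", activation="relu"))
        model.add(layers.Conv2D(filters, 3, padding="same", activation="relu"))
        model.add(layers.MaxPooling2D(2))
        model.add(layers.Dropout(0.25))
    model.add(layers.Flatten())
    model.add(layers.Dense(256, activation="relu"))
    model.add(layers.Dropout(0.5))
    # One softmax output per emotion class.
    model.add(layers.Dense(num_classes, activation="softmax"))
    model.compile(optimizer="adam", loss="categorical_crossentropy", metrics=["accuracy"])
    return model
```

Training then follows the usual Keras pattern, for example `model.fit(x_train, y_train, validation_split=0.1, epochs=30, batch_size=64)`, where the epoch count and batch size are illustrative values, not the authors' settings.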

B. Platform and Technology


Platform: The system can be developed as a web application or a mobile application. A web application can be accessed through a web browser, while a mobile application can be downloaded from app stores and installed on smartphones or tablets.

Programming languages: The system can be developed using various programming languages such as Python, Java, and JavaScript.

Emotion detection technology: The system can utilize different emotion detection technologies such as facial expression recognition, voice tone analysis, and speech pattern analysis. Such detectors are commonly built with libraries like OpenCV (computer vision) and TensorFlow or PyTorch (deep learning).

Music dataset: The system can use music datasets from various sources, such as Spotify, Apple Music, or Deezer. The music dataset should be regularly updated to ensure a fresh and relevant music selection.

Deep learning model: The system can use various deep learning models such as Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), and Transformer Networks. Popular deep learning libraries include TensorFlow, Keras, and PyTorch.

User interface: The system's user interface can be developed using various front-end technologies such as HTML, CSS, and JavaScript. Popular front-end frameworks include React, Angular, and Vue.

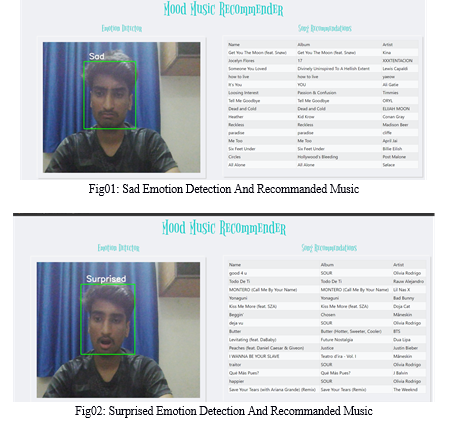

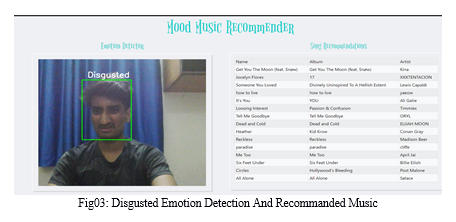

III. OUTPUT

A. Challenges

- Data Collection and Preprocessing: Collecting and preprocessing data for emotion detection can be a challenging task. Emotion detection requires large amounts of annotated data, which can be difficult to obtain. Moreover, the data must be preprocessed to ensure its quality and consistency.

- Emotion Detection Accuracy: Emotion detection technology can have limitations in accurately detecting the user's emotional state, especially when dealing with complex emotions or nonverbal cues. Improving the accuracy of emotion detection requires constant training and updating of the deep learning models.

- Music Dataset Selection: Choosing a relevant and diverse music dataset can be challenging. The music dataset must reflect various genres and be regularly updated to provide a fresh and relevant music selection.

- Personalization and Adaptability: Providing a personalized and adaptable music recommendation requires the system to be able to detect changes in the user's emotional state and adjust the music recommendations accordingly. This requires real-time processing capability, which can be challenging to implement.

- User Feedback: Obtaining user feedback on the recommended music is essential for improving the system's effectiveness. However, users may provide subjective and inconsistent feedback, making it challenging to evaluate the system's performance accurately.

- Security and Privacy: Emotion detection technology requires access to the user's personal information, which raises security and privacy concerns. The system must be designed with strong security and privacy measures to protect the user's data and prevent unauthorized access.

- Integration with Music Platforms: Integrating the system with popular music platforms such as Spotify and Apple Music can be challenging due to differences in APIs and data structures. The system must be designed to integrate seamlessly with various music platforms to provide a comprehensive music recommendation service.
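The Personalization and Adaptability challenge requires detecting genuine mood changes without reacting to every noisy per-frame prediction. One possible approach, not described in the paper, is a sliding-window majority vote: the recommendations change only when a new emotion dominates the most recent frames. The `MoodTracker` class is hypothetical, and the window size and threshold are illustrative assumptions.

```python
from collections import Counter, deque


class MoodTracker:
    """Smooth per-frame emotion predictions and report when the mood changes."""

    def __init__(self, window: int = 15, min_share: float = 0.6):
        self.history = deque(maxlen=window)  # most recent frame labels
        self.min_share = min_share           # fraction of frames needed to accept a mood
        self.current = None                  # mood currently driving recommendations

    def update(self, label: str) -> bool:
        """Add one frame's prediction; return True if the stable mood changed."""
        self.history.append(label)
        if len(self.history) < self.history.maxlen:
            return False  # not enough evidence yet
        mood, count = Counter(self.history).most_common(1)[0]
        if count / len(self.history) >= self.min_share and mood != self.current:
            self.current = mood
            return True
        return False
```

When `update` returns `True`, the application would request new recommendations for `tracker.current`; larger windows react more slowly but flicker less.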

IV. ACKNOWLEDGEMENT

We would like to express our sincere gratitude to the developers and researchers who created the emotion detection technology used to generate personalized mood music recommendations. The ability to analyze our emotional state and tailor music recommendations accordingly has enhanced our listening experience and provided us with a unique and enjoyable way to manage our moods.

We would also like to thank the team who developed the music recommendation system itself. The system has successfully utilized the emotional data provided by the emotion detection technology to suggest songs and playlists that align with our current emotional state, providing us with an immersive and personalized listening experience.

Finally, we would like to express our appreciation to the music streaming service that implemented this technology. Their dedication to providing their customers with the best possible listening experience has resulted in the creation of an innovative and highly effective system that we have found to be extremely beneficial.

Thank you all for your hard work and dedication to creating technology that enriches our lives.

Conclusion

1) In conclusion, a mood music recommendation system using emotion detection technology has the potential to provide personalized and adaptable music recommendations based on the user's emotional state. However, developing such a system involves various challenges: data collection and preprocessing, emotion detection accuracy, music dataset selection, personalization and adaptability, user feedback, security and privacy, and integration with music platforms.

2) In this project, we preprocessed the data and trained and tested a model, which is sufficient for classifying emotions.

3) Our model achieves an accuracy of around 70%, which is not yet good enough.

4) Accuracy could be improved further by using pre-trained models such as VGG-16 or ResNet.
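Using a pre-trained model such as VGG-16 usually means transfer learning: reuse the pre-trained convolutional layers and train a new classification head on FER-2013. The sketch below assumes Keras' built-in `keras.applications.VGG16`; it is not something the authors implemented, and the frozen backbone, pooling head, and dropout rate are illustrative choices. Because VGG-16 expects three colour channels, the 48×48 grayscale faces would first be repeated across channels (for example with `np.repeat(x, 3, axis=-1)`) and, when using ImageNet weights, passed through `keras.applications.vgg16.preprocess_input`, which expects pixel values in the 0-255 range.

```python
from tensorflow import keras
from tensorflow.keras import layers


def build_vgg16_classifier(num_classes=7, weights="imagenet"):
    """FER classifier on top of a frozen VGG-16 backbone (transfer learning)."""
    inputs = keras.Input(shape=(48, 48, 3))
    # include_top=False drops VGG-16's ImageNet classifier; 48x48 is above the
    # 32x32 minimum input size. weights="imagenet" downloads weights on first use.
    base = keras.applications.VGG16(
        include_top=False, weights=weights, input_shape=(48, 48, 3)
    )
    base.trainable = False  # keep pre-trained features fixed; only the head trains
    x = base(inputs, training=False)
    x = layers.GlobalAveragePooling2D()(x)
    x = layers.Dropout(0.3)(x)
    outputs = layers.Dense(num_classes, activation="softmax")(x)
    model = keras.Model(inputs, outputs)
    model.compile(optimizer="adam", loss="categorical_crossentropy", metrics=["accuracy"])
    return model
```

After the new head converges, some of the top VGG-16 layers could be unfrozen and fine-tuned with a small learning rate; a ResNet backbone (`keras.applications.ResNet50`) could be swapped in the same way.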


Copyright

Copyright © 2023 Rugwed Nand, Devkinandan Jagtap, Vedant Achole, Atharva Jadhav, Prof. Prranjali Jadhav. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET52385

Publish Date : 2023-05-17

ISSN : 2321-9653

Publisher Name : IJRASET
