Ijraset Journal For Research in Applied Science and Engineering Technology

Music Player Using Facial Expression

Authors: Dr. M. Srinivas, Harshini, Mayuka, Himani

DOI Link: https://doi.org/10.22214/ijraset.2022.44513

Abstract

Music plays a significant role in people's daily lives and in emerging technologies. Conventionally, users must search manually through the song list of a music player to find a song. This paper introduces an efficient and accurate model that generates a playlist based on the user's current emotional state and behaviour. Existing methods for automated playlist generation are computationally inefficient and inaccurate, and may require additional hardware such as EEG devices or other sensors. Speech is the most primitive and natural way of communicating feelings, emotions, and mood, but its processing is computationally intensive, time-consuming, and expensive. The proposed system uses real-time facial emotion extraction as well as audio feature extraction.

I. INTRODUCTION

Music is an important source of enjoyment for listeners and music lovers alike, and it plays a significant role in uplifting an individual's life. With the increasing advancements in multimedia and technology, many music players are available today with features such as fast forward, rewind, variable playback speed, genre segregation, streaming playback with multicast streams, and volume control. These features fulfil users' basic needs, but users still have to search the song library actively and select songs that suit their current mood and behaviour.

A facial expression based music player is a novel concept that plays songs automatically based on the user's feelings. Its goal is to play the user's favourite music according to the emotion detected. In current systems, the user must select songs manually, because randomly played songs may not match the user's mood: the user has to sort songs into emotional categories and then manually choose a song for a particular emotion before playing it.

In the proposed player, music is played from predefined folders based on the detected emotion. Each subdirectory corresponds to one emotion and holds a number of songs. The programmer can change, replace, or delete the songs in these subfolders according to the user's requirements. At times, a user may want to listen to different songs in a particular mood. For example, if a user is sad, it is entirely their choice which kind of song to play. In this scenario, there are two possibilities:

- The user wants to stay in their present emotion of sadness.

- The user wants to lift their mood, turning it into happiness.

Therefore, the songs in the subdirectories can be changed according to each user's choice, and the program continues to run on the system without modification.
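The folder-per-emotion layout and the two choices above can be sketched in Python as follows. This is a minimal illustration under stated assumptions: the folder names, the `UPLIFT` mapping, the `.mp3`/`.wav` filter, and the `pick_song` helper are hypothetical and are not taken from the authors' implementation.

```python
import random
from pathlib import Path
from typing import Optional

# Hypothetical layout: one subdirectory per emotion under a music root,
# e.g. music/happy/, music/sad/, music/angry/, music/neutral/.
EMOTIONS = ("happy", "sad", "angry", "neutral")

# Assumed "lift my mood" mapping, used when the user does not want to stay
# in the detected emotion (illustrative only; the paper does not define it).
UPLIFT = {"sad": "happy", "angry": "neutral", "neutral": "happy", "happy": "happy"}

AUDIO_SUFFIXES = {".mp3", ".wav"}


def pick_song(music_root: Path, emotion: str, uplift: bool = False,
              rng: Optional[random.Random] = None) -> Optional[Path]:
    """Return a random audio file from the folder chosen for `emotion`.

    uplift=False keeps the user in the detected emotion; uplift=True plays
    from the UPLIFT folder instead. Returns None if there are no songs.
    """
    if emotion not in EMOTIONS:
        raise ValueError(f"unknown emotion: {emotion!r}")
    folder = music_root / (UPLIFT[emotion] if uplift else emotion)
    if not folder.is_dir():
        return None
    # Sorting gives a stable candidate list; non-audio files are ignored.
    songs = sorted(p for p in folder.iterdir() if p.suffix.lower() in AUDIO_SUFFIXES)
    if not songs:
        return None
    return (rng or random.Random()).choice(songs)
```

For a sad user, `pick_song(Path("music"), "sad")` keeps the sad mood, while `pick_song(Path("music"), "sad", uplift=True)` draws from the happy folder. Because the songs are read from disk on every call, replacing files in a subfolder changes what is played without touching the code.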

II. LITERATURE REVIEW

Many applications on the market allow users to create playlists in a music player, and a variety of techniques and approaches have been proposed for doing so using the user's emotions. However, they focus on only a few fundamental emotions and rely on complex methods such as the Viola-Jones detector.

Several publications that give a brief overview of the concept are summarized below:

1. Music is extremely significant in human culture as well as in modern technology. Typically, users bear the burden of manually searching through a playlist to select a song. This work generates a list of songs based on the user's present emotion using an effective and precise algorithm. Existing methods for automated playlist generation are computationally inefficient and inaccurate, and may require additional hardware such as EEG devices or other sensors. Speech is the most ancient and natural way of conveying sentiment and mood, but its processing is computationally intensive. The proposed system identifies the user's facial expressions and extracts facial landmarks from them, which are then classified to identify the user's present mood. Once the emotion has been recognized, the user is presented with songs that fit it. The Facial Expression Based Music Player is an app for music lovers with smartphones and internet access. It is accessible to everyone who creates a profile on the system, and it fulfils the following requirements:

a. Signing up for an account and logging in

b. Adding music

c. Removing songs

d. Keeping the music library up to date

e. Personalized recommendations and playlists

f. Automatic emotion capture, which reduces computation cost

2. An intelligent agent sorts a music collection according to the emotion each song conveys and recommends a playlist that matches the user's mood. The user's local music collection is first clustered by the emotion conveyed by each song, i.e., the song's mood, which is assessed from both the song's lyrics and its audio. When users want a mood-based playlist, they take a photo of themselves at that moment. Face detection and emotion recognition techniques are applied to this photo to identify the user's sentiment, and the user is then given a playlist of music that best suits this emotion.

3. Stress levels are rising because of factors such as a poor economy and financial responsibilities. Listening to music can be a beneficial way to reduce stress, but it is ineffective if the music does not match the listener's current emotion. Furthermore, no existing music player picks songs based on the listener's mood.

To address this issue, this study presents a facial expression based music player that suggests songs supporting the user's emotions of sadness, happiness, neutrality, and anger. The device receives either the user's pulse from a smart band or a facial image from a mobile camera, and a classification algorithm then detects the user's sentiment. The paper discusses two classification approaches: heart rate-based and facial image-based. The device then plays music in the same mood as the user's emotion. According to the trial data, the proposed approach can precisely characterize the pleasant mood. Music is central to our way of life: people listen to it in many situations, actively or passively, consciously or unconsciously, experiencing it as a form of emotional expression.

III. METHODOLOGY

To classify the user's sentiment, the proposed system detects the user's facial expressions and extracts facial landmarks from them. Once the emotion has been identified, the user is shown songs that match it.

The Facial Expression Based Music Player is a tool for music fans who have a smartphone and internet access. The application is accessible to anyone who creates a profile on the system, and it is meant to address the following user needs:

- Signing up for an account and logging in

- Adding music

- Removing songs

- Updating the music library

- Recommendations and a personalized playlist

- Emotion capture

IV. ARCHITECTURE DIAGRAM

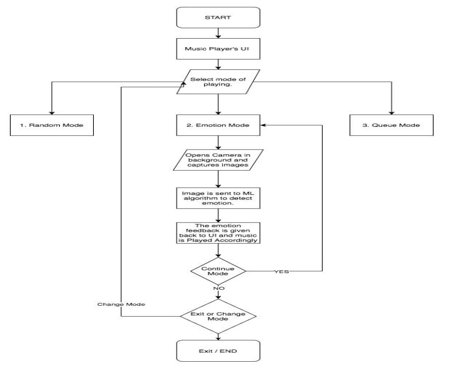

The main aim of the project is to play music according to the user's emotion, which is detected from their facial expression. This is achieved with two technologies:

- Machine learning algorithms, which detect the user's emotion from their facial expression.

- Web development tools, which are used to build the UI (frontend) of the music player.

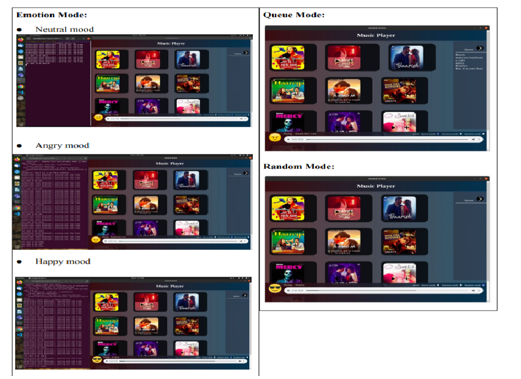

The music player opens with an interactive UI and offers three modes for the user to choose from:

- Random mode

- Emotion mode

- Queue mode

In Random mode, music is played randomly, irrespective of emotion. In Queue mode, music is played in the order of a playlist defined by the user. The main feature of the project is Emotion mode, in which music is played according to the user's emotion. As soon as the user selects Emotion mode, the back end accesses the device's camera, captures an image of the user with Python's OpenCV library, and sends it to the emotion-analysis algorithm. The image is converted to grayscale, resized, and passed to a trained Fisherface model (described under Fisherface Algorithm below), which classifies the emotion as Neutral, Happy, Sad, or Angry. This is the working methodology of the back end we designed.

The user interface of the music player is built with HTML, CSS, and JavaScript. It is interactive and lets users choose among the three modes. When the user selects Emotion mode, the camera captures the user's image and sends it to the back-end algorithm; the detected emotion is then retrieved from the back end and music is played accordingly.
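The three modes can be sketched as a small dispatcher. This is a hypothetical Python illustration, not the authors' code: the `Player` class, its in-memory `library` dictionary, and the `detect_emotion` callback (standing in for the camera capture and Fisherface back end) are all assumptions.

```python
import random
from collections import deque
from typing import Callable, Deque, Dict, List, Optional


class Player:
    """Hypothetical sketch of the Random, Emotion, and Queue playback modes."""

    def __init__(self, library: Dict[str, List[str]],
                 detect_emotion: Callable[[], str],
                 rng: Optional[random.Random] = None) -> None:
        self.library = library                # emotion label -> song titles
        self.detect_emotion = detect_emotion  # stands in for camera + Fisherface back end
        self.queue: Deque[str] = deque()      # user-defined playlist for Queue mode
        self.rng = rng or random.Random()

    def next_song(self, mode: str) -> Optional[str]:
        if mode == "random":
            # Any song from the library, irrespective of emotion.
            songs = [s for group in self.library.values() for s in group]
        elif mode == "queue":
            # Songs in the order the user queued them.
            return self.queue.popleft() if self.queue else None
        elif mode == "emotion":
            # Ask the back end for the current emotion, then pick from that category.
            songs = self.library.get(self.detect_emotion(), [])
        else:
            raise ValueError(f"unknown mode: {mode!r}")
        return self.rng.choice(songs) if songs else None
```

Passing `detect_emotion` in as a callable keeps the mode logic independent of how the emotion is obtained, which mirrors the split between the HTML/CSS/JS front end and the Python back end.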

A. Modules Implemented

Expression Detection and Recognition

This part contains the following modules:

- Image Capture

- Emotion Detection

- Training

All of these work together to detect the user's mood, display a corresponding emoji on the music player, and play songs that match the user's emotion.

Recommendation Module

The system detects human emotions and classifies them using an SVM (support vector machine).

- Neutral mood

B. Fisherface Algorithm

The captured image is first converted to grayscale and resized so that the trained model can predict the facial expression. The trained model, stored as an XML file, is then loaded into the Fisherface module, which predicts the user's emotion. Fisherface combines principal component analysis with Fisher's linear discriminant analysis: during training, it reduces the dimensionality of the image data by computing statistics with respect to the given emotion categories, and it stores the resulting numeric values in the XML file. During prediction, it computes the same projection for the new image, compares it with the stored values of the training set, and returns the closest matching category together with a confidence value. The emotion is predicted as one of Neutral, Happy, Sad, or Angry.
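The Fisherface steps can be illustrated with a short NumPy sketch. The authors load a trained model from an XML file via OpenCV (OpenCV's contrib `face` module provides a Fisherface recognizer for this); the code below instead reimplements the core idea directly so that it is self-contained: luminance-weighted grayscale conversion with nearest-neighbour resizing, PCA followed by Fisher's linear discriminant analysis, and nearest-neighbour matching in the projected space. The 48×48 image size and the names `preprocess` and `Fisherface` are assumptions for illustration, and the returned distance plays the role of the confidence value (smaller means a closer match).

```python
import numpy as np

SIZE = (48, 48)  # assumed (height, width) after resizing


def preprocess(frame: np.ndarray, size=SIZE) -> np.ndarray:
    """Grayscale + nearest-neighbour resize, flattened to a 1-D feature vector."""
    if frame.ndim == 3:
        # OpenCV frames are BGR; use ITU-R BT.601 luma weights.
        frame = 0.114 * frame[..., 0] + 0.587 * frame[..., 1] + 0.299 * frame[..., 2]
    h, w = frame.shape
    rows = np.arange(size[0]) * h // size[0]
    cols = np.arange(size[1]) * w // size[1]
    return frame[np.ix_(rows, cols)].astype(np.float64).ravel()


class Fisherface:
    """PCA down to at most (n - c) dimensions, then LDA down to (c - 1)."""

    def fit(self, X: np.ndarray, y) -> "Fisherface":
        y = np.asarray(y)
        classes = np.unique(y)
        n, c = X.shape[0], len(classes)
        self.mean = X.mean(axis=0)
        Xc = X - self.mean
        # PCA keeps at most n - c components so the within-class scatter
        # matrix in the reduced space is (generically) invertible.
        _, _, vt = np.linalg.svd(Xc, full_matrices=False)
        w_pca = vt[: n - c].T
        Z = Xc @ w_pca
        # LDA: between-class (Sb) and within-class (Sw) scatter in PCA space.
        mu = Z.mean(axis=0)
        k = Z.shape[1]
        Sw = np.zeros((k, k))
        Sb = np.zeros((k, k))
        for label in classes:
            Zk = Z[y == label]
            mk = Zk.mean(axis=0)
            Sw += (Zk - mk).T @ (Zk - mk)
            d = (mk - mu)[:, None]
            Sb += len(Zk) * (d @ d.T)
        # Directions maximising between- vs within-class scatter:
        # the top c - 1 eigenvectors of Sw^-1 Sb.
        evals, evecs = np.linalg.eig(np.linalg.solve(Sw, Sb))
        order = np.argsort(-evals.real)[: c - 1]
        self.W = w_pca @ evecs[:, order].real
        # Keep projected training samples for nearest-neighbour prediction.
        self.proj = Xc @ self.W
        self.labels = y
        return self

    def predict(self, x: np.ndarray):
        """Return (label, distance); a smaller distance means a closer match."""
        p = (x - self.mean) @ self.W
        dists = np.linalg.norm(self.proj - p, axis=1)
        i = int(np.argmin(dists))
        return self.labels[i], float(dists[i])
```

In use, each training photo would go through `preprocess`, the vectors would be stacked into `X`, and each row would be paired with an integer label under some encoding such as 0 = Neutral, 1 = Happy, 2 = Sad, 3 = Angry (a hypothetical choice).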

V. RESULTS

Conclusion

Facial expression detection is a challenging problem in image analysis and computer vision that has attracted a lot of interest in recent years because of its wide range of applications. We built a music player with three modes: Emotion, Random, and Queue. Its central feature, Emotion mode, plays music according to the user's mood. We aimed for a user-centric system that is easy to operate, and we developed a front-end user interface so that the player can be operated on any system. Expression recognition research can be explored and improved further. Despite the small dataset, the Fisherface classifier we trained achieves 91 percent accuracy. In future work, we will widen the range of emotions by incorporating more datasets; emotions can be recognized more accurately when a dataset with more photos is used. This would also help us expand our song catalogue and improve song matching based on the user's preferences.

The project can be further enhanced by adding emotions such as disgust, fear, dissatisfaction, and confusion, which requires a larger dataset. Authorization of each registered user can also be strengthened by training on a larger dataset, reducing the chance of incorrect analysis. By analysing a particular person's emotions across various situations, a hypothesis about their mental health could be formed for use in medical diagnosis and psychological experiments. The player could also be personalized for each user based on their likes and dislikes of songs.

Copyright

Copyright © 2022 Dr. M. Srinivas, Harshini, Mayuka, Himani. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET44513

Publish Date : 2022-06-18

ISSN : 2321-9653

Publisher Name : IJRASET
