Ijraset Journal For Research in Applied Science and Engineering Technology

OCR Studymate

Authors: Kavita Pathak, Shreya Saraf, Sayali Wagh, Dr. Saroja Vishwanath

DOI Link: https://doi.org/10.22214/ijraset.2022.41103

Abstract

The world has many languages, and many of us are not even aware of most of them, but globalization and digitalization have exposed us to more of them than ever. During colonization, English became an integral part of many people's speech, and it remains dominant: it is inarguably one of the most prominent languages spoken worldwide. Yet many people across the world are not familiar with English and face difficulties when most texts are written in it. India, for instance, has more than 121 languages, and every state has its own dialects, so people who are less familiar with English, or with any other language they do not know, struggle with such texts. Many applications and systems already exist to help people from a vernacular medium or whose native language is not English, which is commendable. However, most existing systems provide a separate application for each step: one for capturing a text image through the camera, one for scanning the text with Optical Character Recognition (OCR), and another for translation through a translation API. This can be overwhelming for someone who is not comfortable with mobile devices, particularly people above the age of 60. Users expect an application to be easy to use, simple to reach, and able to do everything in one go. The application we propose gives people a simple way to translate an unfamiliar language (specifically English) into their native language or another language they know.
The mobile application captures text-based images of important messages or real-world documents that need to be translated. Once the image is captured, the text is extracted and its language is identified at the same time. The text is then translated into the user's chosen language (more than 50 languages are available) using Google translation, which is the translation API we have used, and the result is displayed on the screen.

I. INTRODUCTION

In the real world there are many significant messages and important documents, including historical, geographical, and scientific papers, that could be useful to us. Unfortunately, most of these documents are written in different languages. The language depends on many factors: it could be the language of the host country, the author's mother tongue, or simply a language the author was familiar with.

If the messages in these documents are useful and significant but unreachable just because of a language barrier, the impact can be large: we miss important messages that would have been accessible had the document been written in a language known to everyone. That scenario is practically impossible, because so many languages are spoken around the world that no one can know them all.

Researchers have therefore found that recognizing and translating text is an important process, especially today, when everything is becoming digital and information is stored and retrieved online.

To overcome these linguistic barriers, we have developed “OCR STUDYMATE”, an Android-based application that uses Optical Character Recognition, a machine learning (ML) technique. The main purpose of the application is to help people translate an unfamiliar language into their native language or another language they are familiar with.

It captures a text-based image, extracts the text, and then translates it into other languages using the ML Kit translator.

II. PROBLEM STATEMENT

With the advancement of technology, the world is considered "connected". Even so, communication across the world often runs into linguistic barriers. When traveling to another country or studying a particular subject, the need to instantly understand unknown text and translate it into one's preferred language is evident. A tool is therefore required that helps users extract unknown text from images, including handwritten text, for ease of global connectivity.

OCR (Optical Character Recognition) is considered a prominent problem in machine learning and artificial intelligence. While most people assume it is a simple problem, the difficulty arises when the data is imprecise and unregulated, as in handwritten text recognition. To overcome these issues, we propose a one-stop solution that uses OCR technology not only to extract text but also to identify its language, after which the user can translate it into their preferred language for further understanding.

III. LITERATURE REVIEW

1. “Extraction of Text from an Image and its Language Translation Using OCR” by G.R.Hemalakshmi, M.Sakthimanimala, J.Salai Ani Muthu:

This paper discusses the use of optical character recognition to translate text from one language into another. It presents a simple, efficient, and low-cost approach to constructing an OCR system for reading any document that has a fixed font size and style or a handwritten style. Traditional OCR methods are used.

2. “Multilingual Speech and Text Recognition and Translation using Image” by Sagar Patil, Mayuri Phone, Siddharth Prajapati, Saranga Rahane, Anita Lahane:

The paper presents a simple application that aims to perform translation, text-to-speech conversion, speech recognition, and text extraction. It proposes a system for users facing language barriers, and the interface is kept minimal.

3. “Design of an Optical Character Recognition System for Camera based Handheld Devices” by Ayatullah Faruk Mollah, Nabamita Majumder, Subhadip Basu and Mita Nasipuri:

This study reports a recognition accuracy of 92.74%, which it considers good enough when compared with Tesseract. The analysis shows that the proposed recognition system is computationally efficient, making it suitable for low-resource devices such as mobile phones.

4. "Text Extraction Approach towards Document Image Organisation for the Android Platform" by M. Madhuram, and Aruna Parameswaran:

This paper proposes a simple method for content-based categorization of document images using a Bag-of-Words model with an objective to provide an automated interface for performing document image processing, text extraction, and organization of relevant information.

5. "Detecting text based image with optical character recognition for English translation and speech using Android" by Sathiapriya Ramiah, Tan Yu Liong and Manoj Jayabalan:

In this paper, a system is developed to assist tourists in overcoming linguistic barriers while visiting another country where different languages are used to portray information.

6. "LANGUAGE IDENTIFICATION USING ML KIT" by Koushik Modekurti and K. N. V. R. S. Sai Kiran:

This research proposes a system which uses Google Firebase’s Machine Learning (ML) Kit’s Language Identification Application Program Interface (API) for the process of Language Identification.

IV. PROPOSED SYSTEM

Our proposed system allows the user to capture a text-based image (the base language is English) and then shows the translated text in the user's chosen language (the application offers more than 50 languages to select from). After the image is captured, the text is extracted and its language is identified. The translator then converts the text into the user's preferred language, and the result is displayed on the screen.
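The flow described above (extract, identify, translate, display) can be sketched as a small pipeline. This is only an illustrative Python sketch, not the application's Android code: `run_pipeline`, `TranslationResult`, and the three `stub_*` functions are hypothetical names, and the stubs merely stand in for the OCR, language identification, and translation services.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class TranslationResult:
    source_text: str
    source_language: str
    target_language: str
    translated_text: str


def run_pipeline(
    image: bytes,
    recognize_text: Callable[[bytes], str],
    identify_language: Callable[[str], str],
    translate: Callable[[str, str, str], str],
    target_language: str,
) -> TranslationResult:
    """Extract text, identify its language, then translate it."""
    text = recognize_text(image).strip()
    if not text:
        raise ValueError("no text found in image")
    source_language = identify_language(text)
    if source_language == target_language:
        translated = text  # nothing to translate
    else:
        translated = translate(text, source_language, target_language)
    return TranslationResult(text, source_language, target_language, translated)


# Hypothetical stand-ins so the sketch runs without any OCR or translation service.
def stub_recognize(image: bytes) -> str:
    return image.decode("utf-8")  # pretend the image bytes are the text itself


def stub_identify(text: str) -> str:
    return "en"  # the paper's base language is English


def stub_translate(text: str, source: str, target: str) -> str:
    return f"[{source}->{target}] {text}"  # marks the text instead of translating


if __name__ == "__main__":
    print(run_pipeline(b"Mountain Nature", stub_recognize, stub_identify, stub_translate, "tr"))
```

Passing the three stages in as functions keeps the order of the steps in one place, so a real recognizer or translator could be substituted without changing the flow.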

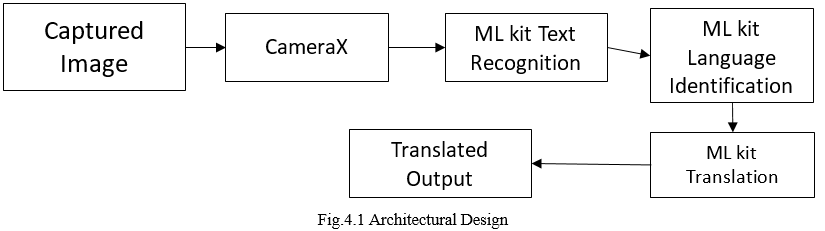

- Figure 4.1 shows that input is taken from the device's camera. The first step pre-processes the image and converts it into a format suitable for later processing. This can be done using the CameraX library.

- The scanned image can be unstructured and noisy, so it is enhanced by removing noise and then converting the image to binary format. Noise removal increases the accuracy of text recognition.

- After pre-processing, the individual characters are separated by the segmentation step. The feature extraction step then retrieves the significant data from the raw, unrefined input.

- The app uses the ML Kit Text Recognition on-device API to recognize text from the real-time camera feed, and the ML Kit Language Identification API to identify the language of the recognized text. Finally, the ML Kit Translation API translates the text into the user's preferred language, chosen from the more than 50 languages available in the system.

- The translated words are the output of the system and are shown in the UI.
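The noise removal, binarization, and segmentation steps listed above can be illustrated on a tiny grayscale grid. The paper does not state which filter, threshold, or segmentation method is used, so the 3x3 median filter, the fixed threshold of 128, and the column-projection segmentation below are assumptions chosen for illustration, and all function names are hypothetical.

```python
from typing import List, Tuple

Image = List[List[int]]  # grayscale rows, 0 (black) to 255 (white)


def median_filter(img: Image) -> Image:
    """3x3 median filter; border pixels are copied unchanged."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            window = sorted(
                img[y + dy][x + dx] for dy in (-1, 0, 1) for dx in (-1, 0, 1)
            )
            out[y][x] = window[4]  # middle of the 9 sorted values
    return out


def binarize(img: Image, threshold: int = 128) -> Image:
    """Map dark pixels to 1 (ink) and light pixels to 0 (background)."""
    return [[1 if p < threshold else 0 for p in row] for row in img]


def segment_columns(binary: Image) -> List[Tuple[int, int]]:
    """Return (start, end) column ranges, end exclusive, that contain ink.

    Runs of ink columns are split wherever a column has no ink at all.
    """
    w = len(binary[0])
    has_ink = [any(row[x] for row in binary) for x in range(w)]
    segments: List[Tuple[int, int]] = []
    start = None
    for x, ink in enumerate(has_ink):
        if ink and start is None:
            start = x
        elif not ink and start is not None:
            segments.append((start, x))
            start = None
    if start is not None:
        segments.append((start, w))
    return segments
```

In this sketch an isolated dark speck between two characters would be reported as a segment of its own if the image were binarized directly; running `median_filter` first removes it, which is one way to see why noise removal helps the later recognition step.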

V. PROPOSED FEATURES

- Text Extraction – Users can scan both printed and handwritten text from images.

- Language Identification – Users can identify the language of the scanned text.

- Text Translation – Users can translate the extracted text to their preferred language.

VI. RESULT DISCUSSION

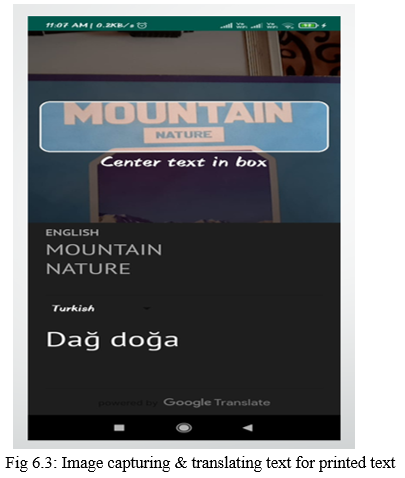

The UI of the system is kept simple and clean so that it can be understood by users of any age group, including those who are not familiar with technology. We analyzed the system in two circumstances: on printed text and on handwritten text.

A. Printed Text

In the first screen, the user grants ML Kit permission to take pictures and record video.

In the second screen, we can see the main UI of the system, which has two sections. The first section holds the captured picture, and the second section shows the translated text.

In the third screen, we can see that the image containing the text “Mountain Nature” has been captured in the first section. The second section shows the default source language, English, and the translation in Turkish. The translated text is “Dağ doğa”.

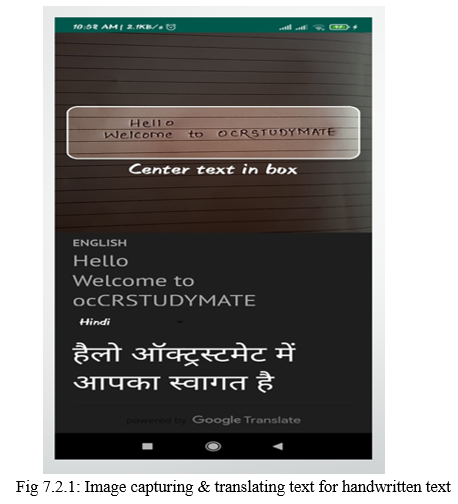

B. Handwritten Text

The first two screens are the same as those for printed text. The only difference is that we tested the system on handwritten text instead of printed text.

VII. ACKNOWLEDGEMENT

We sincerely thank our project guide, Dr. Saroja Vishwanath, for her encouraging and inspiring guidance, which helped us make our project a success. With her expert guidance, kind advice, and timely motivation, she made sure we were on track at all times and helped us define our project. We thank our project coordinator, Prof. Reena Deshmukh, for all the support she provided for our project. We also express our deepest thanks to our HOD, Dr. Uttara Gogate, whose benevolence made the computer facilities in our laboratory available to us and made the project a true success. Without this kind and keen co-operation, our project would have come to a standstill. Lastly, we thank our college principal, Dr. Pramod Rodge, for providing lab facilities and permitting us to go on with our project. We would also like to thank our colleagues who helped us directly or indirectly during our project.

Conclusion

With rapidly advancing technology, everything is going online, especially during the unprecedented COVID-19 period. Many surveys have reported a sudden surge in the time people of every age group spend online. Everything is remotely accessible to anyone across the globe at their fingertips. Documents, from trivial to the most important, are likewise preserved online through various technologies. With this surge in converting information from paper documents into digital form, recognizing text from images has become an important process. To help with this, we have proposed an app that helps preserve information, makes storage easy, and permits retrieval of information as and when required. With the help of the translator, the user is also able to translate the extracted text.

References

[1] Sahana K Adyanthaya, Text Recognition from Images: A Study: https://www.ijert.org/research/text-recognition-from-images-a-study-IJERTCONV8IS13029.pdf

[2] Sathiapriya Ramiah, Tan Yu Liong, Manoj Jayabalan, Detecting text based image with OCR for English translation and speech using android, Dec 2015: https://www.researchgate.net/publication/301443412_Detecting_text_based_image_with_optical_character_recognition_for_English_translation_and_speech_using_Android

[3] Ayatullah Faruk Mollah, Nabamita Majumder, Subhadip Basu and Mita Nasipuri, Design of an Optical Character Recognition System for Camera based Handheld Devices: https://arxiv.org/ftp/arxiv/papers/1109/1109.3317.pdf

[4] G.R.Hemalakshmi, M.Sakthimanimala, J.Salai Ani Muthu, Extraction of Text from an Image and its Language Translation Using OCR: https://www.technoarete.org/common_abstract/pdf/IJERCSE/v4/i4/Ext_14086.pdf

[5] Happy Jain, Akshata Choudhari, Mohan Sharma, Jagdeep Yadav, Krunal J. Pimple, OCR WITH LANGUAGE TRANSLATOR: https://www.ijtra.com/view/ocr-with-language-translator

[6] Shalin A. Chopra, Amit A. Ghadge, Onkar A. Padwal, Karan S. Punjabi, Prof. Gandhali S. Gurjar, Optical Character Recognition: https://ijarcce.com/wp-content/uploads/2012/03/IJARCCE2G_a_shalin_chopra_Optical.pdf

Copyright

Copyright © 2022 Kavita Pathak, Shreya Saraf, Sayali Wagh, Dr. Saroja Vishwanath. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET41103

Publish Date : 2022-03-30

ISSN : 2321-9653

Publisher Name : IJRASET
