IJRASET Journal for Research in Applied Science and Engineering Technology


Object Tracking Using HSV Values and OpenCV

Authors: Gowher Shafi, Dr. Pratik Garg

DOI Link: https://doi.org/10.22214/ijraset.2021.39238


Abstract

This research shows how colour and movement can be used to automate the recognition and tracking of objects. Video tracking is the process of locating a moving object over time using a camera. The main purpose of video tracking is to associate target objects across consecutive video frames. This association can be particularly difficult when objects move quickly relative to the frame rate. This study proposes a way to track moving objects in real time using HSV colour space values and OpenCV across video frames. We first calculate the HSV values of the object to be monitored and then track the object during the testing step. The objects were tracked with 90 percent accuracy.

I. INTRODUCTION

Surveillance is the task of remotely monitoring people's behaviour and/or activities. Security cameras are the most widely used devices for this purpose: they monitor industrial operations and traffic and help to reduce crime. Despite their widespread use, security cameras still have several drawbacks. One is that, because the cameras are fixed on mechanical hinges, they can only monitor at specified angles [1], leaving concealed regions through which the security system can be breached. Another is the reliance on human operators, who generally monitor several camera feeds at once [2]. Such operators are susceptible to boredom, fatigue and distraction, so criminal or other unwanted behaviour may go undetected. A mobile robot may be used to address these issues. A robot could roam the monitored zones freely and continuously, make its own judgements, detect unwanted behaviours or activities, and then react as needed, for example by issuing alerts.

Tracking objects with computer vision is a key part of robotic monitoring. The goal of object detection and tracking is to follow the positions of moving objects in a video feed. This can be done by identifying and monitoring a particular attribute of the moving object, such as its colour, and the technique can then trace the object's path over time. Most existing colour-tracking techniques are designed to track a fixed colour. However, as the camera moves and the surroundings change, the tracked colour feature may become less distinctive, and the tracker may end up following the wrong object. New ways of computing the colour feature based on the camera's operating environment are therefore required.

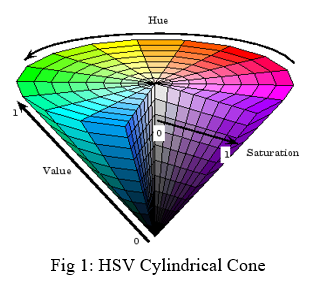

A. Hue, Saturation and Value (HSV)

Unlike RGB and CMYK, HSV is closely related to the way humans perceive colour. It has three components: hue (the tone of the colour), saturation (how far the colour is from grey) and value (its brightness). HSV and HSB are the same model; some colour pickers, such as the one in Adobe Photoshop, use the acronym HSB, which substitutes the word "brightness" for "value". The HSV model may be drawn as a cone or a cylinder, but it always has three components:

Hue: the colour component of the model, expressed as an angle from 0 to 360 degrees around the colour wheel. The primary and secondary colours sit at fixed angles, with smooth transitions between them:

- Red at 0° (and again at 360°, since hue wraps around)
- Yellow at 60°
- Green at 120°
- Cyan at 180°
- Blue at 240°
- Magenta at 300°

Saturation: how much pure colour is present relative to grey, from 0% to 100%. Reducing it towards 0 makes the colour greyer and more faded. Saturation is also often expressed on a scale from 0 to 1, where 0 is grey and 1 is the pure, dominant colour.

Value (brightness): used together with saturation, value describes the brightness or intensity of a colour, from 0 to 100 percent, where 0 is completely black and 100 is the brightest.
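The three components can be illustrated with Python's standard-library `colorsys` module. The sketch below is not the authors' code, and the helper name `rgb_to_opencv_hsv` is hypothetical. It converts an 8-bit RGB pixel to HSV and then rescales the result the way OpenCV does for 8-bit images: hue is stored as half the angle in degrees (0 to 179) so that it fits in one byte, while saturation and value are scaled to 0 to 255. In OpenCV itself a whole frame would be converted with `cv2.cvtColor(frame, cv2.COLOR_BGR2HSV)`; individual values from this sketch may differ from OpenCV's by one because of rounding.

```python
import colorsys


def rgb_to_opencv_hsv(r, g, b):
    """Convert one 8-bit RGB pixel to OpenCV-style 8-bit HSV.

    Hypothetical helper for illustration: hue is returned as degrees / 2
    (0-179) and saturation/value are scaled to 0-255, matching the ranges
    OpenCV uses for 8-bit images.
    """
    # colorsys works on floats in [0, 1] and returns h, s, v in [0, 1]
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    hue_degrees = h * 360.0
    # halve the angle; % 180 wraps a rounded 180 back to 0 (red)
    hue = int(round(hue_degrees / 2.0)) % 180
    return hue, int(round(s * 255.0)), int(round(v * 255.0))


if __name__ == "__main__":
    samples = {
        "red": (255, 0, 0),
        "yellow": (255, 255, 0),
        "green": (0, 255, 0),
        "blue": (0, 0, 255),
        "grey": (128, 128, 128),
    }
    for name, rgb in samples.items():
        print(name, rgb_to_opencv_hsv(*rgb))
```

Red, yellow, green and blue come out at OpenCV hues 0, 30, 60 and 120 (that is, 0°, 60°, 120° and 240°), and the grey pixel has zero saturation, which is why low-saturation pixels carry little reliable hue information.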

B. OpenCV

OpenCV is a library of programming functions aimed mainly at real-time computer vision, and it is widely used for image processing. It can analyse photos and videos to identify objects, people, or even a person's handwriting. When OpenCV is used from Python together with libraries such as NumPy, its image data is handled as NumPy arrays that can be processed directly. To recognise visual patterns and their features, the features are represented in a vector space and mathematical operations are performed on them.

II. PROBLEM STATEMENT

Monitoring is the task of keeping track of people's behaviour and/or activities from afar. Security cameras are the most commonly used devices, employed in industrial operations, transportation and crime prevention. Despite their widespread use, security cameras still have serious shortcomings. One is that, because the camera is fixed on mechanical hinges, it can only monitor at specific angles, and the security system can be bypassed through the concealed areas this leaves. In addition, a person must be assigned to watch the camera continuously, and if that person fails to do so, a variety of problems may occur.

A mobile robot can therefore be used to address these issues. A robot could travel around the observation areas on its own, making its own judgements when it observes potentially dangerous behaviours or activities, and then respond, for example by issuing warnings. The goal of object tracking is to follow the positions of moving objects in a video clip. This can be achieved by detecting and tracking a particular property of the moving object, such as its colour, and the technique can then trace the object's path over time. Most well-known colour-tracking systems are designed to track a fixed colour feature. However, if the camera is moving, the tracked colour feature may become less distinctive as the environment changes, and the tracker may end up following the wrong object. New methods are therefore required to determine the colour feature according to the camera's operating environment.

III. LITERATURE REVIEW

With the arrival of low-cost cameras, people have recently begun to build large-scale camera networks. This growing number of cameras opens the door to new signal-processing applications that use many sensors spread across a large area. Object tracking is the task of detecting moving objects in a video sequence and following them with the camera over time. Its main goal is to associate the target objects in successive frames based on their shape or other attributes.

Shen et al. (2013) proposed a hierarchical moving-target detection technique based on spatiotemporal saliency, in which temporal and spatial saliency information is combined to improve detection. Their results show that the approach detects moving objects in aerial video with high accuracy and efficiency and, unlike the HMI method, introduces no time delay. However, false alarms in the detected object locations remained unavoidable across video frames.

Guo et al. (2012) proposed an object detection approach for tracking objects in video frames. Their simulation results show that the technique is effective, accurate and robust for detecting generic object classes. However, further work is needed to improve classification accuracy in real-time object detection.

Ben Ayed et al. (2015) proposed a text detection method based on video frame analysis and texture. The frames are divided into blocks of a fixed size, which are analysed with the Haar wavelet transform, and a neural network classifies each block as text or non-text. However, future work should focus on removing noisy regions from the detected areas and eliminating text-like regions.

Viswanath et al. (2015) proposed panoramic background modelling, in which every visual element of the scene is modelled as a spatio-temporal Gaussian. Their simulation results indicate that moving objects can be detected with fewer false alarms. However, the method fails when the section's proper capability is not available.

Soundrapandiyan and Mouli (2015) proposed a novel adaptive approach to pedestrian detection. Foreground elements are separated from the background using the image's pixel intensities, and a high-boost filter is used to enhance the foreground edges. Subjective and objective evaluations show that the approach is successful, achieving a pedestrian detection rate of about 90% compared with other current single-image techniques. In future work, they planned to raise the detection rate and lower false positives, in line with image-sequence methods.

Ramya and Rajeswari proposed a modified frame differencing technique that classifies pixels as foreground or background using the correlation between blocks in the current image and the background image. Blocks of the current image that are strongly correlated with the background image are treated as background; the remaining blocks are classified pixel by pixel as foreground or background. Their experiments show that the modified approach improves on frame differencing in both precision and speed. However, further work should use additional block information, such as shape and edges, to improve detection accuracy.
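The block-based idea described above can be illustrated with a short sketch. It is not Ramya and Rajeswari's implementation: Pearson correlation is assumed as the block similarity measure, and the block size, correlation threshold and pixel-difference threshold are arbitrary assumed values. Images are plain 2-D lists of greyscale values so that the example runs without OpenCV.

```python
import math


def block_correlation(a, b):
    """Pearson correlation of two equal-length pixel lists, or None if undefined."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return None  # a flat block has no defined correlation
    return cov / math.sqrt(var_a * var_b)


def block_frame_difference(current, background, block=4,
                           corr_thresh=0.9, diff_thresh=30):
    """Binary foreground mask (1 = foreground) for two greyscale 2-D lists.

    Blocks strongly correlated with the background are taken as background
    as a whole; every other block falls back to pixel-wise differencing.
    """
    h, w = len(current), len(current[0])
    mask = [[0] * w for _ in range(h)]
    for by in range(0, h, block):
        for bx in range(0, w, block):
            coords = [(y, x)
                      for y in range(by, min(by + block, h))
                      for x in range(bx, min(bx + block, w))]
            cur = [current[y][x] for y, x in coords]
            bg = [background[y][x] for y, x in coords]
            r = block_correlation(cur, bg)
            if r is not None and r >= corr_thresh:
                continue  # whole block is treated as background
            for y, x in coords:
                if abs(current[y][x] - background[y][x]) > diff_thresh:
                    mask[y][x] = 1
    return mask
```

Because a uniform brightness change keeps a textured block perfectly correlated with the background, such a block is not flagged even when its pixel differences exceed `diff_thresh`; a flat block has no defined correlation and falls back to plain pixel differencing.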

Risha and Kumar (2016) proposed optical flow combined with morphological operations for video object detection, with the morphological operations used to obtain clean images of the moving objects. The experiment considered only a static camera, so future work should address moving cameras and the detection of multiple objects in video frames.

IV. OBJECTIVES

The goal of this project is to track an object accurately. The HSV values of the object relative to its surroundings are first calculated, and the same object is then tracked using these previously calculated HSV values. The work therefore uses two models: one calculates the object's HSV values and the other tracks the object. OpenCV is used to track the object.

V. METHODOLOGY

The approach used in this work has two steps.

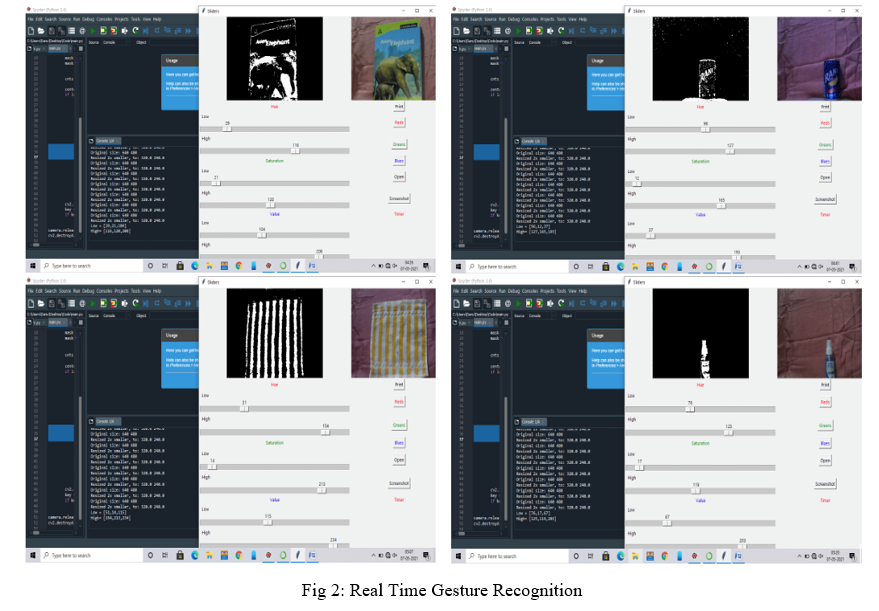

A. HSV Value Detection

This step is performed in real time: the object is held in front of the camera while the model runs, and its colour image appears on the screen. This RGB image is converted to an HSV image, from which the values are calculated. For this, we created a graphical user interface (GUI) model. Six values must be found: hue (min), hue (max), saturation (min), saturation (max), value (min) and value (max). The GUI simplifies finding these values: the sliders for all six values are adjusted until the tracked object appears white and the surrounding area turns black. The object's HSV range is determined in this way and is then used in the following tracking phase. Saturation and value bounds lie in the range 0 to 255; for 8-bit images OpenCV stores hue as half the angle in degrees, so the hue bounds lie in the range 0 to 179. The first stage is the selection of the Region of Interest (ROI), which consists of four key stages:

- Background Subtraction: To remove the background, the live RGB frames are first converted to greyscale images. Greyscale images are faster to process than colour images, so they are used as inputs to the background subtraction technique [3,4]. IABMM [6] then marks moving objects as foreground with white pixels in a binary image, while all stationary objects are assigned to the background with black pixels.

- Noise Elimination: After background subtraction, noise reduction is applied to eliminate noise caused by reflections or motion. Median filtering and binary morphological operations are used to remove it. The median filter [6] removes "salt-and-pepper" noise while preserving important detail. Noise caused by a change in the background or in lighting can cause foreground pixels to be misclassified as background, leaving gaps in the foreground. Dilation and erosion [7] reduce this noise by connecting plausible foreground regions and removing erroneous ones. In dilation, the maximum pixel value in the region overlapped by the kernel is computed and the pixel under the kernel's anchor is replaced with that maximum; erosion is the complementary operation and uses the minimum instead of the maximum. Applying dilation followed by erosion gives a morphological closing, which merges nearby bright spots into blobs and improves foreground detection (the foreground is represented by white blobs).

- Object Tracking: Object tracking starts by labelling the blobs (if any) in the binary output of the noise-elimination step using the LTCLA algorithm [8], a fast labelling technique that identifies connected components and their contours at the same time. A key feature of this approach is a contour-tracing technique that uses a tracer to find the external and internal contours of each component. Once a contour point has been found, the tracer examines its eight neighbours in clockwise order for further contour points. The next step in the object tracking stage is to decide which blob to follow; in this work, the tracked object is taken to be the largest moving blob. Although the approach could monitor several targets, the number of tracked objects was found to have a significant impact on its performance, so the method selects and follows only the largest blob.

- Behaviour Analysis: After the largest blob is selected, the final step of the ROI method is to analyse the blob's behaviour. Behavioural traits such as the object's area, centroid and speed can be calculated. To compute them, an ROI is formed by a bounding box that encloses the target object and is defined by the object's maximum width and height. The object's area is approximated by counting the number of pixels in the tracked blob.
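The Background Subtraction stage can be sketched in plain Python. The paper does not specify IABMM, so this sketch swaps in a simple running-average background model, a different and more basic technique; the class name `RunningAverageBackground` and the `alpha` and `threshold` values are assumptions. Greyscale conversion uses the ITU-R BT.601 luma weights, the same weights OpenCV uses for `cv2.COLOR_BGR2GRAY`. In OpenCV, comparable building blocks are `cv2.cvtColor`, `cv2.accumulateWeighted` for a running average, and `cv2.createBackgroundSubtractorMOG2()` for an off-the-shelf adaptive model.

```python
def to_grey(rgb_image):
    """Convert a 2-D list of (r, g, b) pixels to greyscale with BT.601 weights."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for r, g, b in row]
            for row in rgb_image]


class RunningAverageBackground:
    """Running-average background model: a simple stand-in, NOT the paper's IABMM.

    The background is an exponential moving average of past greyscale frames;
    pixels differing from it by more than `threshold` are marked foreground.
    """

    def __init__(self, first_grey, alpha=0.05, threshold=25):
        self.bg = [[float(p) for p in row] for row in first_grey]
        self.alpha = alpha          # learning rate (assumed value)
        self.threshold = threshold  # grey-level difference for foreground (assumed)

    def apply(self, grey):
        """Return a binary mask (255 = moving/foreground, 0 = background)."""
        mask = []
        for y, row in enumerate(grey):
            mask_row = []
            for x, p in enumerate(row):
                is_fg = abs(p - self.bg[y][x]) > self.threshold
                mask_row.append(255 if is_fg else 0)
                # blend the new frame in slowly so gradual lighting changes
                # are absorbed into the background
                self.bg[y][x] += self.alpha * (p - self.bg[y][x])
            mask.append(mask_row)
        return mask
```

This sketch updates every pixel, including foreground ones, so an object that stops moving gradually fades into the background; updating only background pixels is a common refinement.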
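The Noise Elimination stage can be illustrated on a small binary mask. This is a plain-Python sketch, not the paper's implementation: it assumes a 3×3 square kernel anchored at its centre and, at the image border, uses only the neighbours that lie inside the image. With OpenCV the same operations would be `cv2.medianBlur`, `cv2.dilate`, `cv2.erode` and `cv2.morphologyEx(mask, cv2.MORPH_CLOSE, kernel)`; OpenCV's median filter replicates border pixels, so results near the edges can differ.

```python
def _neighbourhood(img, y, x, r=1):
    """Values in the (2r+1)x(2r+1) window centred on (y, x), clipped at borders."""
    h, w = len(img), len(img[0])
    return [img[j][i]
            for j in range(max(0, y - r), min(h, y + r + 1))
            for i in range(max(0, x - r), min(w, x + r + 1))]


def _apply(img, fn):
    """Replace each pixel (the kernel anchor) with fn(its neighbourhood)."""
    return [[fn(_neighbourhood(img, y, x)) for x in range(len(img[0]))]
            for y in range(len(img))]


def median_filter(img):
    # middle element of the sorted window (upper median for even-sized
    # clipped border windows); removes isolated salt-and-pepper pixels
    return _apply(img, lambda n: sorted(n)[len(n) // 2])


def dilate(img):
    return _apply(img, max)  # anchor pixel <- maximum under the kernel


def erode(img):
    return _apply(img, min)  # anchor pixel <- minimum under the kernel


def close(img):
    return erode(dilate(img))  # closing = dilation followed by erosion
```

In use, a single isolated white pixel disappears under `median_filter`, while `close` bridges a one-pixel gap in a white line without thickening it.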
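The blob labelling and largest-blob selection in the Object Tracking stage can be sketched as follows. This sketch does not implement LTCLA's contour tracing; it swaps in a breadth-first flood fill, which finds the same 8-connected components but not their contours. The function names are hypothetical. In OpenCV, `cv2.connectedComponentsWithStats` (which reports component areas) or `cv2.findContours` with `cv2.contourArea` would serve a similar purpose.

```python
from collections import deque


def label_components(mask):
    """Label 8-connected non-zero regions of a 2-D list with BFS flood fill.

    Returns (labels, sizes): labels uses 0 for background and 1..n for
    components; sizes maps each component id to its pixel count.
    """
    h, w = len(mask), len(mask[0])
    labels = [[0] * w for _ in range(h)]
    sizes = {}
    next_id = 0
    for y in range(h):
        for x in range(w):
            if mask[y][x] and not labels[y][x]:
                next_id += 1
                labels[y][x] = next_id
                queue = deque([(y, x)])
                count = 0
                while queue:
                    cy, cx = queue.popleft()
                    count += 1
                    # visit all eight neighbours (8-connectivity)
                    for dy in (-1, 0, 1):
                        for dx in (-1, 0, 1):
                            ny, nx = cy + dy, cx + dx
                            if (0 <= ny < h and 0 <= nx < w
                                    and mask[ny][nx] and not labels[ny][nx]):
                                labels[ny][nx] = next_id
                                queue.append((ny, nx))
                sizes[next_id] = count
    return labels, sizes


def largest_blob(mask):
    """(y, x) pixels of the largest blob, or an empty list if there is none."""
    labels, sizes = label_components(mask)
    if not sizes:
        return []
    best = max(sizes, key=sizes.get)
    return [(y, x) for y, row in enumerate(labels)
            for x, lab in enumerate(row) if lab == best]
```

Using 8-connectivity means diagonally touching pixels belong to the same blob, which matches the tracer's eight-neighbour search described in the text.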
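The Behaviour Analysis stage reduces to a few arithmetic steps once the blob's pixels are known. In this sketch (the function names are hypothetical) the area is the pixel count, as the text describes, the centroid is the mean pixel position, and the ROI is the tightest bounding box. Speed is assumed to be the centroid displacement divided by a known frame interval `dt`, for example 1/fps; the paper does not say how speed is computed. OpenCV offers `cv2.moments`, `cv2.boundingRect` and `cv2.contourArea` for related quantities on contours.

```python
import math


def blob_features(pixels):
    """Area, centroid and bounding box of a blob given as (y, x) pixel coordinates."""
    area = len(pixels)  # area approximated by the number of pixels
    ys = [y for y, _ in pixels]
    xs = [x for _, x in pixels]
    centroid = (sum(xs) / area, sum(ys) / area)  # (x, y)
    x0, y0 = min(xs), min(ys)
    # ROI: bounding box (x, y, width, height) spanning the blob's full extent
    bbox = (x0, y0, max(xs) - x0 + 1, max(ys) - y0 + 1)
    return area, centroid, bbox


def speed(prev_centroid, centroid, dt):
    """Centroid displacement per unit time (pixels per second if dt is in seconds)."""
    dx = centroid[0] - prev_centroid[0]
    dy = centroid[1] - prev_centroid[1]
    return math.hypot(dx, dy) / dt
```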

After the ROI has been found, the colour filter analyses both the ROI and the complete colour space of the image.
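In its simplest form, the colour filter keeps the pixels whose hue, saturation and value all lie between the six bounds chosen with the sliders in the HSV value detection step, giving a white object on a black background. The plain-Python function below mirrors what `cv2.inRange(hsv, lower, upper)` computes; the function name and the example bounds are hypothetical. In a GUI like the one described, the bounds would typically come from sliders created with `cv2.createTrackbar` and read back with `cv2.getTrackbarPos`.

```python
def in_range(hsv_image, lower, upper):
    """Plain-Python analogue of cv2.inRange for an HSV image.

    hsv_image: 2-D list of (h, s, v) tuples.
    lower, upper: (h_min, s_min, v_min) and (h_max, s_max, v_max), inclusive.
    Returns 255 (white) where every channel is inside its bounds, else 0 (black).
    """
    return [[255 if all(lo <= c <= hi for c, lo, hi in zip(px, lower, upper)) else 0
             for px in row]
            for row in hsv_image]


frame = [[(60, 200, 200), (10, 200, 200)],
         [(62, 180, 90), (60, 20, 200)]]
mask = in_range(frame, lower=(50, 100, 100), upper=(70, 255, 255))
print(mask)  # [[255, 0], [0, 0]]
```

Only the first pixel passes; the others fail on hue, value and saturation respectively. Because hue wraps around, a red object with hues near both 0 and 179 usually needs two ranges whose masks are combined.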

HSV is chosen over RGB because it copes better with shadows, shading and highlights under a variety of lighting conditions. As a result, the filter needs fewer segments than it would in RGB [9, 10]. Furthermore, the RGB colour space tends to merge neighbouring objects of different colours and produce blurred results, whereas the HSV colour space separates intensity from colour information, so it tends to distinguish neighbouring objects of different colours by sharpening their boundaries and retaining colour detail for each pixel [11]. The proposed colour filter was designed to cover the whole HSV colour range. The user can adjust the number of segments of the HSV space to suit hardware constraints such as camera resolution and the processing speed required by the algorithm.
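The text does not specify how the HSV space is segmented. One possible reading, sketched here purely as an illustration, divides OpenCV's 0 to 179 hue axis into a user-chosen number of equal segments, builds a histogram of the hues found in the ROI, and uses the most populated segment as the filter's hue range. The function names and this segmentation scheme are assumptions, not the authors' method; more segments give narrower ranges at the cost of more bins to evaluate.

```python
def hue_segment(h, n_segments, h_max=180):
    """Index of the equal-width segment containing integer hue h (0 <= h < h_max)."""
    return h * n_segments // h_max


def dominant_hue_range(roi_hues, n_segments, h_max=180):
    """Inclusive (low, high) hue bounds of the most populated segment in the ROI."""
    counts = [0] * n_segments
    for h in roi_hues:
        counts[hue_segment(h, n_segments, h_max)] += 1
    best = max(range(n_segments), key=counts.__getitem__)
    # integer ceiling division gives the first hue that maps to each segment,
    # so the bounds agree exactly with hue_segment even for uneven splits
    low = (best * h_max + n_segments - 1) // n_segments
    high = ((best + 1) * h_max + n_segments - 1) // n_segments - 1
    return low, high
```

For ROI hues `[58, 60, 61, 62, 10, 120]`, six segments give the range 60 to 89, while twelve segments narrow it to 60 to 74. This simple scheme ignores the wrap-around of red hues near 0 and 179.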

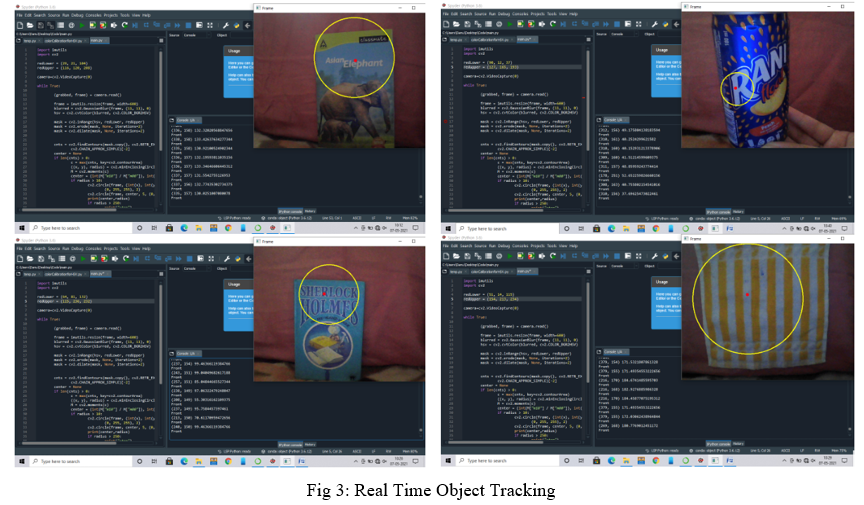

B. Tracking the Object

The second model uses the HSV values obtained in the first stage to track the object. For this, we use the OpenCV library.
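The tracking step can be sketched as a loop that applies the stored HSV bounds to every frame and reports a bounding box for the matching pixels. This plain-Python sketch is a simplification: it boxes all matching pixels rather than only the largest blob, and the function name `track` and the `min_pixels` parameter are hypothetical. A live OpenCV version would read frames with `cv2.VideoCapture`, convert them with `cv2.cvtColor`, threshold them with `cv2.inRange`, find blobs with `cv2.findContours`, and draw the result with `cv2.rectangle` or, to encircle the object, `cv2.minEnclosingCircle` and `cv2.circle`.

```python
def track(frames_hsv, lower, upper, min_pixels=1):
    """Follow an object through HSV frames using fixed (stored) HSV bounds.

    Returns one entry per frame: the bounding box (x, y, width, height) of all
    pixels inside [lower, upper], or None if fewer than min_pixels matched.
    """
    boxes = []
    for frame in frames_hsv:
        xs, ys = [], []
        for y, row in enumerate(frame):
            for x, px in enumerate(row):
                # same per-pixel test as cv2.inRange: every channel within bounds
                if all(lo <= c <= hi for c, lo, hi in zip(px, lower, upper)):
                    xs.append(x)
                    ys.append(y)
        if len(xs) < max(1, min_pixels):
            boxes.append(None)  # object lost in this frame
            continue
        x0, y0 = min(xs), min(ys)
        boxes.append((x0, y0, max(xs) - x0 + 1, max(ys) - y0 + 1))
    return boxes
```

Returning `None` for frames with no match lets the caller distinguish a lost object from one that has simply stopped moving.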

VI. RESULTS AND OBSERVATIONS

The objects are tracked using the OpenCV library. The object to be tracked is placed in front of the camera, and the model then encircles the tracked object. The model's accuracy was determined to be 90%.
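The paper does not state how the 90% accuracy was measured. A common convention, assumed here purely for illustration, counts a frame as correctly tracked when the intersection over union (IoU) between the predicted box and a hand-labelled ground-truth box is at least 0.5, and reports the fraction of such frames. The function names and the 0.5 threshold are assumptions.

```python
def iou(a, b):
    """Intersection over union of two (x, y, width, height) boxes."""
    ax2, ay2 = a[0] + a[2], a[1] + a[3]
    bx2, by2 = b[0] + b[2], b[1] + b[3]
    iw = max(0, min(ax2, bx2) - max(a[0], b[0]))
    ih = max(0, min(ay2, by2) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union else 0.0


def tracking_accuracy(predicted, ground_truth, threshold=0.5):
    """Fraction of frames whose predicted box matches ground truth (IoU >= threshold).

    A predicted box of None (object lost) counts as a failure.
    """
    hits = sum(1 for p, g in zip(predicted, ground_truth)
               if p is not None and iou(p, g) >= threshold)
    return hits / len(ground_truth)
```

Under this convention, a tracker that matches the ground truth in 9 of 10 frames and loses the object in the tenth scores 0.9.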

VII. FUTURE WORK

Although the approach tracks a single object accurately, future work could extend it to monitor multiple objects in a single frame.

Conclusion

In this study, the HSV values of an object were determined and then used to track the object. Tracking an object by its HSV values was found to be highly precise and to fail rarely. This approach may be applied in defence as well as in other sectors.

References

[1] Aggarwal, A., Biswas, S., Singh, S., Sural, S. & Majumdar, A.K., 2006. Object Tracking Using Background Subtraction and Motion Estimation in MPEG Videos, in: 7th Asian Conference on Computer Vision. Springer-Verlag Berlin Heidelberg, pp. 121–130. doi:10.1007/11612704_13

[2] Aldhaheri, A.R. & Edirisinghe, E.A., 2014. Detection and Classification of a Moving Object in a Video Stream, in: Proc. of the Intl. Conf. on Advances in Computing and Information Technology. Institute of Research Engineers and Doctors, Saudi Arabia, pp. 105–111. doi:10.3850/978-981-07-8859-9_23

[3] Ali, S.S. & Zafar, M.F., 2009. A robust adaptive method for detection and tracking of moving objects, in: 2009 International Conference on Emerging Technologies. IEEE, pp. 262–266. doi:10.1109/ICET.2009.5353164

[4] Amandeep & Goyal, M., 2015. Review: Moving Object Detection Techniques. Int. J. Comput. Sci. Mob. Comput. 4, 345–349.

[5] Athanesious, J. & Suresh, P., 2012. Systematic Survey on Object Tracking Methods in Video. Int. J. Adv. Res. Comput. Eng. Technol. 1, 242–247.

[6] Avidan, S., 2004. Support vector tracking. IEEE Trans. Pattern Anal. Mach. Intell. 26, 1064–1072. doi:10.1109/TPAMI.2004.53

[7] Badrinarayanan, V., Perez, P., Le Clerc, F. & Oisel, L., 2007. Probabilistic Color and Adaptive Multi-Feature Tracking with Dynamically Switched Priority Between Cues, in: 2007 IEEE 11th International Conference on Computer Vision. IEEE, pp. 1–8. doi:10.1109/ICCV.2007.4408955

[8] Bagherpour, P., Cheraghi, S.A. & Bin Mohd Mokji, M., 2012. Upper body tracking using KLT and Kalman filter. Procedia Comput. Sci. 13, 185–191. doi:10.1016/j.procs.2012.09.127

[9] Balasubramanian, A., Kamate, S. & Yilmazer, N., 2014. Utilization of robust video processing techniques to aid efficient object detection and tracking. Procedia Comput. Sci. 36, 579–586. doi:10.1016/j.procs.2014.09.057

[10] Ben Ayed, A., Ben Halima, M. & Alimi, A.M., 2015. MapReduce-based text detection in big data natural scene videos. Procedia Comput. Sci. 53, 216–223. doi:10.1016/j.procs.2015.07.297

[11] Blackman, S.S., 2004. Multiple hypotheses tracking for multiple target tracking. IEEE Aerosp. Electron. Syst. Mag. 19, 5–18. doi:10.1109/MAES.2004.1263228

[12] Chakravarthy, S., Aved, A., Shirvani, S., Annappa, M. & Blasch, E., 2015. Adapting Stream Processing Framework for Video Analysis. Procedia Comput. Sci. 51, 2648–2657. doi:10.1016/j.procs.2015.05.372

[13] Chandrajit, M., Girisha, R. & Vasudev, T., 2016. Multiple Objects Tracking in Surveillance Video Using Color and Hu Moments. Signal Image Process. An Int. J. 7, 15–27. doi:10.5121/sipij.2016.7302

[14] Chate, M., Amudha, S. & Gohokar, V., 2012. Object Detection and tracking in Video Sequences. ACEEE Int. J. Signal Image Process. 3.

Copyright

Copyright © 2022 Gowher Shafi, Dr. Pratik Garg. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET39238

Publish Date : 2021-12-02

ISSN : 2321-9653

Publisher Name : IJRASET

