IJRASET Journal for Research in Applied Science and Engineering Technology


A Survey on Sign Language Translation Systems

Authors: Prof. R. B. Joshi, Shraddha Desale, Himani Gaikwad, Shamali Gunje, Aditi Londhe

DOI Link: https://doi.org/10.22214/ijraset.2022.41295


Abstract

Sign language is a way of communicating using hand gestures, movements and facial expressions instead of spoken words. It is the medium of communication used by people who are deaf or hard of hearing, both within their own community and with hearing people. To bridge the communication gap between people with hearing and speech disabilities and people who do not know sign language, a large body of research using machine learning algorithms has been carried out, and sign language translators emerged from this work. Sign language translators are generally used to interpret the signs and gestures of deaf and hard-of-hearing people and convert them into text.


I. INTRODUCTION

Sign language is a visual language that uses body movements and facial expressions to convey meaning. Deaf people rely on it to connect with their social environment. It is based on visual signals produced by parts of the body such as the hands, eyes and face, and its gestures or symbols are organised linguistically. It is a rich combination of hand gestures, body language, facial expressions and anything else that communicates thoughts or ideas without the use of speech. Just as there are many spoken languages across the world, each differing from the others in some respect, there are many sign languages with different hand gestures and visual representations, for example Pakistani Sign Language (PSL), American Sign Language (ASL), British Sign Language (BSL), French Sign Language (LSF) and Indian Sign Language (ISL). A sign language translator framework helps deaf and speech-impaired people communicate with hearing people who do not know sign language, removing the need for a human interpreter to act as an intermediary. Such systems typically provide two conversion modules: Text-to-Sign conversion and Sign-to-Text conversion.

The aim of this survey is to give an overview and a comparative study of sign language translation systems that have already been researched. The paper is organised as follows. Section I introduces sign language. Section II reviews previous work on sign language generation and recognition (literature survey). Section III describes the major techniques and algorithms used by existing systems. Section IV summarises the observations in a comparative table, and the final section concludes the survey.

II. LITERATURE SURVEY

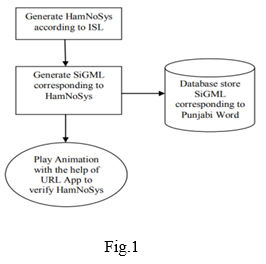

A. Indian Sign Language Animation Generation System [1]

As the name suggests, this system is developed for Indian Sign Language (ISL). It takes an English word as input and generates the corresponding animation. To do so, it first generates the HamNoSys notation for the word's ISL sign and then generates SiGML from that HamNoSys. The authors checked the HamNoSys using a tool named JA SIGML URL APP and tested the accuracy of the animated signs against the Indian Sign Language Dictionary. The system can generate HamNoSys for all basic words used in daily routine, but it covers only one-handed and two-handed sign symbols.

B. American Sign Language Interpreter [2]

This hardware prototype is a glove that can be used in a sign language teaching programme and for practising sign language. It handles the 26 letters of the English alphabet, which it can translate into American Sign Language (ASL). The glove works in two modes: teach mode and learn mode. In teach mode, ASL hand gestures are stored in a database; in learn mode, the user learns sign language by making hand gestures that are matched against the stored database. The authors suggest that the prototype has many applications in public places.
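The teach/learn cycle can be illustrated in software as storing one reference sensor reading per letter and matching later readings against it. This is a minimal sketch, not the authors' firmware: the `GestureStore` class, the five made-up flex-sensor values per reading and the distance tolerance are all hypothetical.

```python
from __future__ import annotations

import math


class GestureStore:
    """Hypothetical in-memory 'database' of reference glove readings, one per letter."""

    def __init__(self, tolerance: float = 10.0) -> None:
        self.references: dict[str, list[float]] = {}
        self.tolerance = tolerance  # largest Euclidean distance accepted as a match

    def teach(self, letter: str, reading: list[float]) -> None:
        """Teach mode: store the sensor reading for a letter."""
        self.references[letter.upper()] = list(reading)

    def learn(self, target: str, reading: list[float]) -> bool:
        """Learn mode: check the learner's reading against the stored reference."""
        return math.dist(self.references[target.upper()], reading) <= self.tolerance

    def recognise(self, reading: list[float]) -> str | None:
        """Return the closest stored letter, or None if none is within tolerance."""
        if not self.references:
            return None
        best = min(self.references, key=lambda k: math.dist(self.references[k], reading))
        return best if math.dist(self.references[best], reading) <= self.tolerance else None


store = GestureStore(tolerance=10.0)
store.teach("A", [90, 85, 88, 80, 20])  # five made-up flex-sensor values
store.teach("B", [10, 12, 8, 11, 70])
print(store.recognise([88, 84, 90, 79, 22]))  # A
print(store.learn("B", [60, 60, 60, 60, 60]))  # False
```

A nearest-neighbour match with a tolerance is the simplest way to express "match the existing database"; a real glove would need calibration per user and per hand size.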

C. Hand Gesture Recognition for Indian Sign Language [3]

This system takes input through a built-in web camera. It uses the CAMShift method for hand tracking and a Genetic Algorithm for gesture recognition, and the final result is converted into text and voice. The proposed system consists of four modules: Hand Tracking, Segmentation, Feature Extraction and Gesture Recognition.

- Hand Tracking: The hand is tracked across frames using the CAMShift algorithm.

- Segmentation: The HSV color model is used to segment the hands from the background.

- Feature Extraction: Several general-purpose features are extracted, and an appropriate classifier infers the relationship between features and classes.

- Gesture Recognition: The features extracted from the input character are searched in the database, and the most similar stored features are taken as the result. A Genetic Algorithm is then used for hand gesture analysis.
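The HSV segmentation step can be illustrated with Python's standard `colorsys` module. This is a minimal sketch, assuming the frame is a small grid of 8-bit RGB tuples; the hue, saturation and value thresholds are illustrative placeholders, not the values used in [3], and a real system would tune them for its lighting and skin tones.

```python
import colorsys

# Illustrative skin-tone thresholds in HSV space (hue in [0, 1)); not taken from [3].
HUE_MAX = 50 / 360          # red-to-orange hues
SAT_RANGE = (0.15, 0.70)
VAL_MIN = 0.35


def is_skin(rgb):
    """Return True if an (r, g, b) pixel with 0-255 channels falls in the skin range."""
    r, g, b = (c / 255 for c in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    return h <= HUE_MAX and SAT_RANGE[0] <= s <= SAT_RANGE[1] and v >= VAL_MIN


def segment_hand(frame):
    """Return a binary mask (1 = hand, 0 = background) for a 2-D list of RGB pixels."""
    return [[1 if is_skin(px) else 0 for px in row] for row in frame]


frame = [
    [(30, 30, 200), (224, 172, 140)],
    [(200, 150, 120), (20, 200, 20)],
]
print(segment_hand(frame))  # [[0, 1], [1, 0]]
```

Working in HSV rather than RGB lets the threshold act mostly on hue, which varies less with illumination than raw RGB values do.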

D. Sign Language Recognition System for Deaf and Dumb People [4]

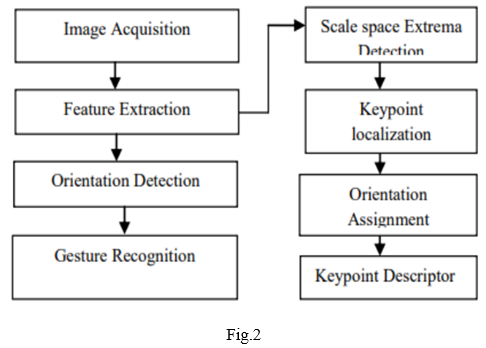

The proposed algorithm consists of four major steps: Image Acquisition, Feature Extraction, Orientation Detection and Gesture Recognition.

- Image Acquisition: Captured images are stored in a directory, and each newly captured image is compared, using the SIFT algorithm, with the images stored for each letter in the database. This comparison yields the gesture and the translated text.

- Feature Extraction: For image feature generation, the SIFT approach takes an image and transforms it into a large collection of local feature vectors.

- Orientation Detection: The system accepts hand input in any orientation. Because the SIFT algorithm includes an orientation assignment step, the gesture can still be detected through feature extraction.

- Gesture Recognition: A one-dimensional array of 26 characters corresponding to the letters of the alphabet is used, indexed by the image number stored in the database. The number of the matching image is looked up in this array and the corresponding letter is displayed.
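The comparison-and-lookup step can be sketched as follows. SIFT itself is not reimplemented here: the sketch assumes keypoint descriptors have already been extracted (for example with OpenCV's SIFT implementation) and shows only how a new image's descriptors might be matched against each stored image using Lowe's ratio test, with the winning image number indexing a 26-character array. The toy 3-dimensional descriptors and the `match_letter` helper are hypothetical; real SIFT descriptors have 128 dimensions.

```python
import math
import string

# One-dimensional array of 26 characters; index i corresponds to stored image number i.
LETTERS = list(string.ascii_uppercase)


def good_matches(query, reference, ratio=0.75):
    """Count query descriptors whose nearest reference descriptor passes Lowe's ratio test."""
    count = 0
    for q in query:
        dists = sorted(math.dist(q, r) for r in reference)
        if len(dists) >= 2 and dists[0] < ratio * dists[1]:
            count += 1
    return count


def match_letter(query, database):
    """database maps stored image number -> list of descriptors; returns the best letter."""
    best_image = max(database, key=lambda n: good_matches(query, database[n]))
    return LETTERS[best_image]


database = {0: [(0, 0, 0), (10, 10, 10)], 1: [(5, 0, 0), (0, 5, 0)]}
print(match_letter([(0.5, 0, 0), (9, 10, 10)], database))  # A
```

The ratio test discards ambiguous matches whose best and second-best distances are similar, which is why image 1 scores zero in the example.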

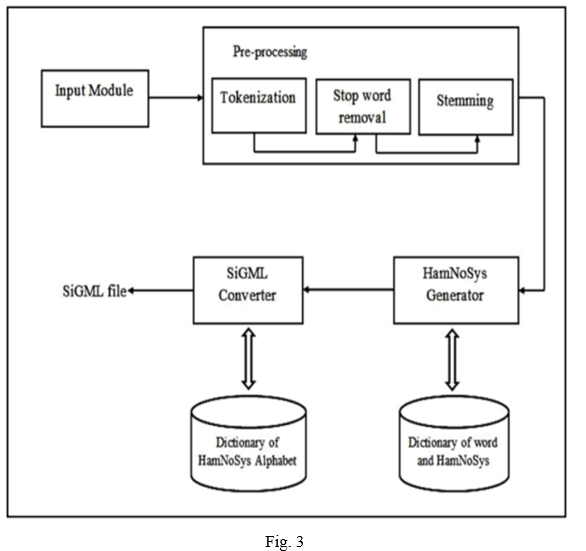

E. English to SiGML Conversion for Sign Language Generation [5]

The proposed system includes the following modules: data collection, input module, pre-processing module, HamNoSys conversion module and, finally, SiGML file conversion module.

- Data Collection: Each data item is stored as a key-value pair, where the keys are ordinary English root words and the values are the HamNoSys strings for those words. A second database contains all the HamNoSys symbols and their meanings. This makes the text-matching process easier.

- Input Module: The user enters the text to be translated into sign language as a normal English sentence.

- Pre-processing Module: It consists of three steps:

a. Tokenization: dividing the given sentence into pieces called tokens.

b. Stop Word Removal: removing very frequent words such as the, a, of, for, in and an, which do not carry much meaning.

c. Stemming: reducing derived words to their stem, root or base form.

- HamNoSys Conversion Module: After pre-processing, the output is passed to the HamNoSys conversion module, where each word is compared with the words stored in the database. If a match is found, the corresponding HamNoSys notation is output; otherwise, the word is not translated.

- SiGML Conversion Module: To convert HamNoSys into a SiGML file, the system keeps a repository of HamNoSys symbols and their corresponding meanings.
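The pre-processing and HamNoSys lookup modules can be sketched as a short pipeline. This is a minimal sketch under stated assumptions: the stop-word list is a small illustrative subset, the suffix-stripping `stem` function is a deliberately crude stand-in for a real stemmer such as Porter's, and the dictionary values are placeholder sequences of SiGML-style symbol names, not real ISL signs.

```python
import re

STOP_WORDS = {"the", "a", "an", "of", "for", "in", "is", "to"}  # illustrative subset

# Hypothetical root word -> HamNoSys dictionary; the symbol sequences are placeholders.
HAMNOSYS_DICT = {
    "go": ["hamflathand", "hamextfingero", "hampalmd", "hammoveo"],
    "school": ["hamfist", "hamextfingeru", "hampalml", "hamchest"],
}


def tokenize(sentence):
    """Split a sentence into lowercase word tokens."""
    return re.findall(r"[a-z]+", sentence.lower())


def remove_stop_words(tokens):
    return [t for t in tokens if t not in STOP_WORDS]


def stem(word):
    """Crude suffix stripping; a stand-in for a proper stemmer."""
    for suffix in ("ing", "es", "ed", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 2:
            return word[: -len(suffix)]
    return word


def to_hamnosys(sentence):
    """Return (root word, HamNoSys) pairs for words in the dictionary; others are skipped."""
    roots = [stem(t) for t in remove_stop_words(tokenize(sentence))]
    return [(w, HAMNOSYS_DICT[w]) for w in roots if w in HAMNOSYS_DICT]


for word, symbols in to_hamnosys("Going to the schools"):
    print(word, symbols)
```

Stemming before lookup keeps the dictionary small, since only root words need entries; words with no entry are silently dropped, matching the behaviour described above.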

F. Indian Sign Language to Speech [6]

This system converts gestures made in sign language into speech using image processing. It captures images from a camera, processes them and sends the corresponding speech output to a speaker.

The proposed system is divided into the following stages:

- Image Acquisition: Five frames per trigger of a single image were initially captured using a high-resolution digital camera, which was connected to a PC running MATLAB through a USB port. The acquired image was then processed.

- Segmentation: The acquired image is in RGB format, so color transformations are used to convert it into a binary image.

- Feature Extraction: two methods are used:

a. Statistical method (based on parameters)

b. Centroid algorithm

Gesture recognition is performed using these algorithms, and the corresponding text is obtained.

- Speech Output: The text is then converted into speech using text-to-speech (TTS) algorithms in MATLAB, and the voice is produced as output.
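The segmentation and centroid steps can be illustrated in a few lines. This sketch assumes a greyscale image stored as a 2-D list and a fixed, illustrative threshold of 128 (the original work used MATLAB color transformations whose parameters are not given here). It binarises the image and computes the centroid of the foreground pixels, which could then serve as one feature for recognition.

```python
def binarize(gray, threshold=128):
    """Map a 2-D list of 0-255 intensities to 0/1, with 1 for pixels at or above the threshold."""
    return [[1 if p >= threshold else 0 for p in row] for row in gray]


def centroid(binary):
    """Return the (row, col) centroid of foreground pixels, or None if there are none."""
    total = row_sum = col_sum = 0
    for r, row in enumerate(binary):
        for c, p in enumerate(row):
            if p:
                total += 1
                row_sum += r
                col_sum += c
    if total == 0:
        return None
    return (row_sum / total, col_sum / total)


gray = [
    [0, 0, 0, 0],
    [0, 200, 220, 0],
    [0, 210, 255, 0],
    [0, 0, 0, 0],
]
print(centroid(binarize(gray)))  # (1.5, 1.5)
```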

G. Domain Bounded English to Indian Sign Language Translation Model [7]

The proposed model translates English text into Indian Sign Language. It accepts input text and translates it by having an avatar display the sign for each word, using a direct word-to-word mapping. As the name suggests, the system is domain-bounded: it is designed for enquiries at railway reservation counters.

The system consists of following modules:

- Input Module: Takes the English text as input for translation.

- Tokenizer Module: Splits the input text into words.

- Resource: It contains the ISL signs for the English words.

- Translator: It looks up the sign for each entered word in the resource.

- Accumulator: It filters the words to be translated, ignoring words that have no corresponding sign in the resource, and accumulates the remaining words in the order in which they were entered in the input module.

- Display Module: Finally, a 3D character (avatar) displays the signs for the entered text.
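The module chain maps naturally onto a few small functions. This is a minimal sketch assuming the resource is a dictionary from English words to sign animation identifiers; the railway-domain entries and the `ISL_*` identifiers are hypothetical, and the display module is replaced by returning the ordered list of signs an avatar would play.

```python
# Hypothetical railway-enquiry resource: English word -> ISL sign animation identifier.
RESOURCE = {
    "train": "ISL_TRAIN",
    "ticket": "ISL_TICKET",
    "delhi": "ISL_DELHI",
    "when": "ISL_WHEN",
}


def tokenizer_module(text):
    """Split the input text into lowercase words, dropping simple punctuation."""
    return [w.strip(".,?!").lower() for w in text.split() if w.strip(".,?!")]


def translator_module(words):
    """Look up each word in the resource; None marks a word with no sign."""
    return [(w, RESOURCE.get(w)) for w in words]


def accumulator_module(lookups):
    """Ignore words without a sign and keep the rest in input order."""
    return [sign for _, sign in lookups if sign is not None]


def translate(text):
    return accumulator_module(translator_module(tokenizer_module(text)))


print(translate("When is the train to Delhi?"))  # ['ISL_WHEN', 'ISL_TRAIN', 'ISL_DELHI']
```

Because the mapping is word-to-word, words such as "is" and "to" are dropped rather than rearranged; ISL grammar is not modelled.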

H. A New Instrumented Approach for Translating American Sign Language into Sound and Text [8]

The system captures isolated American Sign Language gestures and translates them into spoken and written words. Its instrumented part combines two main elements: an AcceleGlove and a two-link arm skeleton. The sensors and wires of the AcceleGlove were mounted on a leather glove to improve usability without losing portability, and the glove detects hand shapes accurately across different hand sizes. To mark the beginning and end of a gesture, the capturing system includes two push buttons that the user can press. ASL gestures are broken down into unique sequences of phonemes, namely poses and movements, which are recognized by software modules. These modules were trained and tested independently on volunteers with different hand sizes and signing abilities.
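The idea of recognising a gesture as a unique sequence of pose and movement phonemes can be illustrated with a lexicon lookup. This is a hypothetical sketch, not the software modules of [8]: it assumes that separate (unshown) classifiers have already labelled the pose and movement phonemes, that the push buttons appear as `("button", "start")` and `("button", "stop")` events, and the phoneme labels and lexicon entries are invented for illustration.

```python
# Hypothetical lexicon: each word is a unique sequence of (kind, label) phonemes.
LEXICON = {
    (("pose", "flat_B"), ("move", "forward"), ("pose", "flat_B")): "THANK-YOU",
    (("pose", "fist_S"), ("move", "nod"), ("pose", "fist_S")): "YES",
}


def segment_gesture(events):
    """Return the phonemes recorded between the start and stop button presses."""
    recording, phonemes = False, []
    for kind, label in events:
        if kind == "button":
            if label == "start":
                recording, phonemes = True, []
            elif label == "stop" and recording:
                return tuple(phonemes)
        elif recording:
            phonemes.append((kind, label))
    return ()  # no complete start/stop pair


def recognise(events):
    """Look up the captured phoneme sequence; return None if it is not in the lexicon."""
    return LEXICON.get(segment_gesture(events))


events = [("pose", "fist_S"), ("button", "start"), ("pose", "flat_B"),
          ("move", "forward"), ("pose", "flat_B"), ("button", "stop")]
print(recognise(events))  # THANK-YOU
```

Splitting recognition into a small phoneme inventory plus a lexicon means new words can be added as new sequences without retraining the phoneme recognisers.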

I. Sign Language Translation [9]

This system uses computer vision to take signs from users and convert them into text in real time. It is divided into four main modules: image capturing, pre-processing, classification and prediction.

- Segmentation is performed using image processing.

- Sign gestures are captured and processed using the OpenCV Python library.

- Each captured gesture is resized, converted to a greyscale image and noise-filtered to improve prediction accuracy.

- Classification and prediction are performed using a convolutional neural network (CNN).

The aim of the system is to enable communication between hearing people and people with hearing disabilities without requiring any specific background color, gloves or sensors. Some systems use datasets of '.jpg' images, but in this system the pixel values of each image are saved in a CSV file, which the authors report reduces the memory requirement of the system and gives high prediction accuracy.
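The pre-processing and CSV storage can be sketched with the standard library alone. This sketch assumes images arrive as 2-D lists of RGB tuples; it converts them to greyscale with the ITU-R BT.601 luma weights, resizes by nearest-neighbour sampling and writes one CSV row per image (label followed by pixel values). Noise filtering is omitted, and the 2×2 output size and helper names are illustrative assumptions, not the settings of [9].

```python
import csv
import io


def to_gray(rgb_image):
    """Convert a 2-D list of (r, g, b) pixels to 0-255 greyscale using BT.601 luma weights."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for r, g, b in row] for row in rgb_image]


def resize_nearest(image, out_h, out_w):
    """Resize a 2-D list to out_h x out_w by nearest-neighbour sampling."""
    in_h, in_w = len(image), len(image[0])
    return [[image[i * in_h // out_h][j * in_w // out_w] for j in range(out_w)]
            for i in range(out_h)]


def write_csv_rows(samples, stream, size=(2, 2)):
    """Write one CSV row per (label, rgb_image): the label, then the flattened pixels."""
    writer = csv.writer(stream)
    for label, rgb in samples:
        gray = resize_nearest(to_gray(rgb), *size)
        writer.writerow([label] + [p for row in gray for p in row])


buffer = io.StringIO()
white = [[(255, 255, 255)] * 4 for _ in range(4)]
write_csv_rows([("A", white)], buffer)
print(buffer.getvalue().strip())  # A,255,255,255,255
```

Each row is a fixed-length vector, so the whole dataset can be loaded as one table and reshaped into image tensors when training the CNN.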

J. Real Time Sign Language Recognition using PCA [10]

The system recognizes 26 Indian Sign Language gestures using MATLAB. It uses the Principal Component Analysis (PCA) algorithm for gesture recognition, and each recognized gesture is converted into text and voice. The sign recognition procedure includes the following steps:

- Data Acquisition: Runtime images for the test phase are captured using a web camera.

- Pre-processing and Segmentation

  - Pre-processing comprises image acquisition, segmentation and morphological filtering.

  - The hands are segmented to separate the object from the background.

  - The Otsu algorithm is used for segmentation.

- Feature Extraction

  - Feature extraction reduces the dimensionality of the data by encoding the relevant information in a compressed representation and removing less discriminative data.

- Sign Recognition

  - PCA is a dimensionality-reduction technique that extracts the desired number of principal components from multi-dimensional data; signs are recognized in this reduced space.

- Sign-to-text and voice conversion.
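The PCA-based recognition step can be sketched without any numerical library. This is a minimal illustration, assuming each training image has already been flattened into a feature vector: it centres the data, finds the leading principal components of the covariance matrix by power iteration with deflation, projects images onto them and classifies a query by its nearest training projection. The toy vectors, labels and the nearest-neighbour rule are assumptions; [10] used MATLAB, and production code would use an optimised eigen-solver.

```python
import math
import random


def mean_vector(rows):
    return [sum(col) / len(rows) for col in zip(*rows)]


def covariance(rows, mu):
    centred = [[x - m for x, m in zip(r, mu)] for r in rows]
    d, n = len(mu), len(rows)
    return [[sum(c[i] * c[j] for c in centred) / (n - 1) for j in range(d)] for i in range(d)]


def top_components(cov, k, iters=200, seed=0):
    """Return k leading eigenvectors of a symmetric matrix via power iteration and deflation."""
    rng = random.Random(seed)
    d = len(cov)
    mat = [row[:] for row in cov]
    comps = []
    for _ in range(k):
        v = [rng.random() + 0.1 for _ in range(d)]
        for _ in range(iters):
            w = [sum(mat[i][j] * v[j] for j in range(d)) for i in range(d)]
            norm = math.sqrt(sum(x * x for x in w)) or 1.0
            v = [x / norm for x in w]
        lam = sum(v[i] * sum(mat[i][j] * v[j] for j in range(d)) for i in range(d))
        comps.append(v)
        # Deflate: subtract the found component so the next pass finds the next one.
        mat = [[mat[i][j] - lam * v[i] * v[j] for j in range(d)] for i in range(d)]
    return comps


def project(x, mu, comps):
    return [sum((xi - mi) * ci for xi, mi, ci in zip(x, mu, c)) for c in comps]


def train(samples, k=1):
    """samples: list of (label, vector). Returns (mean, components, projected samples)."""
    vectors = [v for _, v in samples]
    mu = mean_vector(vectors)
    comps = top_components(covariance(vectors, mu), k)
    return mu, comps, [(label, project(v, mu, comps)) for label, v in samples]


def classify(model, x):
    """Return the label of the training sample nearest to x in PCA space."""
    mu, comps, projected = model
    p = project(x, mu, comps)
    return min(projected, key=lambda item: math.dist(item[1], p))[0]


samples = [("A", [1.0, 1.1, 0.0]), ("A", [0.9, 1.0, 0.1]),
           ("B", [5.0, 5.2, 0.0]), ("B", [5.1, 4.9, 0.2])]
model = train(samples, k=1)
print(classify(model, [1.0, 0.95, 0.05]))  # A
```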

III. MAJOR TECHNIQUES

A. HamNoSys

The Hamburg Notation System (HamNoSys) is a notation system used to transcribe signs. It is designed to describe signs from any sign language, so it can be used internationally. HamNoSys describes signs in terms of signing parameters: hand shape, hand location, hand orientation and hand movement.

- Hand Shapes: Hand shapes are grouped mainly into fist, flat hand, separated fingers and thumb combinations.

- Hand Orientation: HamNoSys describes the orientation of the hand for a given sign by combining two components: extended finger direction and palm orientation.

- Hand Location: Location specifications describe where the signer's hands are. They have two parts: the first determines the location of the hand, and the second determines its distance from the selected part of the body.

- Hand Movement: Movement types are distinguished as straight, curved, wavy, zigzag, circular and spiral.
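The four signing parameters can be captured in a small data structure. This is a hypothetical sketch for illustration only: real HamNoSys is written as a string of dedicated notation symbols, whereas here each parameter is a plain-text label, and the example sign is invented.

```python
from dataclasses import dataclass
from enum import Enum


class Movement(Enum):
    # The movement types distinguished by HamNoSys, as listed above.
    STRAIGHT = "straight"
    CURVED = "curved"
    WAVY = "wavy"
    ZIGZAG = "zigzag"
    CIRCULAR = "circular"
    SPIRAL = "spiral"


@dataclass(frozen=True)
class Orientation:
    extended_finger_direction: str  # e.g. "up", "out"
    palm_orientation: str           # e.g. "down", "left"


@dataclass(frozen=True)
class Location:
    body_part: str  # first part: where the hand is
    distance: str   # second part: distance from that body part, e.g. "touching", "near"


@dataclass(frozen=True)
class Sign:
    handshape: str  # e.g. "fist", "flat hand", "separated fingers"
    orientation: Orientation
    location: Location
    movement: Movement


example = Sign(
    handshape="flat hand",
    orientation=Orientation("up", "left"),
    location=Location("chest", "near"),
    movement=Movement("circular"),
)
print(example.movement.name)  # CIRCULAR
```

Using an enum for movement rejects values outside the listed types (for example, `Movement("diagonal")` raises `ValueError`).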

B. Camshift Algorithm

Continuously Adaptive Mean Shift (CAMShift) is an efficient, lightweight tracking algorithm built on mean shift. It proceeds as follows:

1. Choose the initial region of interest (search window) containing the hand to be tracked.

2. Compute the color histogram of that region as the object model.

3. Build a probability distribution for the frame by back-projecting the color histogram.

4. On the probability distribution image, find the centre of mass of the search window using the mean-shift method.

5. Centre the search window at the point found in step 4 and repeat step 4 until convergence; the window size is then adapted to the total probability (zeroth moment) inside it.

6. Process the next frame starting from the search window position and size found in step 5.
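The steps can be illustrated on a toy hue image. This is a simplified, dependency-free sketch of the idea rather than OpenCV's `cv2.CamShift`: it builds a hue histogram from the initial window (steps 1 and 2), back-projects it into a probability image (step 3), runs mean-shift iterations (steps 4 and 5) and then resizes the window using a side length of about 2·sqrt(M00). The bin count, that size rule and the toy frame are assumptions; the window is kept square and orientation estimation is omitted.

```python
import math


def round_half_up(x):
    return math.floor(x + 0.5)


def hue_histogram(hue, window, bins=8):
    """Normalised hue histogram (max bin = 1) of window (row, col, height, width); hue in 0-179."""
    r0, c0, h, w = window
    hist = [0] * bins
    for r in range(r0, r0 + h):
        for c in range(c0, c0 + w):
            hist[hue[r][c] * bins // 180] += 1
    peak = max(hist) or 1
    return [v / peak for v in hist]


def back_project(hue, hist, bins=8):
    """Replace each pixel's hue by its bin's histogram value (a probability-like map)."""
    return [[hist[v * bins // 180] for v in row] for row in hue]


def camshift_step(prob, window, max_iter=10):
    """Mean-shift the window to the centre of mass of prob, then resize it from M00."""
    rows, cols = len(prob), len(prob[0])
    r0, c0, h, w = window
    mass, cr, cc = 0.0, r0 + h // 2, c0 + w // 2
    for _ in range(max_iter):
        m00 = m10 = m01 = 0.0
        for r in range(max(r0, 0), min(r0 + h, rows)):
            for c in range(max(c0, 0), min(c0 + w, cols)):
                m00 += prob[r][c]
                m10 += c * prob[r][c]
                m01 += r * prob[r][c]
        if m00 == 0:
            break  # no target mass in the window: keep the last good estimate
        mass = m00
        cr, cc = round_half_up(m01 / m00), round_half_up(m10 / m00)  # centre of mass
        new_r0, new_c0 = cr - h // 2, cc - w // 2
        if (new_r0, new_c0) == (r0, c0):
            break  # converged
        r0, c0 = new_r0, new_c0
    if mass == 0:
        return window
    side = max(1, round_half_up(2 * math.sqrt(mass)))  # adapt window size
    return (cr - side // 2, cc - side // 2, side, side)


# 10x10 frame: background hue 100, a 3x3 "hand" of hue 10 at rows/cols 4-6.
hue = [[10 if 4 <= r <= 6 and 4 <= c <= 6 else 100 for c in range(10)] for r in range(10)]
prob = back_project(hue, hue_histogram(hue, (4, 4, 3, 3)))
print(camshift_step(prob, (2, 2, 3, 3)))  # (2, 2, 6, 6)
```

In practice OpenCV's `cv2.calcBackProject` and `cv2.CamShift` implement these steps far more efficiently on real frames.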

C. SIFT algorithm (Scale Invariant Feature Transform)

SIFT is a technique for detecting salient, stable feature points (keypoints) in an image. For each keypoint it also computes a descriptor, a set of features that characterise a small image region around the point. These features are invariant to rotation and scale.

The steps of the SIFT algorithm are:

- Determine the approximate location and scale of salient feature points (keypoints).

- Refine their location and scale.

- Determine one or more orientations for each keypoint.

- Determine a descriptor for each keypoint.
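The orientation step is what gives SIFT its rotation invariance, and its core idea fits in a few lines. This is a simplified sketch: it builds a 36-bin histogram of gradient directions around a keypoint, weighted by gradient magnitude, and returns the lower edge of the peak bin. Full SIFT also applies Gaussian weighting, works on the keypoint's scale-space level, interpolates the peak and can assign extra orientations to strong secondary peaks; those details are omitted, and the toy patch is invented.

```python
import math


def dominant_orientation(patch, bins=36):
    """Return the dominant gradient direction (degrees, lower bin edge) of a 2-D intensity patch."""
    hist = [0.0] * bins
    for r in range(1, len(patch) - 1):
        for c in range(1, len(patch[0]) - 1):
            dx = patch[r][c + 1] - patch[r][c - 1]  # central differences
            dy = patch[r + 1][c] - patch[r - 1][c]
            mag = math.hypot(dx, dy)
            if mag == 0:
                continue
            angle = math.degrees(math.atan2(dy, dx)) % 360
            hist[int(angle // (360 / bins)) % bins] += mag
    peak = max(range(bins), key=hist.__getitem__)
    return peak * (360 / bins)


diagonal = [[r + c for c in range(5)] for r in range(5)]  # intensity rises down and right
print(dominant_orientation(diagonal))  # 40.0 (the 40-50 degree bin)
```

Descriptors are later computed relative to this orientation, so rotating the image rotates the reference direction with it.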

D. Algorithm for English to SiGML

SiGML (Signing Gesture Markup Language) represents HamNoSys symbols in the form of XML tags.

Step 0: Configure a data dictionary that contains English words and their corresponding HamNoSys symbols.

Step 1: Configure a second dictionary that contains the meaning of each HamNoSys symbol.

Step 2: Read the input to be translated.

Step 3: Pre-process the input sentence.

Step 4: Capitalise the first letter of each word.

Step 5: Search the dictionary for the HamNoSys corresponding to each word and pass it to the SiGML conversion module.
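Steps 4 and 5 can be sketched with Python's `xml.etree.ElementTree`, emitting one `hns_sign` element per word. The element names follow the HamNoSys-based SiGML layout as we understand it (a `sigml` root, `hns_sign` elements with a `gloss` attribute, and a `hamnosys_manual` child holding one empty element per symbol); treat them as an assumption to check against the SiGML documentation. The dictionary entries are placeholders, not real signs.

```python
import xml.etree.ElementTree as ET

# Hypothetical English word -> HamNoSys symbol-name dictionary (Step 0); placeholder signs.
HAMNOSYS_DICT = {
    "Hello": ["hamflathand", "hamextfingeru", "hampalml", "hamforehead", "hammoveo"],
    "Friend": ["hamfinger2", "hamextfingero", "hampalmd", "hamchest"],
}


def to_sigml(words):
    """Build a SiGML document for the words found in the dictionary (Steps 4 and 5)."""
    root = ET.Element("sigml")
    for word in words:
        key = word.capitalize()  # Step 4: first letter capitalised
        symbols = HAMNOSYS_DICT.get(key)
        if symbols is None:
            continue  # no HamNoSys entry: the word is not translated
        sign = ET.SubElement(root, "hns_sign", gloss=key)
        ET.SubElement(sign, "hamnosys_nonmanual")
        manual = ET.SubElement(sign, "hamnosys_manual")
        for name in symbols:
            ET.SubElement(manual, name)
    return ET.tostring(root, encoding="unicode")


print(to_sigml(["hello", "unknown", "friend"]))
```

Generating the XML with a tree API rather than string concatenation guarantees well-formed output and correct attribute escaping.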

IV. OBSERVATIONS

| Sr No. | Paper Name | Input -> Output | Methodology (algorithms) |
| --- | --- | --- | --- |
| I. | Indian Sign Language Animation Generation System | English text -> Sign language animation | HamNoSys and SiGML |
| II. | American Sign Language Interpreter | English alphabet -> ASL | Microcontroller-based hand glove |
| III. | Hand Gesture Recognition for Indian Sign Language | Hand gestures -> Text and voice | CAMShift and Genetic Algorithm |
| IV. | Sign Language Recognition System for Deaf and Dumb People | Captured image -> Translated text (alphabet) | Scale Invariant Feature Transform (SIFT) |
| V. | English to SiGML Conversion for Sign Language Generation | English text -> SiGML | HamNoSys and SiGML |
| VI. | Indian Sign Language to Speech | Sign -> Speech | Image processing |
| VII. | Domain Bounded English to Indian Sign Language Translation Model | English text -> ISL | Translation using Tokenizer, Translator and Accumulator |
| VIII. | A New Instrumented Approach for Translating American Sign Language into Sound and Text | ASL -> Sound and text | AcceleGlove and two-link arm skeleton |
| IX. | Sign Language Translation | Sign -> Text | Real-time conversion using CNN |
| X. | Real Time Sign Language Recognition using PCA | Sign -> Text and voice | MATLAB and PCA |

Conclusion

In this survey we have reviewed ten research articles on sign language translation systems. The researchers used techniques such as HamNoSys, SiGML, CAMShift, SIFT, CNN and PCA. Overall, these studies are insightful because they show a range of different approaches to sign language translation.

References

[1] Sandeep Kaur, Maninder Singh; Indian Sign Language Animation Generation System; 2015 1st International Conference on Next Generation Computing Technologies (NGCT).

[2] Kunal Kadam, Rucha Ganu, Ankita Bhosekar, S. D. Joshi; American Sign Language Interpreter; 2012 IEEE Fourth International Conference on Technology for Education.

[3] Archana S. Ghotkar, Rucha Khatal, Sanjana Khupase, Surbhi Asati, Mithila Hadap; Hand Gesture Recognition for Indian Sign Language; 2012 International Conference on Computer Communication and Informatics (ICCCI-2012), Jan. 10-12, 2012, Coimbatore, India.

[4] Sakshi Goyal, Ishita Sharma, Shanu Sharma; Sign Language Recognition System for Deaf and Dumb People; IJERT, Volume 02, Issue 04 (April 2013).

[5] Megha Varghese, Sindhya K Nambiar; English to SiGML Conversion for Sign Language Generation; 2018 International Conference on Circuits and Systems in Digital Enterprise Technology (ICCSDET).

[6] Kusumika Krori Dutta, B. Sunny Arokia Swamy, Anil Kumar G S, Konduru Satheesh Kumar Raj; Indian Sign Language to Speech; International Journal of Advances in Engineering Research.

[7] Gouri Shankar Mishra, Ashok Kumar Sahoo; Domain Bounded English to Indian Sign Language Translation Model; International Journal of Computer Science and Informatics, Volume 4, Issue 1, Article 6, July 2014.

[8] J. L. Hernandez-Rebollar, N. Kyriakopoulos, R. W. Lindeman; A New Instrumented Approach for Translating American Sign Language into Sound and Text; Proceedings of the Sixth IEEE International Conference on Automatic Face and Gesture Recognition, 2004.

[9] Harini, R. Janani, S. Keerthana, S. Madhubala, Venkatasubramanian; Sign Language Translation; 2020 6th International Conference on Advanced Computing and Communication Systems (ICACCS).

[10] Shreyashi Narayan Sawant, M. S. Kumbhar; Real Time Sign Language Recognition using PCA; 2014 IEEE International Conference on Advanced Communications, Control and Computing Technologies.

Copyright

Copyright © 2022 Prof. R. B. Joshi, Shraddha Desale, Himani Gaikwad, Shamali Gunje, Aditi Londhe. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET41295

Publish Date : 2022-04-07

ISSN : 2321-9653

Publisher Name : IJRASET

