Ijraset Journal For Research in Applied Science and Engineering Technology

Similarity Measure of Images using SIFT and ORB Feature Matching

Authors: Shivani Singh, Sahithya Siramdas, K. Tanmayi, Laxmi Koli

DOI Link: https://doi.org/10.22214/ijraset.2022.44319

Abstract

This study identifies and examines similarities between images. The images considered show the same scene or object captured under varied conditions, such as different viewpoints or lighting, or are derived from the same original image through various alterations. Determining image similarity means comparing visual content to evaluate how alike two images are. Given two input images, the method finds the good matches and reports the percentage of good matches between them. Image similarity has been a fundamental problem in object recognition for decades. When two images are compared, the similarity measure assigns a numerical score that represents how visually similar they are; the score ranges from 0 to 1. We propose a solution using SIFT and ORB feature matching.

Introduction

I. INTRODUCTION

Image similarity detection is the act of comparing two images to evaluate how similar their visible content is. Similarity-based detection methods fall into two groups according to the features they use: global-feature-based detection and local-feature-based detection. A global-feature approach uses one or a few extracted features to represent the entire content of an image. Because the number of features is small, similarity detection based on global features is usually fast. However, because a global feature describes the image only coarsely, it is very sensitive to image changes and local modifications.

Several feature-based studies are now underway. In this work, the SIFT (Scale-Invariant Feature Transform) approach is used to find similarities between images; it performs very well in image recognition applications. To determine the degree of correspondence, we extract SIFT features from a set of images and their weighted counterparts, match the SIFT features of the given image against them, and compute the distance between two images in terms of their SIFT features. In this project, the similarity score between two images is determined using several similarity measures.

Computer vision is widely used in automated processes, medicine, engineering, and astronomy [1]. In recent years, image matching has become a significant factor in the performance of vision systems. It is used in machine vision, 3D reconstruction, remote sensing, image security, and other applications. As a consequence, a more comprehensive study of image matching is required. Image matching compares and fuses images of the same scene obtained at different times, from different viewing angles, or with different sensors, using an effective similarity measure. Gray-level-based techniques are simple and quick. Feature-based approaches are more robust in difficult settings and easier to implement for real-time matching. Similarity measures have been used in applications such as image segmentation, information retrieval, object identification, and classification [1][2].

Comparing two images shows how close they are. We use OpenCV to compute the similarity measure between two images from the features that match between them.

II. LITERATURE SURVEY

In recent years, several researchers have explored using local features to determine image similarity. Local image features, as opposed to global features, are widely employed in content-based image and video information extraction because they are substantially invariant to changes in illumination, rotation, and scale. Within a region, local feature points are usually local extrema that stand out more clearly than the other pixels of the region. A feature point is described by combining the attributes of the point itself with the attributes of its surrounding region, which keeps the description locally invariant [3].

The Scale-Invariant Feature Transform (SIFT) was developed by Dr. David G. Lowe in Canada. SIFT is a local feature detection and description approach that stays distinctive in natural images under scaling, translation, rotation, illumination change, and affine transformation.

Lowe first presented the method in 1999 and published the full description in 2004, building on existing invariant feature detection algorithms. SIFT's main goal is to turn an image match into a feature vector match. SIFT has been widely used in image recognition, and because of its robustness and computing performance it is currently a priority in research domains such as terminal guidance, computer science, and data analytics.

A variety of metrics can be used to compute the similarity measure. In this study, several forms of similarity assessment are applied, and a similarity value is obtained for each measure.

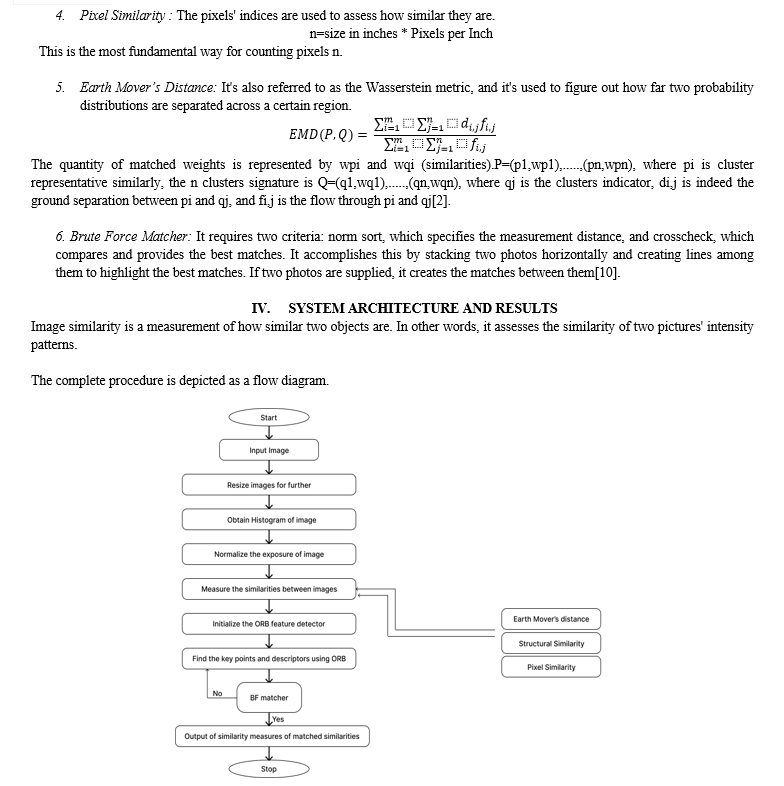

III. METHODOLOGY

There are several approaches to visual similarity detection. First, feature extraction methods such as ORB and SSIM can be combined with classification algorithms with comparatively little effort. Second, we present a criterion for analyzing image similarity and producing a similarity score based on multiple methods: ORB, SSIM, pixel similarity, and Earth mover's distance [5].

A. The Scale-Invariant Feature Transform (SIFT)

SIFT is a key-point detection method used in computer vision. It helps locate the distinctive local features of an image, commonly known as its key points. Unlike edge or HOG features, SIFT features have the advantage of being independent of image size and orientation [4].

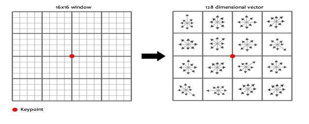

B. Oriented FAST and Rotated BRIEF (ORB)

ORB is a feature detection and description algorithm for computer vision. It is built on the FAST key-point detector and the BRIEF descriptor.

(i) FAST: compares the brightness of a pixel p with the brightness of the 16 pixels on a small circle around p. Each of these pixels is then placed in one of three classes: lighter than p, darker than p, or similar to p.

(ii) BRIEF: converts each key point found by the FAST algorithm into a binary feature vector, that is, a feature vector containing only the values 1 and 0.
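The FAST classification and the binary BRIEF vectors described above can be illustrated with a small standard-library Python sketch. It is not the authors' implementation: the brightness `threshold`, the contiguous-run length `min_run` (the usual FAST segment test, which the text does not spell out), and all function names are assumptions made for illustration.

```python
def classify_ring(center, ring, threshold):
    """Label each of the ring pixels as lighter, darker, or similar to p."""
    labels = []
    for value in ring:
        if value > center + threshold:
            labels.append("lighter")
        elif value < center - threshold:
            labels.append("darker")
        else:
            labels.append("similar")
    return labels


def is_fast_corner(center, ring, threshold=20, min_run=9):
    """Segment test (assumed): p is a corner if at least min_run contiguous
    ring pixels are all lighter or all darker than p. Assumes min_run <= len(ring)."""
    labels = classify_ring(center, ring, threshold)
    doubled = labels + labels  # the ring is circular, so let runs wrap around
    for target in ("lighter", "darker"):
        run = 0
        for label in doubled:
            run = run + 1 if label == target else 0
            if run >= min_run:
                return True
    return False


def hamming_distance(desc_a, desc_b):
    """Number of differing bits between two equal-length binary descriptors."""
    return sum(a != b for a, b in zip(desc_a, desc_b))


# Hypothetical 16-pixel ring: ten bright pixels next to six pixels like p.
ring = [200] * 10 + [100] * 6
print(is_fast_corner(100, ring))
print(hamming_distance([1, 0, 1, 1], [1, 1, 0, 1]))
```

Binary descriptors are usually compared with the Hamming distance because it only counts differing bits, which is why BRIEF-style vectors are cheap to match.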

C. Measures of Similarity Check

Determining the image similarity score requires a similarity detection model that evaluates the similarity metric and identifies the features the images have in common [4][5].

- Global and Local Features: The two primary types of features that can be extracted from an image are global and local features. Global features describe the image as a whole; examples include contour representations, shape descriptors, texture features, and the Histogram of Oriented Gradients (HOG). Local features describe the image patches around the key points of an image [2].

- SIFT and ORB Descriptor: The SIFT descriptor characterizes interest points in a gray-level image by accumulating statistics of local gradient directions of the image intensities, which gives a summary description of the local image structure in a neighborhood around each interest point. The purpose of this descriptor is to find corresponding interest points in different images.

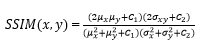

- SSIM (Structural Similarity Index): The Structural Similarity Index (SSIM) is a measure for comparing two images.

The SSIM index between two images $x$ and $y$, with $x = \{x_i \mid i = 1, \dots, M\}$ and $y = \{y_i \mid i = 1, \dots, M\}$, is defined as

$$\mathrm{SSIM}(x, y) = \frac{(2\mu_x\mu_y + C_1)(2\sigma_{xy} + C_2)}{(\mu_x^2 + \mu_y^2 + C_1)(\sigma_x^2 + \sigma_y^2 + C_2)}$$

where $C_1$ and $C_2$ are two positive constants, $\mu_x$ and $\mu_y$ are the means of $x$ and $y$, $\sigma_x^2$ and $\sigma_y^2$ are the variances of $x$ and $y$, and $\sigma_{xy}$ is their covariance. The highest SSIM score is 1, which is attained when the two images are identical. In terms of accuracy, SSIM gives a good similarity measure grounded in image quality assessment.
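As a rough illustration of the SSIM index, the sketch below computes it once over two whole images given as flat lists of gray levels, from the two means, the two variances, the covariance, and two positive stabilising constants. This is a simplification: practical implementations usually compute SSIM over local windows and average the results. The constants `k1 = 0.01` and `k2 = 0.03`, the dynamic range of 255, and the function name are assumptions rather than values given in this paper.

```python
def ssim_global(x, y, dynamic_range=255.0, k1=0.01, k2=0.03):
    """Single-window SSIM between two equal-length lists of pixel values."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("inputs must have the same length (at least 2)")
    m = len(x)
    mu_x = sum(x) / m
    mu_y = sum(y) / m
    # Unbiased variances and covariance of the two pixel sets.
    var_x = sum((a - mu_x) * (a - mu_x) for a in x) / (m - 1)
    var_y = sum((b - mu_y) * (b - mu_y) for b in y) / (m - 1)
    cov_xy = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / (m - 1)
    # Two small positive constants that keep the ratio stable.
    c1 = (k1 * dynamic_range) ** 2
    c2 = (k2 * dynamic_range) ** 2
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x * mu_x + mu_y * mu_y + c1) * (var_x + var_y + c2)
    return numerator / denominator


# Hypothetical flattened 8-bit images.
image_a = [0, 50, 100, 150, 200, 250]
image_b = [10, 55, 90, 160, 190, 255]
print(ssim_global(image_a, image_a))  # identical images give the maximum score
print(ssim_global(image_a, image_b))
```

Unlike the key-point scores discussed elsewhere in this section, this value can be negative when the two images are anti-correlated.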

1. Resizing the Images: Given two input images, we first use the height and width parameters to resize and normalize each image to a fixed size. This simplifies the later image processing and normalization steps.

2. Histogram of the Images: The histograms of the images are then obtained and used to compare them. A histogram is a visual representation of how frequently each intensity or color value occurs in an image. The histogram of an 8-bit grayscale image is a 256-element vector whose nth value is the proportion of image pixels at gray level n. Normalizing the brightness of the images is necessary for subsequent processing.

3. Finding the Key Points: The current pixel is denoted by X, and its neighbours are shown as green circles. In this way, X is tested against each of its 26 neighbours. If X is the largest or smallest of all 26 neighbours, it is called a "key point".

4. Eliminating the Bad Key Points: Some of the key points found in the previous step lie too close together or lack sufficient contrast. They are not useful as features, so we discard them.

5. Key-Point Matching: For each image pair, the descriptor of every key point in one image is compared with the descriptors in the other image. The two best matches for each key point are found, and the ratio of their descriptor distances is used as a measure of how distinctive the best match is.

6. Similarity Measure of the Images: We compute the matching score between two images by passing the image paths to each similarity measure, which computes and returns a floating-point similarity score. A score near 1 indicates a high level of resemblance, that is, a higher chance that the two images show the same content. A score near 0 indicates that the images are not particularly similar.
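The histogram, key-point detection, and key-point elimination steps above can be sketched in standard-library Python as follows. This is a minimal sketch under stated assumptions, not the authors' code: the 26 neighbours are taken to be the 8 surrounding pixels in the current layer plus the 9 pixels in each of two adjacent scale layers (as in standard SIFT), and `min_contrast`, `min_distance`, and all function names are hypothetical.

```python
def gray_histogram(pixels):
    """Normalized 256-bin histogram of a non-empty list of 8-bit gray levels."""
    counts = [0] * 256
    for value in pixels:
        counts[value] += 1
    total = len(pixels)
    return [count / total for count in counts]


def is_extremum(below, current, above):
    """True if the center of `current` is strictly larger or strictly smaller
    than all 26 neighbours. Each argument is a 3x3 list of responses from one
    of three adjacent scale layers (assumed layout)."""
    x = current[1][1]
    neighbours = []
    for index, layer in enumerate((below, current, above)):
        for row in range(3):
            for col in range(3):
                if index == 1 and row == 1 and col == 1:
                    continue  # skip X itself
                neighbours.append(layer[row][col])
    return x > max(neighbours) or x < min(neighbours)


def filter_keypoints(keypoints, min_contrast=0.03, min_distance=2.0):
    """Drop low-contrast key points and points too close to a stronger one.
    Each key point is an (x, y, response) tuple."""
    kept = []
    for x, y, response in sorted(keypoints, key=lambda k: -abs(k[2])):
        if abs(response) < min_contrast:
            continue
        far_enough = all(
            (x - kx) ** 2 + (y - ky) ** 2 >= min_distance ** 2
            for kx, ky, _ in kept
        )
        if far_enough:
            kept.append((x, y, response))
    return kept


# Hypothetical data: a peak surrounded by flat layers, and four candidates.
flat = [[1, 1, 1] for _ in range(3)]
peak = [[1, 1, 1], [1, 5, 1], [1, 1, 1]]
print(is_extremum(flat, peak, flat))
print(filter_keypoints([(0, 0, 0.5), (0.5, 0, 0.4), (10, 10, 0.01), (5, 5, -0.2)]))
```

Sorting by response strength before filtering means that, when two candidates are too close, the stronger one is the one that survives.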

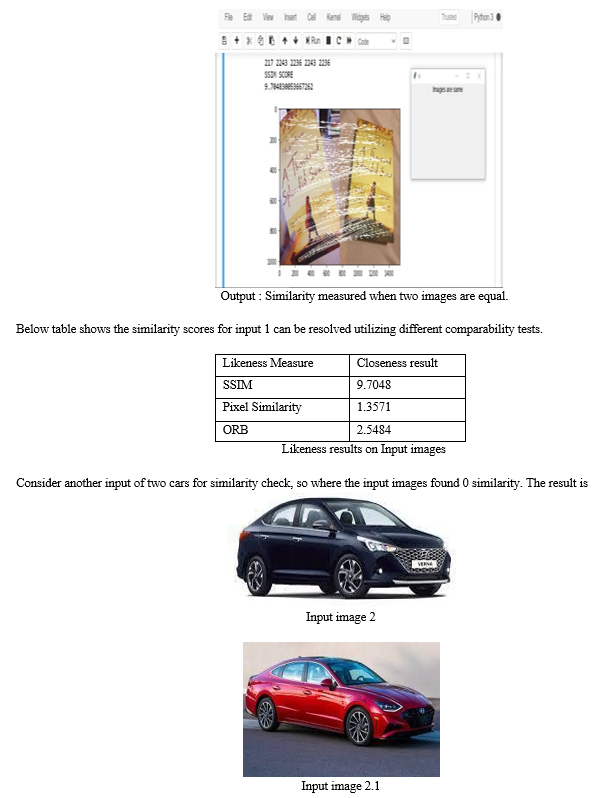
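The key-point matching and similarity scoring steps can be sketched in the same way. The sketch assumes real-valued descriptors compared by Euclidean distance, a ratio threshold of 0.75, and a score defined as the number of good matches divided by the number of descriptors in the first image; none of these choices is stated in the paper, and the function names are hypothetical.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length descriptors."""
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)))


def good_matches(desc_a, desc_b, ratio=0.75):
    """Ratio test: keep a match only if the best distance is clearly
    smaller than the second-best distance."""
    matches = []
    for i, descriptor in enumerate(desc_a):
        ranked = sorted(
            (euclidean(descriptor, other), j) for j, other in enumerate(desc_b)
        )
        if len(ranked) < 2:
            continue  # the ratio test needs two candidates
        (best, j), (second, _) = ranked[0], ranked[1]
        if best < ratio * second:
            matches.append((i, j, best))
    return matches


def similarity_score(desc_a, desc_b, ratio=0.75):
    """Fraction of descriptors in the first image with a good match (0 to 1)."""
    if not desc_a or not desc_b:
        return 0.0
    return len(good_matches(desc_a, desc_b, ratio)) / len(desc_a)


# Hypothetical 2-D descriptors; real SIFT descriptors have 128 dimensions.
first = [[0, 0], [10, 10]]
second = [[0, 1], [10, 10], [50, 50]]
print(good_matches(first, second))
print(similarity_score(first, second))
```

Because each descriptor in the first image contributes at most one match, the score stays between 0 and 1. It is not symmetric in its two arguments, so a symmetric variant would need a different normalisation.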

V. RESULTS

Images of any dimension can be used as inputs; they are resized and normalised during the process, and the overall similarity between two images is then assessed using several similarity metrics. The method finds correspondences between the two images and draws the matches using a brute-force matcher, as shown in the results below. Each measure has its own way of measuring similarity; the similarity scores are generated with the Structural Similarity Index (SSIM), pixel similarity, Oriented FAST and Rotated BRIEF (ORB), and Earth mover's distance. The similarity scores of each measure are presented here so that they can be compared. Two different books are used as input images in this example, as shown below. Comparing them yields the similarity measures below; the table shows how the similarity scores for input 1 are obtained with the different similarity tests.

Conclusion

In this research, the SIFT (Scale-Invariant Feature Transform) technique is used to discover similarities across images, and key points are detected and matched using the ORB-based matcher. For large data sets, this matcher is more efficient. The main advantage of SIFT features over edge or HOG features is that they are unaffected by the image's size or orientation, which makes them robust to image translation and rotation. Given the input images, we can determine their similarity measure. Using the SIFT technique, we can compare two or more images and obtain the similarity measure. Because of its slowness, this method may not be the ideal solution for some real-time applications. However, compared with prior algorithms, it is accurate and efficient.

References

[1] Srikar Appalaraju, and Vineet Chaoji “Image siTsung-Ting Kuo, Hugo Zavaleta Rojas and Lucila Ohno-Machado (2019) Comparison of blockchain platforms: a systematic review and healthcare examples.

[2] Dietrich Van der Weken, Mike Nachtegael, Etienne E. Kerre, “An Overview of Similarity measures for images”, IEEE International Conference on Acoustics, Speech, and Signal Processing, 2002.

[3] Jian Zhao, Yiliu Feng, Shandong Yuan, Wan Zheng Cai, “A more brief and efficient SIFT image matching algorithm for computer vision”, in IEEE International Conference.

[4] Bing Zhong, Yubai Li, “Image Feature Point Matching Based on Improved SIFT Algorithm”, in IEEE 4th International Conference on Image, Vision and Computing.

[5] Hyun-Bin Joo, Jae Wook Jeon, “Feature-point extraction based on an improved SIFT algorithm”, in 17th International Conference on Control, Automation and Systems (ICCAS), 2017.

[6] Routray S., Ray A.K., Mishra C., “Analysis of various image feature extraction methods against noisy image: SIFT, SURF and HOG”, in Proceedings of the 2017 2nd IEEE International Conference on Electrical, Computer and Communication Technologies, ICECS 2017.

[7] Kavutse Vianney Augustine, Huang Dongjun, “Image similarity for rotation invariants image retrieval system”, in International Conference on Multimedia Computing and Systems, 2019.

[8] Alexandra Gilinsky, Lihi Zelnik-Manor, “SIFT pack: A Compact Representation for Efficient SIFT Matching”, in IEEE International Conference on Computer Vision, 2013.

[9] Patil J.S., Pradeepini G., “SIFT: A comprehensive”, in International Journal of Recent Technology and Engineering, 2019.

[10] Amila Jakubovic, Jasmin Velagi, Sarajevo, Bosnia and Herzegovina, “Image Feature Matching and Object Detection using Brute-Force Matchers”, at International Symposium ELMAR, IEEE, November 2018.

[11] Vineetha Vijayan, PushpalathaKp, “FLANN Based Matching with SIFT Descriptors for Drowsy Features Extraction”, in Fifth International Conference on Image Information Processing (ICIIP), 2019.

Copyright

Copyright © 2022 Shivani Singh, Sahithya Siramdas, K. Tanmayi, Laxmi Koli. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET44319

Publish Date : 2022-06-15

ISSN : 2321-9653

Publisher Name : IJRASET
