Ijraset Journal For Research in Applied Science and Engineering Technology

Twitter Data Sentiment Analysis

Authors: Mohd. Naved Qureshi, Anas Iqubal, Hasan Adeeb, Dr. Inderpreet Kaur

DOI Link: https://doi.org/10.22214/ijraset.2023.50174

Abstract

Twitter sentiment analysis has gained significant attention in recent years due to the vast amount of data generated on social media platforms and the potential applications of sentiment analysis in various domains. This paper presents an overview of Twitter sentiment analysis, including its applications, challenges, and state-of-the-art techniques. We discuss the various methods and algorithms used for sentiment analysis and highlight their advantages and limitations. We also examine the ethical considerations associated with sentiment analysis and the need for responsible and transparent use of this technique. Through a comprehensive analysis of the existing literature, we explore the potential of Twitter sentiment analysis for understanding public opinion and its implications for various domains, including marketing, politics, and social sciences. Finally, we present a case study to demonstrate the application of sentiment analysis in the context of real-world data. Our analysis shows that Twitter sentiment analysis has significant potential for understanding public opinion, tracking trends, and providing valuable insights into various domains. However, it also highlights the need for careful consideration of ethical concerns and the importance of responsible and transparent use of this technique.

I. INTRODUCTION

Twitter sentiment analysis has emerged as a valuable tool for understanding public opinion on various topics. With over 300 million active users, Twitter provides a vast amount of data that can be analysed to gain insights into people's attitudes and emotions towards various issues. Sentiment analysis refers to a computational technique in the field of natural language processing, which involves the automatic identification and classification of the sentiment expressed in text, such as positive, negative, or neutral, based on the language used. In recent years, this technique has gained increasing attention from researchers in various fields, including computer science, social sciences, and marketing. This research paper aims to provide an overview of Twitter sentiment analysis, including its applications, challenges, and current state-of-the-art techniques. Through a comprehensive analysis of the existing literature, this paper will explore the potential of Twitter sentiment analysis for understanding public opinion and its implications for various domains.

The emergence of social media platforms such as Twitter has transformed the manner in which individuals communicate their viewpoints and disseminate knowledge. With millions of tweets being generated every day, Twitter has become a valuable source of data for researchers to analyse public sentiment and track trends. The process of analysing sentiments on Twitter is a natural language processing technique that involves categorizing tweets into positive, negative, or neutral sentiments. This technique has gained significant attention in recent years, especially in the field of data science and machine learning, due to its potential applications in various domains, such as marketing, politics, and social sciences. In this research paper, we present an overview of Twitter sentiment analysis, including its underlying algorithms, challenges, and applications. We also discuss the ethical considerations associated with sentiment analysis and the need for responsible and transparent use of this technique. By exploring the latest developments in Twitter sentiment analysis, this paper aims to provide insights into the potential of this technique for understanding public opinion and its impact on society.

II. LITERATURE SURVEY

Sentiment analysis of Twitter data has gained considerable attention in recent times due to its potential applications in various industries. However, the complex data structure and speech variation pose significant challenges for analysis. Researchers have conducted several studies to explore the potential of sentiment analysis in understanding public opinion, tracking trends, and providing insights into different domains.

In analyzing Twitter sentiment, Aliza Sarlan, Chayanit Nadam, and Shuib Basri used a simple method of extracting tweets in JSON format and assigning polarity using a Python lexicon dictionary. On the other hand, Mandava Geetha Bhargava and Duvvada Rajeswara Rao utilized learning methods, achieving better accuracy by collecting cryptocurrency data and applying algorithms such as Naïve Bayes and SVM (Support Vector Machine). Their experiments indicated that the Naïve Bayes classifier was more accurate than SVM.

Agarwal, Xie, Vovsha, Rambow, and Passonneau conducted a study comparing a unigram model to a feature-based model and a tree kernel-based model. The results indicated that the feature-based model outperformed the unigram model by a small margin, while both the unigram and feature-based models were outperformed by the tree kernel-based model by a significant margin.

Akshi Kumar and Teeja Mary Sebastian employed a unique approach that combined both corpus-based and lexicon-based techniques, which is a rare combination in the current trend of machine learning techniques dominating the field. They utilized adjectives and verbs as features, employing corpus-based techniques to determine the semantic orientation of various adjectives present in tweets and a lexicon dictionary to determine the polarity of verbs. The total sentiment polarity of tweets was expressed using a linear equation.

K. Arun et al. collected data related to various aspects of demonetization from Twitter and used the R language to analyze the tweets. The results were also visualized using word clouds and other plots, which indicated that more people were in favor of demonetization than against it. Vaibhavi N. Patodkar and Imran R. Shaikh endeavored to predict the sentiment of audiences towards a random TV show as either positive or negative. They obtained comments about various TV shows and used them as the dataset for training and testing their model. The chosen classifier was the Naïve Bayes classifier, and the results were represented in a pie chart which indicated that the polarity of tweets was more negative than positive.

The potential use of sentiment analysis in politics was explored by Tumasjan et al., who used it to predict the results of the 2009 German federal elections. They collected around 100,000 tweets related to different political parties and used the Linguistic Inquiry and Word Count (LIWC2007) software to derive sentiments from them. The results obtained were found to be similar to the actual election results. Another study, conducted by Dr. Rajiv and colleagues, focused on applying sentiment analysis in crisis situations. They collected data on the 2014 Kashmir floods, consisting of 8490 tweets, and applied a Naïve Bayes classification technique to it. Their research suggested that analyzing emotions in crisis situations could help the government save lives.

III. DATA CHARACTERISTICS

Although there are numerous social networking sites available, this paper focuses specifically on Twitter, which has gained immense popularity due to its unique writing format. Some of the characteristics of tweets include:

- Tweets are short messages consisting of a maximum of 280 characters.

- With an average of about 1.2 billion tweets posted each day, Twitter has emerged as a widely used social networking platform.

- Tweets can cover a wide variety of topics, from politics to entertainment to technology.

- Tweets are often written in an informal style, with spelling mistakes, slang, and emoticons commonly used.

- Twitter is a real-time platform, with tweets updated frequently.

- Emoticons are used to represent facial expressions in a written form, often created with the use of punctuation and other characters.

- The "@" symbol is used to mention other Twitter users in a tweet, directing the message to them.

- The "#" symbol is used to indicate the topic of a tweet, known as a hashtag.

- The "RT" symbol is used to indicate that a tweet is a retweet, meaning it has been posted again by another user.

In conclusion, Twitter data is a valuable source for sentiment analysis due to its vast amount of real-time user-generated content. With its unique format and characteristics such as hashtags, user mentions, and emoticons, Twitter offers a wide range of information that can be used to understand public opinion on various topics. By utilizing natural language processing techniques, researchers can gain insights into the sentiment of tweets and use this information for various applications such as predicting election results, analysing brand perception, and crisis management. The potential of Twitter data for sentiment analysis is vast, and with further advancements in technology, we can expect to see even more sophisticated analysis and applications in the future.

IV. METHODOLOGY

The proposed method for sentiment analysis in this paper can be represented in 5 stages, each of which is described below:

A. Data Collection

Data collection is the first phase of the analysis, as there must be data to analyze. In our experiments we used the Python programming language as a tool. Data collection for this analysis can be carried out in two ways; in our project we used the first one.

The first way is to collect pre-organized data from sites such as Kaggle. On these sites, the data is uploaded by the developers of the sites themselves or is posted by different researchers for free. All one needs to do to acquire this data is to create a free account on these sites.

The second way is to extract data manually from Twitter using an API client. For this we chose Tweepy, a Python library for the Twitter API. The version of Tweepy we tried was not compatible with newer versions of Python (Python 3.7), so an older version of Python (Python 2.7) was needed to use it. To access tweets through the API, we first need to authenticate the console from which we are trying to access Twitter. This can be done by following the steps listed below:

- Creation of a twitter account.

- Logging in at the developer portal of twitter.

- Select "New App" at developer portal.

- A form for the creation of a new app appears; fill it out.

- After this, the app for which the form was filled out goes for review by the Twitter team.

- Only once the review is complete and the registered app is authorized is the user provided with an "API key" and an "API secret".

- After this, an "Access token" and an "Access token secret" are given.
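Once the four credentials from the steps above are available, authentication and tweet retrieval can be sketched as follows. This is only an illustration, not the script used in this paper: it assumes a recent Tweepy release (v4) on Python 3, API access that still permits the standard search endpoint, and the helper names `build_query` and `fetch_tweets` are hypothetical.

```python
def build_query(terms):
    """Join search terms with OR, e.g. ['#pfizer', 'BioNTech'] -> '#pfizer OR BioNTech'."""
    return " OR ".join(terms)


def fetch_tweets(api_key, api_secret, access_token, access_token_secret,
                 terms, limit=100):
    """Authenticate with the four credentials and return up to `limit` tweet texts.

    Assumption: Tweepy v4 is installed. The import is done lazily so that
    build_query() can be used without Tweepy.
    """
    import tweepy  # third-party library

    # OAuth 1.0a user context built from the keys and tokens issued by Twitter.
    auth = tweepy.OAuth1UserHandler(
        api_key, api_secret, access_token, access_token_secret
    )
    api = tweepy.API(auth, wait_on_rate_limit=True)

    # Cursor handles pagination of the search results.
    cursor = tweepy.Cursor(
        api.search_tweets,
        q=build_query(terms),
        lang="en",
        tweet_mode="extended",  # return the untruncated text as `full_text`
    )
    return [status.full_text for status in cursor.items(limit)]
```

A call such as `fetch_tweets(key, secret, token, token_secret, ["#pfizer", "BioNTech"])` would then return a list of tweet texts, subject to the rate limits and access level of the account.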

These keys and tokens are unique for each user, and only with their help can one access tweets directly from Twitter. For this paper we used a large data set consisting of almost 11k tweets. These tweets were collected using #pfizer and BioNTech and are therefore about various studies of the vaccines. We used the TextBlob package for Python to pre-annotate the polarity of these tweets.
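The polarity pre-annotation can be sketched as below. TextBlob reports a polarity score between -1 and 1 for a piece of text; mapping scores above zero to positive, below zero to negative, and exactly zero to neutral is an assumption made for this sketch, as are the helper names.

```python
def label_from_polarity(polarity, threshold=0.0):
    """Map a polarity score in [-1, 1] to a sentiment label.

    Assumption: scores within +/- threshold of zero count as neutral.
    """
    if polarity > threshold:
        return "positive"
    if polarity < -threshold:
        return "negative"
    return "neutral"


def annotate(texts):
    """Return one sentiment label per text using TextBlob's polarity score."""
    from textblob import TextBlob  # third-party library, imported lazily

    return [label_from_polarity(TextBlob(text).sentiment.polarity)
            for text in texts]
```

Labels produced this way are only as reliable as the underlying lexicon, so a manually checked sample is a sensible sanity test before training on them.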

| Data set | No. of tweets |
| --- | --- |
| Training data | 8434 |
| Testing data | 2109 |

Table 1: Data Distribution

B. Data Preprocessing

Pre-processing converts raw data into a more convenient format that can be fed to a classifier in order to improve its accuracy. In our case, the raw data obtained from Kaggle contains many useless characters, which are very common in tweets.

We remove all the unnecessary characters and words from this data using a Python module known as Regular Expressions, re for short. This module uses symbolic patterns to represent different kinds of noise in the data, which makes them easy to drop. In Twitter data specifically, there are various common uninformative phrases and spelling mistakes that need to be removed to boost the accuracy of our results. These can be summed up as follows:

- Hashtags: these are very common in tweets. A hashtag represents the topic of interest about which the tweet is written. Hashtags look something like #topic.

- @Usernames: these represent the user mentions in a tweet. They are used when a tweet is written and then associated with some Twitter user.

- Retweets (RT): as the name suggests, a retweet occurs when a tweet is posted again by the same or a different user.

- Emoticons: these are very commonly found in tweets. Facial expressions such as a smile are formed using punctuation marks; these are known as emoticons.

- Stop Words: stop words are words that carry no sentiment. Words such as "it", "is", and "the" are known as stop words.
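The cleaning steps listed above can be sketched with the `re` module as follows. The patterns, the short stop-word list, and the function name `clean_tweet` are illustrative assumptions rather than the exact rules used in our experiments. Two choices go beyond the list: URLs are also stripped, and for hashtags only the "#" marker is dropped while the topic word is kept.

```python
import re

# Small illustrative stop-word list; a real run would use a fuller one.
STOP_WORDS = {"a", "an", "and", "the", "it", "is", "are", "to", "of", "in", "for", "on"}

URL_RE = re.compile(r"https?://\S+|www\.\S+")
RETWEET_RE = re.compile(r"^\s*RT\b:?\s*", flags=re.IGNORECASE)
MENTION_RE = re.compile(r"@\w+")
HASHTAG_RE = re.compile(r"#(\w+)")
# Simple western-style emoticons such as :) ;-( :D =P
EMOTICON_RE = re.compile(r"[:;=][\-']?[\)\(\]\[DPp/\\|]")
NON_ALPHA_RE = re.compile(r"[^a-z\s]")


def clean_tweet(text):
    """Strip Twitter-specific noise and stop words from a single tweet."""
    text = URL_RE.sub(" ", text)          # links (assumption: treated as noise)
    text = RETWEET_RE.sub(" ", text)      # leading "RT" marker
    text = MENTION_RE.sub(" ", text)      # @username mentions
    text = HASHTAG_RE.sub(r"\1", text)    # keep the topic word, drop the '#'
    text = EMOTICON_RE.sub(" ", text)     # punctuation-based emoticons
    text = NON_ALPHA_RE.sub(" ", text.lower())  # remaining punctuation and digits
    tokens = [tok for tok in text.split() if tok not in STOP_WORDS]
    return " ".join(tokens)


example = "RT @user: The vaccine is working :) #pfizer https://t.co/abc"
cleaned = clean_tweet(example)  # "vaccine working pfizer"
```

Emoticons are removed before lower-casing so that patterns such as `:D` are still recognized.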

C. Feature Selection

As noted in the literature survey, various researchers have used different features for classifying tweets. Our experiment uses N-grams as features, with bar charts and pie charts used to visualize the data.
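To make the N-gram features concrete, the sketch below extracts unigrams and bigrams from an already cleaned tweet and counts them. The function names and the choice of n = 1 and n = 2 are assumptions for illustration only.

```python
from collections import Counter


def ngrams(tokens, n):
    """Return all contiguous n-token sequences as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def ngram_counts(text, n_values=(1, 2)):
    """Count the n-grams of every requested size in a whitespace-tokenized text."""
    tokens = text.split()
    counts = Counter()
    for n in n_values:
        counts.update(ngrams(tokens, n))
    return counts


bigrams = ngrams(["vaccine", "is", "not", "safe"], 2)
# [("vaccine", "is"), ("is", "not"), ("not", "safe")]
```

Bigrams such as ("not", "safe") keep a negation next to the word it modifies, which a unigram model would lose.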

D. Model Selection

After pre-processing the data, it is fed to a classification model for further processing. There are various classification algorithms available, but for this paper, we chose the Logistic Regression and SVM models. Logistic Regression is a statistical model used for classification and predictive analytics by estimating the probability of an event based on independent variables. SVMs are linear classifiers that aim to maximize the margin and achieve excellent generalization performance by minimizing structural risk.
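For reference, the two models can be written in their standard textbook forms for the binary case. The symbols are assumptions introduced here: $x$ is a feature vector, $w$ and $b$ are the learned weights and bias, $y_i \in \{-1, +1\}$ are the labels, $\xi_i$ are slack variables, and $C$ is the regularization constant. A three-class problem such as ours is typically handled by a one-vs-rest or multinomial extension.

```latex
% Logistic Regression: probability of the positive class
P(y = 1 \mid x) = \sigma(w^\top x + b) = \frac{1}{1 + e^{-(w^\top x + b)}}

% Soft-margin linear SVM: maximize the margin while penalizing violations
\min_{w,\, b,\, \xi} \; \frac{1}{2}\lVert w \rVert^2 + C \sum_{i} \xi_i
\quad \text{subject to} \quad
y_i (w^\top x_i + b) \ge 1 - \xi_i, \qquad \xi_i \ge 0
```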

To train the classification model, we used the TextBlob library in Python to automatically set target values for each tweet. Most studies in the literature set these values manually to positive, negative, or null. We divided our dataset of 11,020 tweets into training and testing sets, with 8,434 tweets in the training portion and 2,109 tweets in the testing portion. Both sets were transformed into binary values using the sklearn module in Python, which contains multiple classification models and encoders for model selection, label encoding, and model evaluation. These methods will be discussed in the next section.
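A minimal sketch of this training step with scikit-learn is given below. It is not the exact pipeline used in our experiments: the TF-IDF vectorizer over unigrams and bigrams, the use of `LinearSVC` as the SVM, the 80/20 split, and the function name `train_and_score` are all assumptions.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import LabelEncoder
from sklearn.svm import LinearSVC


def train_and_score(texts, labels, test_size=0.2, seed=0):
    """Train both classifiers on a split of the data and return test accuracies."""
    # Encode string labels ("negative", "neutral", "positive") as integers.
    y = LabelEncoder().fit_transform(labels)
    x_train, x_test, y_train, y_test = train_test_split(
        texts, y, test_size=test_size, random_state=seed
    )
    scores = {}
    for name, clf in (("logreg", LogisticRegression(max_iter=1000)),
                      ("svm", LinearSVC())):
        # Vectorizer and classifier are fitted together on the training split only.
        model = make_pipeline(TfidfVectorizer(ngram_range=(1, 2)), clf)
        model.fit(x_train, y_train)
        scores[name] = model.score(x_test, y_test)
    return scores


# Toy data for illustration only; real input would be the cleaned tweets.
toy_texts = ["good vaccine", "great result", "safe and effective", "love it",
             "bad reaction", "terrible side effect", "awful news", "hate it",
             "second dose today", "trial started", "study published", "dose scheduled"]
toy_labels = ["positive"] * 4 + ["negative"] * 4 + ["neutral"] * 4
toy_scores = train_and_score(toy_texts, toy_labels)
```

Fitting the vectorizer inside the pipeline keeps vocabulary statistics from the test split out of training.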

E. Model Evaluation

Table 2 below shows a generalized form of a confusion matrix, which is one of the most commonly used and appropriate techniques for evaluating a classifier.

| | Predicted Positive | Predicted Negative |
| --- | --- | --- |
| Actual Positive | True Positive (tp) | False Negative (fn) |
| Actual Negative | False Positive (fp) | True Negative (tn) |

Table 2: General Confusion Matrix

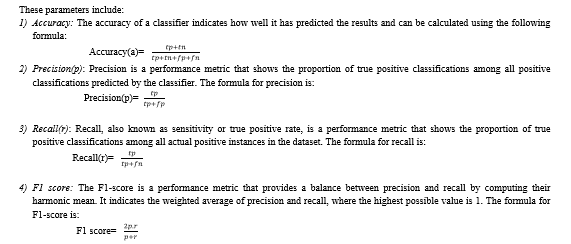

In the matrix, TP (True Positive) refers to the number of correctly classified positive instances, FN (False Negative) represents the number of actual positive instances that were incorrectly classified as negative, FP (False Positive) represents the number of actual negative instances that were incorrectly classified as positive, and TN (True Negative) represents the number of correctly classified negative instances. By analysing these values, various performance metrics such as accuracy, precision, recall, and F1-score can be calculated to evaluate the classifier's performance. By applying this technique, we can derive the generalized evaluation parameters.
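In terms of the four counts defined above, the standard definitions of these metrics are:

```latex
\text{Accuracy} = \frac{tp + tn}{tp + tn + fp + fn}
\qquad
\text{Precision} = \frac{tp}{tp + fp}
\qquad
\text{Recall} = \frac{tp}{tp + fn}

F_1 = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}
```

For a problem with more than two classes, each class is treated in turn as the positive class and all remaining classes as negative.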

V. RESULTS OF PROPOSED SYSTEM

Our experiments showed a model accuracy of 84.64% for the Logistic Regression classifier. The confusion matrix obtained after testing the classifier is given in Table 3 below:

| | Negative | Neutral | Positive |
| --- | --- | --- | --- |
| Negative | 72 | 116 | 38 |
| Neutral | 4 | 1008 | 9 |
| Positive | 8 | 149 | 705 |

Table 3: Output Confusion Matrix
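The reported accuracy can be checked directly from Table 3, since accuracy is the sum of the diagonal entries divided by the total number of test tweets:

```latex
\text{Accuracy} = \frac{72 + 1008 + 705}{2109} = \frac{1785}{2109} \approx 0.8464
```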

The other model evaluation parameters mentioned in the previous section are given for this experiment in Table 4 below:

Table 4: - Evaluation Parameters

Further, we obtained an accuracy of 87.34% for the SVM classifier. The confusion matrix obtained after testing the classifier is given in Table 5 below:

| | Negative | Neutral | Positive |
| --- | --- | --- | --- |
| Negative | 101 | 91 | 34 |
| Neutral | 6 | 1007 | 8 |
| Positive | 14 | 114 | 734 |

Table 5: Output Confusion Matrix

The other model evaluation parameters mentioned in the previous section are given for this experiment in Table 6 below:

| | Precision | Recall | F1-Score | Support |
| --- | --- | --- | --- | --- |
| Negative | 0.83 | 0.45 | 0.58 | 226 |
| Neutral | 0.83 | 0.99 | 0.90 | 1021 |
| Positive | 0.95 | 0.85 | 0.90 | 862 |
| Accuracy | | | 0.87 | 2109 |
| Macro average | 0.87 | 0.76 | 0.79 | 2109 |
| Weighted average | 0.88 | 0.87 | 0.87 | 2109 |

Table 6: Evaluation Parameters
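The per-class parameters follow from the SVM confusion matrix in Table 5. The sketch below recomputes them, assuming that the rows of Table 5 are the actual classes and the columns the predicted classes, both in the order negative, neutral, positive; the function name is hypothetical. Under that assumption, the class with support 226 is the negative class.

```python
def per_class_metrics(matrix, labels):
    """Compute accuracy plus precision, recall, F1 and support for each class.

    Assumption: matrix[i][j] counts items of actual class i predicted as class j.
    """
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(labels)))
    report = {}
    for i, label in enumerate(labels):
        tp = matrix[i][i]
        support = sum(matrix[i])                  # row sum: actual members of the class
        predicted = sum(row[i] for row in matrix)  # column sum: predicted as the class
        precision = tp / predicted if predicted else 0.0
        recall = tp / support if support else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        report[label] = {"precision": precision, "recall": recall,
                         "f1": f1, "support": support}
    return correct / total, report


SVM_MATRIX = [
    [101, 91, 34],    # actual negative
    [6, 1007, 8],     # actual neutral
    [14, 114, 734],   # actual positive
]
accuracy, report = per_class_metrics(SVM_MATRIX, ["negative", "neutral", "positive"])
macro_recall = sum(row["recall"] for row in report.values()) / len(report)
```

Rounded to two decimals, the values agree with those reported in Table 6, for example a recall of 0.45 for the class with support 226 and a macro-averaged recall of 0.76.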

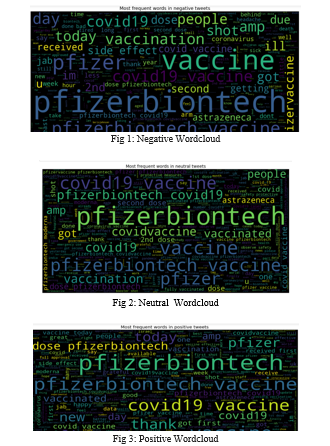

The positive, neutral, and negative tweets can also be represented using word clouds. Word clouds for our data set are given below:

Conclusion

In conclusion, the task of sentiment analysis in micro-blogging is still in the developmental stage and has room for improvement. The use of more complex models, such as incorporating the proximity of negation words to the unigrams being analyzed, could enhance the accuracy of the analysis. Additionally, exploring the effect of bigrams and trigrams could further improve the performance of the analysis. However, this would require a larger labeled dataset than the one used in this study. Overall, the field of sentiment analysis in micro-blogging is promising and offers opportunities for future research to refine and enhance the techniques used for sentiment classification.

References

[1] Arun K., Sinagesh A., and Ramesh M., "Twitter sentiment analysis on demonetization tweets in India using R language", International Journal of Computer Engineering in Research Trends, vol. 4, no. 6, pp. 252-258, 2017.

[2] Aliza Sarlan, Chayanit Nadam, and Shuib Basri, "Twitter sentiment analysis", 2014 International Conference on Information Technology and Multimedia (ICIMU), November 18-20, 2014, Putrajaya, Malaysia, 978-1-4799-5423-0/14/$31.00 ©2014 IEEE, 212.

[3] Mandava Geetha Bhargava and Duvvada Rajeswara Rao, "Sentiment analysis on social media data using R", International Journal of Engineering & Technology, 7 (2.31), pp. 80-84, 2018.

[4] Agarwal, A., Xie, B., Vovsha, I., Rambow, O., and Passonneau, R., "Sentiment analysis of Twitter data", in Proceedings of the ACL 2011 Workshop on Languages in Social Media, pp. 30-38, 2011.

[5] IJCSI International Journal of Computer Science Issues, Vol. 9, Issue 4, No 3, July 2012, ISSN (Online): 1694-0814.

[6] International Journal of Innovations & Advancement in Computer Science (IJIACS), ISSN 2347-8616, Volume 6, Issue 7, July 2017.

[7] Tumasjan, A., Sprenger, T., Sandner, P., and Welpe, "Predicting elections with Twitter: What 140 characters reveal about political sentiment", in Proceedings of the Fourth International AAAI Conference on Weblogs and Social Media, pp. 178-185, 2010.

[8] E. Kouloumpis, T. Wilson, J. Moore, Twitter sentiment analysis: The good the bad and the omg!, Proc. 5th Int. AAAI Conf. Weblogs Social Media, pp. 538-541, 2011.

[9] D. Terrana, A. Augello, G. Pilato, Automatic unsupervised polarity detection on a Twitter data stream, Proc. IEEE Int. Conf. Semantic Comput., pp. 128-134, Sep. 2014.

[10] H. Saif, Y. He, M. Fernandez, H. Alani, Semantic patterns for sentiment analysis of Twitter, Proc. 13th Int. Semantic Web Conf., pp. 324-340, Apr. 2014.

[11] H. Saif, Y. He, H. Alani, Alleviating data sparsity for Twitter sentiment analysis, Proc. CEUR Workshop, pp. 2-9, Sep. 2012.

[12] H. G. Yoon, H. Kim, C. O. Kim, M. Song, Opinion polarity detection in Twitter data combining shrinkage regression and topic modeling, J. Informetrics, vol. 10, pp. 634-644, 2016.

[13] F. H. Khan, U. Qamar, S. Bashir, SentiMI: Introducing point-wise mutual information with SentiWordNet to improve sentiment polarity detection, Appl. Soft Comput., vol. 39, pp. 140-153, Apr. 2016.

[14] A. Agarwal, B. Xie, I. Vovsha, Sentiment analysis of Twitter data, Proc. Workshop Lang. Social Media Assoc. Comput. Linguistics, pp. 30-38, 2011.

[15] Bo Pang, Lillian Lee, A sentimental education: sentiment analysis using subjectivity summarization based on minimum cuts, ACL '04 Proceedings of the 42nd Annual Meeting on Association for Computational Linguistics, Article No. 271, 2004.

[16] Kong X, Jiang H, Yang Z, Xu Z, Xia F, Tolba A (2016) Exploiting Publication Contents and Collaboration Networks for Collaborator Recommendation. PLoS ONE 11(2): e0148492.

[17] G. Vinodhini et al., Sentiment Analysis and Opinion Mining: A Survey, International Journal of Advanced Research in Computer Science and Software Engineering (IJARCSSE), Vol 2, Issue 6, June 2012.

[18] Jayashri Khairnar and Mayura Kinikar, Machine Learning Algorithms for Opinion Mining and Sentiment Classification, International Journal of Scientific and Research Publications (IJSRP), Volume 3, Issue 6, ISSN 2250-3153, June 2013.

Copyright

Copyright © 2023 Mohd. Naved Qureshi, Anas Iqubal, Hassan Adeeb, Dr. Inderpreet Kaur. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET50174

Publish Date : 2023-04-07

ISSN : 2321-9653

Publisher Name : IJRASET
