Ijraset Journal For Research in Applied Science and Engineering Technology

Facial Expression Recognition Using Convolutional Network

Authors: Narinder Kaur, Kiranjeet Kaur, Abdul Hafiz

DOI Link: https://doi.org/10.22214/ijraset.2022.44447

Abstract

This paper focuses on emotion recognition from facial expressions, with the aim of detecting expressions accurately and efficiently. A Convolutional Neural Network (CNN) is developed to recognize emotions from facial expressions and classify them into seven basic categories: happy, sad, neutral, surprise, fear, disgust, and angry. The network extracts relevant features from the input images and assigns each image one of these seven labels. The proposed model is evaluated on the Facial Emotion Recognition 2013 (FER2013) dataset, with the goal of achieving the best possible accuracy. Facial expression recognition has been an active area of research over the past few decades, and it remains challenging because of high intra-class variation.

I. INTRODUCTION

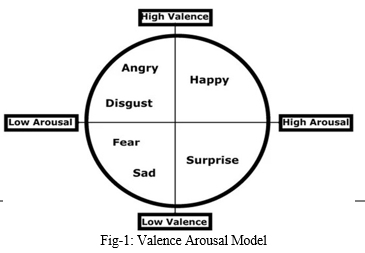

All of us, at some point in our daily lives, feel happy, sad, angry, loving, and so on. These feelings are emotions. An easy way to judge a person's emotions is to look at his or her face, as the facial expression shows how that person is affected. This paper focuses on seven categories of emotion: happiness, neutrality, surprise, sadness, disgust, anger, and fear. Emotions are feelings that play a vital role in human life. They shape self-awareness and interaction with others. Most importantly, emotions play a major role in people's learning and behavior. There are many emotions one may wish to feel, such as love, joy, inspiration, or pride. Emotions also strongly affect our moods. Life would be dull without feelings such as joy and sorrow, love and fear, distress and disappointment. Emotions add color and spice to life. Emotions such as happiness, disappointment, and grief often have both physical and mental origins, and they affect behavior. The word emotion is derived from the Latin word 'emovere', which means to move or to stir up. An emotion can be defined as a state of being mentally stirred or agitated. Emotions are commonly described along two dimensions, arousal and valence: valence indicates whether the effect is positive or negative, while arousal measures how calming or exciting the stimulus is.

With the rapid growth of intelligent technology in society, the need for technology that can serve everyone's needs and select the best results is increasing rapidly. Automatic emotion recognition is important in areas such as marketing, education, robotics, and entertainment.

It is used in a variety of applications:

- In robotics, to design interactive or service robots that communicate with humans;

- In advertising, to produce targeted advertisements based on the client's emotional state;

- In education, to improve learning processes, knowledge transfer, and cognitive processes [3];

- In interactive entertainment, to recommend the most appropriate entertainment to the target audience.

II. RELATED WORK

[4] described a machine learning approach for recognizing emotions from facial expressions using the depth channel of a sensor. A Microsoft Kinect sensor was used, with an algorithm that detects local movements within the face area to recognize the actual facial expression. The approach was validated on the Facial Expressions and Emotions Database using 169 recordings of 25 persons. The evaluated system gave an overall successful detection rate of 78.8%.

[5] presented a fuzzy logic-based emotion recognition system that emphasizes Action Units (AUs) and relevant facial features. The system first processed two images to extract relevant facial features. The differences in the positions of the feature points were then fuzzified to obtain the strength of the exhibited AUs [6]. These strengths were fed through fuzzy rule sets and defuzzified to obtain the strength of the exhibited emotions.

[7] proposed using the empirical mode decomposition (EMD) technique for facial emotion recognition. The EMD algorithm can decompose any nonlinear and non-stationary signal into several intrinsic mode functions (IMFs). Because facial feature extraction through image preprocessing is a crucial step in emotion recognition, it was performed first so that only intrinsic features were selected for classifying the emotions.

[8] proposed a decision tree method for recognizing emotions from facial expressions. Relevant facial features were extracted using a geometric approach, and a supervised learning method, the decision tree, was applied. The Viola-Jones algorithm was used to detect the face, that is, to distinguish faces from non-faces. A machine learning approach called Classification and Regression Tree [9] was used to classify facial expressions into seven emotions.

III. DATASET COLLECTION

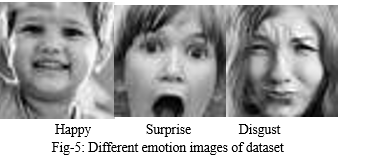

The image database used to measure the performance of the system was FER2013. It contains approximately 30,000 grayscale facial images showing different expressions, each restricted to a size of 48x48 pixels. The dataset covers seven expressions: Angry, Disgust, Fear, Happy, Sad, Surprise, and Neutral. For each subject, there are approximately 900 images depicting each classified emotion.
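As an illustration of how such a dataset can be read, the sketch below parses one record into a label and a 48x48 pixel grid. It assumes the commonly distributed `fer2013.csv` layout (columns `emotion`, `pixels`, `Usage`, with one intensity value per pixel and labels 0 to 6 in the order listed above); that layout, the helper name `parse_fer_row`, and the synthetic sample row are assumptions for illustration, not details taken from this paper.

```python
import csv
import io

# Assumed label encoding 0..6, in the order the expressions are listed above.
EMOTIONS = ["Angry", "Disgust", "Fear", "Happy", "Sad", "Surprise", "Neutral"]
IMAGE_SIZE = 48  # each image is 48x48 pixels


def parse_fer_row(row):
    """Turn one CSV row into (label_name, image), image being 48 rows of 48 ints."""
    label = EMOTIONS[int(row["emotion"])]
    values = [int(v) for v in row["pixels"].split()]
    expected = IMAGE_SIZE * IMAGE_SIZE
    if len(values) != expected:
        raise ValueError(f"expected {expected} pixels, got {len(values)}")
    image = [values[r * IMAGE_SIZE:(r + 1) * IMAGE_SIZE] for r in range(IMAGE_SIZE)]
    return label, image


# Synthetic stand-in for the real file: a single "Happy" row of uniform gray pixels.
sample_csv = "emotion,pixels,Usage\n3," + " ".join(["128"] * 2304) + ",Training\n"
rows = list(csv.DictReader(io.StringIO(sample_csv)))
label, image = parse_fer_row(rows[0])
print(label, len(image), len(image[0]))  # prints: Happy 48 48
```

With the real file, the same function could be applied to every row returned by `csv.DictReader` over the opened CSV.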

Table-1: Comparison Table of different techniques used by different authors

| Ref. | Author Name | Year | Technique Used | Accuracy Rate | Classifier Used | Dataset |
|---|---|---|---|---|---|---|
| [4] | Mariusz Szwoch et al. | 2015 | Depth Channel | 50% | SVM | Facial Expressions and Emotions Database |
| [5] | Austin Nicolai et al. | 2015 | Fuzzy System | 78.8% | Fuzzy Classifier | JAFFE |
| [7] | Hasimah Ali et al. | 2015 | Empirical Mode Decomposition | 99.75% | k-NN, SVM, ELM-RBF | JAFFE, CK |
| [9] | Fatima Zahra Salman et al. | 2016 | Decision Trees | 89.20% | Classification and Regression Tree | JAFFE, CK+ |
| [10] | Lucy Nwosu et al. | 2016 | Deep Convolutional Neural Network | 95.72% | CNN | JAFFE, CK+ |
| [11] | Neha Jain et al. | 2018 | Hybrid Deep Neural Network | 92.07% | RNN | JAFFE, MMI |

IV. PROPOSED WORK AND TECHNOLOGY USED

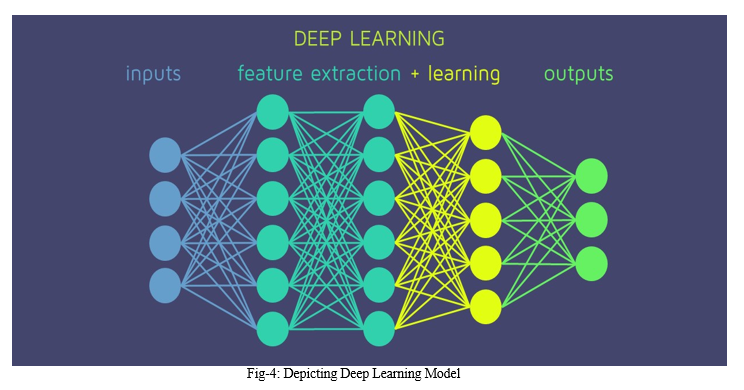

1. Deep Learning: Deep learning is a field of machine learning mainly concerned with algorithms inspired by artificial neural networks. It is an important element of data science, which includes statistics and predictive modeling. In deep learning, the algorithms are stacked in a hierarchy: each applies a nonlinear transformation to its input and uses what it learns to create a statistical model as output. Iterations continue until the output reaches an acceptable level of accuracy. Deep learning is used here for detecting emotions because it provides models and algorithms for image preprocessing, training on the dataset, classifying the images, and finally recognizing their emotions.

2. Keras: Since this work uses deep learning, Keras is used because it provides a complete framework for creating any type of neural network. It is easy to learn and supports simple as well as large and complex neural networks. Its components can be divided into three main categories:

a. Model

b. Layer

c. Core Modules

Using Keras, an algorithm such as the CNN used in this project can be expressed in a simple and efficient manner. Keras provides two types of model: the Sequential model and the Functional model.

3. Load_Model: To load the trained model, we use the load_model function from the keras.models module. The saved model file has the .h5 extension, which must be included when specifying the location of the file.
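A minimal sketch of this loading step is shown below. The wrapper name `load_emotion_model` and the path checks are illustrative additions, not part of the original project; only `keras.models.load_model` and the `.h5` extension come from the description above. Passing `compile=False` is an assumption that the model is needed for inference only.

```python
from pathlib import Path


def load_emotion_model(model_path):
    """Load a trained Keras model saved in HDF5 (.h5) format."""
    path = Path(model_path)
    if path.suffix != ".h5":
        raise ValueError(f"expected a .h5 file, got: {path.name}")
    if not path.is_file():
        raise FileNotFoundError(f"model file not found: {path}")
    # Imported lazily so the path checks above work even without Keras installed.
    from keras.models import load_model
    # compile=False skips restoring the optimizer state, which inference does not need.
    return load_model(str(path), compile=False)
```

A call such as `model = load_emotion_model("emotion_model.h5")` would then return the model, where the file name is hypothetical.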

4. OpenCV: OpenCV is an open-source library for computer vision applications such as video analysis and image processing. We use it because it plays a major role in real-time operation. It supports image processing by reading the image and extracting the region of interest. It can also rotate images so that they can be analyzed from various angles.
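The sketch below shows one way reading an image and extracting the face region could look with OpenCV, using the Haar cascade face detector described later in this section. It assumes the frontal-face cascade file bundled with the `opencv-python` package and a 48x48 target size matching the dataset; the function names, the `scaleFactor` and `minNeighbors` values, and the choice of keeping only the largest face are illustrative assumptions.

```python
def largest_box(boxes):
    """Return the (x, y, w, h) box with the largest area, or None if there are none."""
    if len(boxes) == 0:
        return None
    return max((tuple(int(v) for v in b) for b in boxes), key=lambda b: b[2] * b[3])


def extract_face_roi(image_path, size=(48, 48)):
    """Read an image, find the largest frontal face, and return it as a resized gray crop."""
    import cv2  # imported lazily; requires the opencv-python package

    image = cv2.imread(image_path)
    if image is None:
        raise FileNotFoundError(f"could not read image: {image_path}")
    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

    # Assumption: the frontal-face cascade that ships with opencv-python.
    cascade = cv2.CascadeClassifier(
        cv2.data.haarcascades + "haarcascade_frontalface_default.xml"
    )
    faces = cascade.detectMultiScale(gray, scaleFactor=1.1, minNeighbors=5)
    box = largest_box(faces)
    if box is None:
        return None  # no face found in this image
    x, y, w, h = box
    return cv2.resize(gray[y:y + h, x:x + w], size)
```

The returned crop is the region of interest that would be passed on to the classifier.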

5. NumPy: NumPy stands for Numerical Python and is one of the most useful scientific Python libraries. It provides support for large multidimensional array objects and various tools to work with them. A gray-scale image is just a function of two variables, so it can be stored as a 2-D array; if colors are present, the image becomes a 3-D array. Because NumPy is well suited to handling matrices, we use it to preprocess the image captured by the webcam before emotion detection.

6. Cascade Classifier: The Haar cascade is a popular algorithm for detecting faces, which is the first step in recognizing emotions from facial expressions. It is computationally inexpensive, fast, and gives high accuracy. We have used the Haarcascade_frontalface.xml file in this project to detect faces before classifying their emotions. The classifier works in the following steps:

a. Haar-feature Selection: A Haar feature consists of dark regions and light regions. It produces a single value by taking the difference between the sum of the intensities of the dark regions and the sum of the intensities of the light regions. This extracts the elements needed to identify the face in the image.

b. Creation of Integral Images: An integral image stores, for each pixel, the sum of all pixels above and to the left of it, so the sum over any rectangle can be obtained from a few lookups. This significantly reduces the time needed to compute the Haar features.

c. Cascade Classifier: This is a method for combining increasingly complex classifiers in a cascade, which allows negative inputs such as non-face regions to be quickly discarded while more computation is spent on promising, face-like regions. It significantly reduces the computation time and makes the process more efficient.
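To make steps a and b concrete, the following sketch computes an integral image and uses it to evaluate a simple two-rectangle Haar-like feature in plain Python. The function names and the 4x4 toy image are illustrative assumptions; the actual detector in OpenCV evaluates many such features at many positions and scales.

```python
def integral_image(img):
    """Return a (h+1) x (w+1) table where ii[r][c] is the sum of img[0:r][0:c]."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for r in range(h):
        row_sum = 0
        for c in range(w):
            row_sum += img[r][c]
            ii[r + 1][c + 1] = ii[r][c + 1] + row_sum
    return ii


def rect_sum(ii, top, left, height, width):
    """Sum of the pixels in a rectangle, using only four table lookups."""
    bottom, right = top + height, left + width
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]


def two_rect_feature(ii, top, left, height, width):
    """Two-rectangle feature: light rectangle on the left half, dark on the right half.

    The value is the sum under the dark rectangle minus the sum under the light one.
    """
    half = width // 2
    light = rect_sum(ii, top, left, height, half)
    dark = rect_sum(ii, top, left + half, height, half)
    return dark - light


# Toy 4x4 image: bright pixels (200) on the left, darker pixels (50) on the right.
img = [[200, 200, 50, 50] for _ in range(4)]
ii = integral_image(img)
print(rect_sum(ii, 0, 0, 4, 4))          # prints: 2000
print(two_rect_feature(ii, 0, 0, 4, 4))  # prints: -1200
```

Once the table is built, each rectangle sum costs the same four lookups regardless of the rectangle's size, which is where the speed-up comes from.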

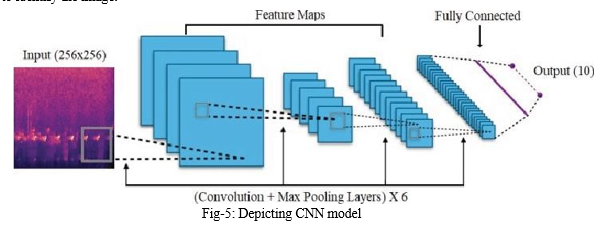

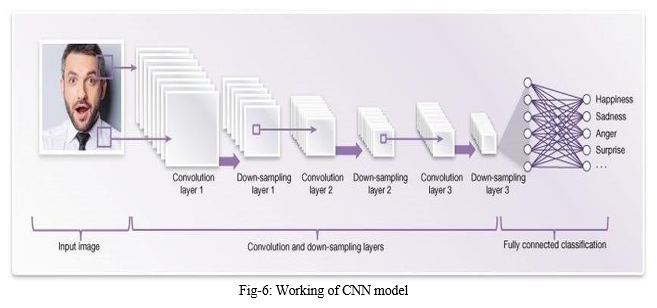

7. CNN model: A CNN is a deep learning algorithm designed specifically for working with images and videos. It takes images as input, extracts and learns their features, and classifies them based on the learned features. A CNN has various filters, each of which extracts information from the image such as edges or different kinds of shapes; these are then combined to identify the image.

This model works in two steps:

a. Feature Extraction

b. Classification
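The two steps can be sketched in plain Python as a single forward pass: a convolution filter, ReLU, and max pooling for feature extraction, followed by a dense layer with softmax for classification. This is a toy illustration and not the trained network used in this work; the 6x6 input, the edge filter, and the placeholder dense weights are all assumptions chosen to keep the example small.

```python
import math

EMOTIONS = ["Angry", "Disgust", "Fear", "Happy", "Sad", "Surprise", "Neutral"]


def conv2d_valid(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(fmap):
    """Replace negative activations with zero."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool_2x2(fmap):
    """Keep the largest value in each non-overlapping 2x2 block."""
    return [
        [max(fmap[r][c], fmap[r][c + 1], fmap[r + 1][c], fmap[r + 1][c + 1])
         for c in range(0, len(fmap[0]) - 1, 2)]
        for r in range(0, len(fmap) - 1, 2)
    ]


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Step a, feature extraction: a vertical-edge filter applied to a toy 6x6 image.
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]
vertical_edge = [[-1, 0, 1]] * 3
features = max_pool_2x2(relu(conv2d_valid(image, vertical_edge)))

# Step b, classification: a dense layer with placeholder (untrained) weights, then softmax.
flat = [v for row in features for v in row]
weights = [[0.1 * k] * len(flat) for k in range(len(EMOTIONS))]
scores = [sum(w * x for w, x in zip(row, flat)) for row in weights]
probs = softmax(scores)
predicted = EMOTIONS[probs.index(max(probs))]
print(features, predicted)
```

In a trained network the filter values and dense weights are learned from the data, so the predicted label here only demonstrates the data flow.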

Conclusion

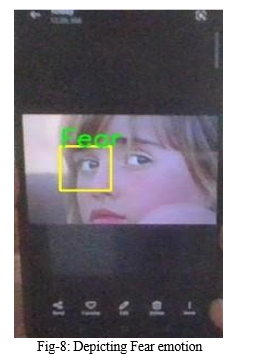

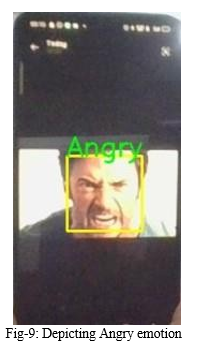

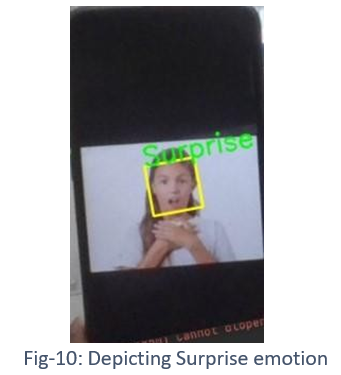

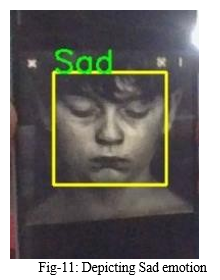

Through this work we were able to recognize emotions both in real time and in static images. We tested the system on various images and on data sets as well. The emotion recognition system was also able to access the system's webcam in order to detect the subjects' emotions in real time.

Here, we have a static image of a happy person; the emotion has been detected and displayed on the screen itself. The cues that indicate the person is happy are the broad lips, the squinting eyes, and the lift of the face. The same factors came into play here as well.

Here we have a person who is afraid. This emotion can be detected from the droopy eyebrows and other cues. The emotion has been recognized correctly and displayed on the screen.

Now we see a visibly angry person. The frown, the eyebrows, and the squinting of the eyes indicate that the person is angry. The emotion has been detected and displayed on the screen.

Here, we have the emotion of surprise; the raised eyebrows and wider eyes help us recognize it. The emotion has been detected and displayed on the screen.

The next emotion shown is sadness. The droopy face and eyebrows help us recognize this emotion, and the squinting face and other cues come into play as well. The emotion has been recognized and displayed on the screen.

Since all of these emotions were detected accurately, the Emotion Recognition System works efficiently on the tested examples, and these outcomes suggest that the implementation behaves as intended. With this project we are able to detect human emotions from facial expressions, and we hope that it helps various fields by easing their tasks.

References

[1] L. Santamaria-Granados, M. Munoz-Organero, G. Ramirez-Gonzalez, E. Abdulhay, and N. Arunkumar, “Using Deep Convolutional Neural Network for Emotion Detection on a Physiological Signals Dataset (AMIGOS),” IEEE Access, vol. 7, no. c, pp. 57–67, 2019, doi: 10.1109/ACCESS.2018.2883213.

[2] M. Spezialetti, G. Placidi, and S. Rossi, “Emotion Recognition for Human-Robot Interaction: Recent Advances and Future Perspectives,” Front. Robot. AI, vol. 7, no. December, pp. 1–11, 2020, doi: 10.3389/frobt.2020.532279.

[3] S. Kusal, S. Patil, K. Kotecha, R. Aluvalu, and V. Varadarajan, “Ai based emotion detection for textual big data: Techniques and contribution,” Big Data Cogn. Comput., vol. 5, no. 3, 2021, doi: 10.3390/bdcc5030043.

[4] M. Szwoch and P. Pieniazek, “Facial emotion recognition using depth data,” Proc. - 2015 8th Int. Conf. Hum. Syst. Interact. HSI 2015, no. D, pp. 271–277, 2015, doi: 10.1109/HSI.2015.7170679.

[5] A. Nicolai and A. Choi, “Facial Emotion Recognition Using Fuzzy Systems,” Proc. - 2015 IEEE Int. Conf. Syst. Man, Cybern. SMC 2015, pp. 2216–2221, 2016, doi: 10.1109/SMC.2015.387.

[6] D. Canedo and A. J. R. Neves, “Facial expression recognition using computer vision: A systematic review,” Appl. Sci., vol. 9, no. 21, pp. 1–31, 2019, doi: 10.3390/app9214678.

[7] H. Ali, M. Hariharan, S. Yaacob, and A. H. Adom, “Facial emotion recognition using empirical mode decomposition,” Expert Syst. Appl., vol. 42, no. 3, pp. 1261–1277, 2015, doi: 10.1016/j.eswa.2014.08.049.

[8] P. M. Ashok Kumar, J. B. Maddala, and K. Martin Sagayam, “Enhanced Facial Emotion Recognition by Optimal Descriptor Selection with Neural Network,” IETE J. Res., 2021, doi: 10.1080/03772063.2021.1902868.

[9] F. Z. Salmam, A. Madani, and M. Kissi, “Facial Expression Recognition Using Decision Trees,” Proc. - Comput. Graph. Imaging Vis. New Tech. Trends, CGiV 2016, pp. 125–130, 2016, doi: 10.1109/CGiV.2016.33.

[10] L. Nwosu, H. Wang, J. Lu, I. Unwala, X. Yang, and T. Zhang, “Deep Convolutional Neural Network for Facial Expression Recognition Using Facial Parts,” Proc. - 2017 IEEE 15th Int. Conf. Dependable, Auton. Secur. Comput. 2017 IEEE 15th Int. Conf. Pervasive Intell. Comput. 2017 IEEE 3rd Int. Conf. Big Data Intell. Compu, vol. 2018-Janua, pp. 1318–1321, 2018, doi: 10.1109/DASC-PICom-DataCom-CyberSciTec.2017.213.

[11] N. Jain, S. Kumar, A. Kumar, P. Shamsolmoali, and M. Zareapoor, “Hybrid deep neural networks for face emotion recognition,” Pattern Recognit. Lett., vol. 115, pp. 101–106, 2018, doi: 10.1016/j.patrec.2018.04.010.

Copyright

Copyright © 2022 Narinder Kaur, Kiranjeet Kaur, Abdul Hafiz. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET44447

Publish Date : 2022-06-17

ISSN : 2321-9653

Publisher Name : IJRASET
